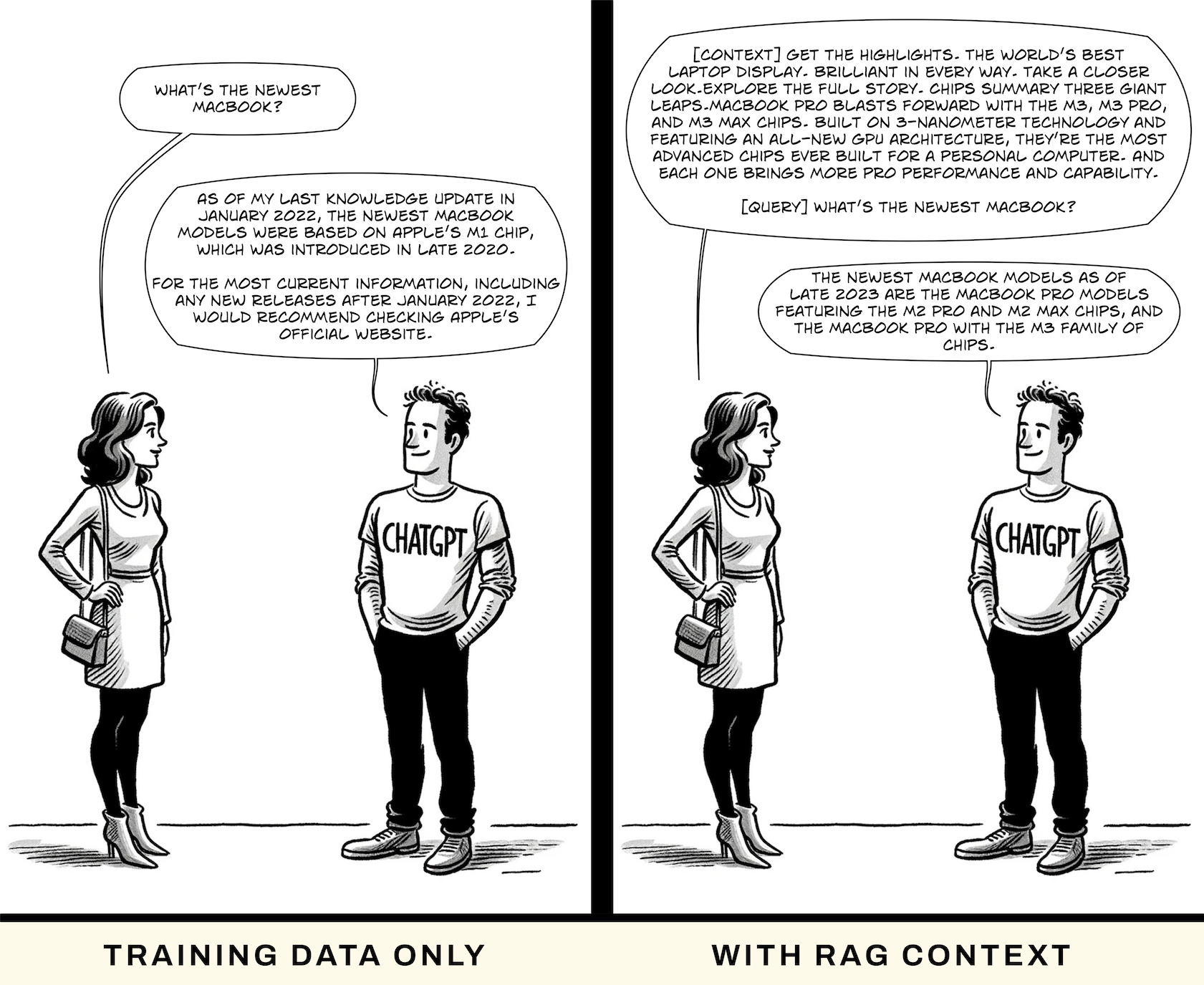

Massive language fashions (LLMs) like GPT-3.5 have confirmed to be succesful when requested about generally recognized topics or subjects that they might have obtained a big amount of coaching information for. Nevertheless, when requested about subjects that embody information they haven’t been skilled on, they both state that they don’t possess the information or, worse, can hallucinate believable solutions.

Retrieval Augmented Era (RAG) is a technique that improves the efficiency of Massive Language Fashions (LLMs) by integrating an data retrieval element with the mannequin’s textual content era capabilities. This method addresses two foremost limitations of LLMs:

-

Outdated Information: Conventional LLMs, like ChatGPT, have a static information base that ends at a sure cut-off date (for instance, ChatGPT’s information cut-off is in January 2022). This implies they lack data on latest occasions or developments.

-

Information Gaps and Hallucination: When LLMs encounter gaps of their coaching information, they could generate believable however inaccurate data, a phenomenon referred to as “hallucination.”

RAG tackles these points by combining the generative capabilities of LLMs with real-time data retrieval from exterior sources. When a question is made, RAG retrieves related and present data from an exterior information retailer and makes use of this data to offer extra correct and contextually acceptable responses by including this data to the immediate. That is equal to handing somebody a pile of papers lined in textual content and instructing them that “the reply to this query is contained on this textual content; please discover it and write it out for me utilizing pure language.” This method permits LLMs to reply with up-to-date data and reduces the chance of offering incorrect data resulting from information gaps.

RAG Structure

This text focuses on what’s referred to as “naive RAG”, which is the foundational method of integrating LLMs with information bases. We’ll focus on extra superior methods on the finish of this text, however the basic concepts of RAG methods (of all ranges of complexity) nonetheless share a number of key elements working collectively:

-

Orchestration Layer: This layer manages the general workflow of the RAG system. It receives consumer enter together with any related metadata (like dialog historical past), interacts with numerous elements, and orchestrates the circulate of knowledge between them. These layers usually embody instruments like LangChain, Semantic Kernel, and customized native code (usually in Python) to combine completely different components of the system.

-

Retrieval Instruments: These are a set of utilities that present related context for responding to consumer prompts. They play an vital position in grounding the LLM’s responses in correct and present data. They’ll embody information bases for static data and API-based retrieval methods for dynamic information sources.

-

LLM: The LLM is on the coronary heart of the RAG system, accountable for producing responses to consumer prompts. There are a lot of sorts of LLM, and may embody fashions hosted by third events like OpenAI, Anthropic, or Google, in addition to fashions operating internally on a corporation’s infrastructure. The particular mannequin used can differ based mostly on the applying’s wants.

-

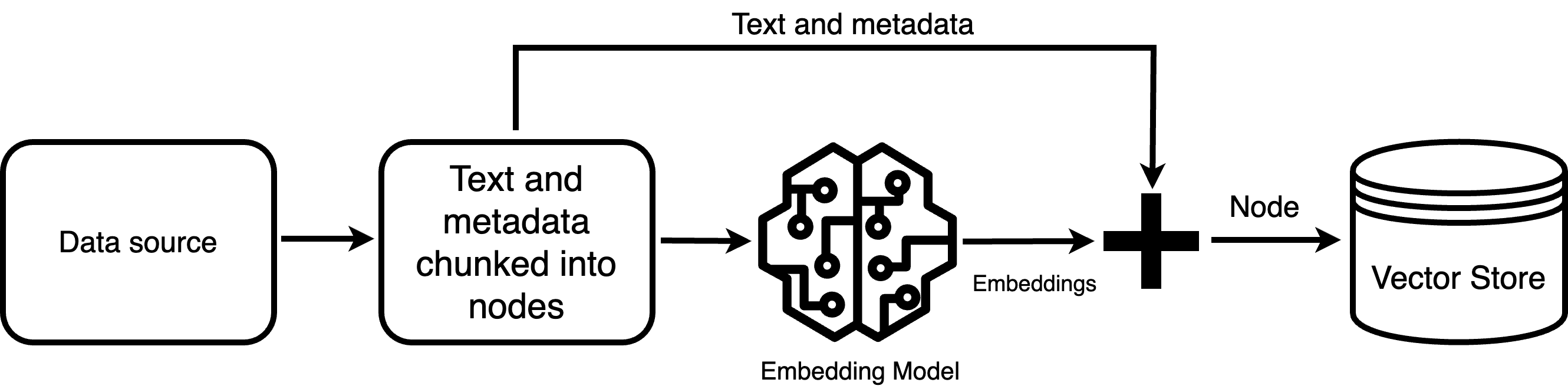

Information Base Retrieval: Entails querying a vector retailer, a sort of database optimized for textual similarity searches. This requires an Extract, Rework, Load (ETL) pipeline to arrange the info for the vector retailer. The steps taken embody aggregating supply paperwork, cleansing the content material, loading it into reminiscence, splitting the content material into manageable chunks, creating embeddings (numerical representations of textual content), and storing these embeddings within the vector retailer.

-

API-based Retrieval: For information sources that enable programmatic entry (like buyer data or inner methods), API-based retrieval is used to fetch contextually related information in real-time.

-

Prompting with RAG: Entails creating immediate templates with placeholders for consumer requests, system directions, historic context, and retrieved context. The orchestration layer fills these placeholders with related information earlier than passing the immediate to the LLM for response era. Steps taken can embody duties like cleansing the immediate of any delicate data and guaranteeing the immediate stays throughout the LLM’s token limits

The problem with RAG is discovering the proper data to offer together with the immediate!

Indexing Stage

- Knowledge Group: Think about you’re the little man within the cartoon above, surrounded by textbooks. We take every of those books and break them into bite-sized items—one may be about quantum physics, whereas one other may be about house exploration. Every of those items, or paperwork, is processed to create a vector, which is like an deal with within the library that factors proper to that chunk of knowledge.

- Vector Creation: Every of those chunks is handed by means of an embedding mannequin, a sort of mannequin that creates a vector illustration of lots of or hundreds of numbers that encapsulate the which means of the knowledge. The mannequin assigns a singular vector to every chunk—form of like creating a singular index that a pc can perceive. This is named the indexing stage.

Querying Stage

- Querying: Whenever you wish to ask an LLM a query it might not have the reply to, you begin by giving it a immediate, reminiscent of “What’s the most recent growth in AI laws?”

- Retrieval: This immediate goes by means of an embedding mannequin and transforms right into a vector itself—it is prefer it’s getting its personal search phrases based mostly on its which means and never simply an identical matches to its key phrases. The system then makes use of this search time period to scour the vector database for probably the most related chunks associated to your query.

-png-2.png?width=2056&height=1334&name=rag-query-drawio%20(1)-png-2.png)

- Prepending the Context: Essentially the most related chunks are then served up as context. It’s just like handing over reference materials earlier than asking your query, besides we give the LLM a directive: “Utilizing this data, reply the next query.” Whereas the immediate to the LLM will get prolonged with quite a lot of this background data, you as a consumer don’t see any of this. The complexity is dealt with behind the scenes.

- Reply Era: Lastly, outfitted with this newfound data, the LLM generates a response that ties within the information it’s simply retrieved, answering your query in a manner that feels prefer it knew the reply all alongside.

Chunking methods

The precise chunking of the paperwork is considerably of an artwork in itself. GPT-3.5 has a most context size of 4,096 tokens, or about 3,000 phrases. These phrases symbolize the sum whole of what the mannequin can deal with—if we create a immediate with a context 3,000 phrases lengthy, the mannequin is not going to have sufficient room to generate a response. Realistically, we shouldn’t immediate with greater than about 2,000 phrases for GPT-3.5. This implies there’s a trade-off with chunk dimension that’s data-dependent.

With smaller chunk_size values, the textual content returned produces extra detailed chunks of textual content however dangers lacking data in the event that they’re situated far-off within the textual content. However, bigger chunk_size values usually tend to embody all vital data within the high chunks, guaranteeing higher response high quality, but when the knowledge is distributed all through the textual content, it is going to miss vital sections.

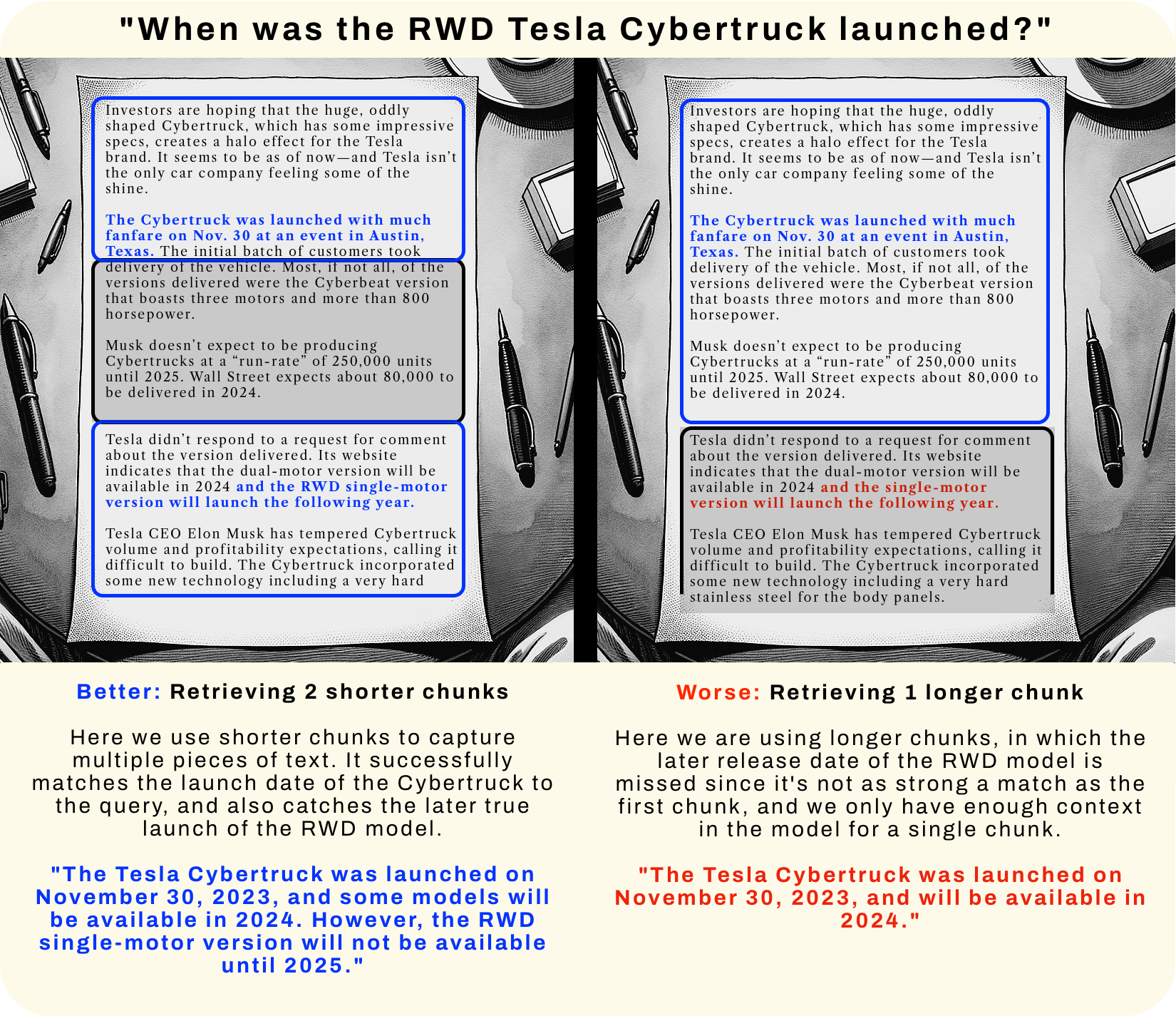

Let’s use some examples for example how this trade-off works, utilizing the latest Tesla Cybertruck launch occasion. Whereas some fashions of the truck shall be accessible in 2024, the most cost effective mannequin—with simply RWD—is not going to be accessible till 2025. Relying on the formatting and chunking of the textual content used for RAG, the mannequin’s response could or could not encounter this truth!

In these photos, blue signifies the place a match was discovered and the chunk was returned; the gray field signifies the chunk was not retrieved; and the crimson textual content signifies the place related textual content existed however was not retrieved. Let’s check out an instance the place shorter chunks succeed:

Exhibit A: Shorter chunks are higher… generally.

Exhibit A: Shorter chunks are higher… generally.

Within the picture above, on the left, the textual content is structured in order that the admission that the RWD shall be launched in 2025 is separated by a paragraph but additionally has related textual content that’s matched by the question. The tactic of retrieving two shorter chunks works higher as a result of it captures all the knowledge. On the correct, the retriever is barely retrieving a single chunk and subsequently doesn’t have the room to return the extra data, and the mannequin is given incorrect data.

Nevertheless, this isn’t at all times the case; generally longer chunks work higher when textual content that holds the true reply to the query doesn’t strongly match the question. Right here’s an instance the place longer chunks succeed:

Exhibit B: Longer chunks are higher… generally.

Exhibit B: Longer chunks are higher… generally.

Optimizing RAG

Enhancing the efficiency of a RAG system entails a number of methods that concentrate on optimizing completely different elements of the structure:

-

Improve Knowledge High quality (Rubbish in, Rubbish out): Guarantee the standard of the context supplied to the LLM is excessive. Clear up your supply information and guarantee your information pipeline maintains enough content material, reminiscent of capturing related data and eradicating pointless markup. Rigorously curate the info used for retrieval to make sure it is related, correct, and complete.

-

Tune Your Chunking Technique: As we noticed earlier, chunking actually issues! Experiment with completely different textual content chunk sizes to take care of enough context. The way in which you cut up your content material can considerably have an effect on the efficiency of your RAG system. Analyze how completely different splitting strategies impression the context’s usefulness and the LLM’s means to generate related responses.

-

Optimize System Prompts: Tremendous-tune the prompts used for the LLM to make sure they information the mannequin successfully in using the supplied context. Use suggestions from the LLM’s responses to iteratively enhance the immediate design.

-

Filter Vector Retailer Outcomes: Implement filters to refine the outcomes returned from the vector retailer, guaranteeing that they’re intently aligned with the question’s intent. Use metadata successfully to filter and prioritize probably the most related content material.

-

Experiment with Totally different Embedding Fashions: Attempt completely different embedding fashions to see which gives probably the most correct illustration of your information. Take into account fine-tuning your individual embedding fashions to raised seize domain-specific terminology and nuances.

-

Monitor and Handle Computational Assets: Concentrate on the computational calls for of your RAG setup, particularly when it comes to latency and processing energy. Search for methods to streamline the retrieval and processing steps to cut back latency and useful resource consumption.

-

Iterative Improvement and Testing: Repeatedly check the system with real-world queries and use the outcomes to refine the system. Incorporate suggestions from end-users to grasp efficiency in sensible eventualities.

-

Common Updates and Upkeep: Repeatedly replace the information base to maintain the knowledge present and related. Alter and retrain fashions as essential to adapt to new information and altering consumer necessities.

Superior RAG methods

Thus far, I’ve lined what’s referred to as “naive RAG.” Naive RAG usually begins with a primary corpus of textual content paperwork, the place texts are chunked, vectorized, and listed to create prompts for LLMs. This method, whereas basic, has been considerably superior by extra advanced methods. Developments in RAG structure have considerably developed from the fundamental or ‘naive’ approaches, incorporating extra refined strategies for enhancing the accuracy and relevance of generated responses. Aas you’ll be able to see by the record under, this can be a quick creating discipline and masking all these methods would necessitate its personal article:

- Enhanced Chunking and Vectorization: As an alternative of straightforward textual content chunking, superior RAG makes use of extra nuanced strategies for breaking down textual content into significant chunks, even perhaps summarizing them utilizing one other mannequin. These chunks are then vectorized utilizing transformer fashions. The method ensures that every chunk higher represents the semantic which means of the textual content, resulting in extra correct retrieval.

- Hierarchical Indexing: This entails creating a number of layers of indices, reminiscent of one for doc summaries and one other for detailed doc chunks. This hierarchical construction permits for extra environment friendly looking and retrieval, particularly in massive databases, by first filtering by means of summaries after which going deeper into related chunks.

- Context Enrichment: Superior RAG methods concentrate on retrieving smaller, extra related textual content chunks and enriching them with further context. This might contain increasing the context by including surrounding sentences or utilizing bigger guardian chunks that comprise the smaller, retrieved chunks.

- Fusion Retrieval or Hybrid Search: This method combines conventional keyword-based search strategies with fashionable semantic search methods. By integrating completely different algorithms, reminiscent of tf-idf (time period frequency–inverse doc frequency) or BM25 with vector-based search, RAG methods can leverage each semantic relevance and key phrase matching, resulting in extra complete search outcomes.

- Question Transformations and Routing: Superior RAG methods use LLMs to interrupt down advanced consumer queries into easier sub-queries. This enhances the retrieval course of by aligning the search extra intently with the consumer’s intent. Question routing entails decision-making about the very best method to deal with a question, reminiscent of summarizing data, performing an in depth search, or utilizing a mixture of strategies.

- Brokers in RAG: This entails utilizing brokers (smaller LLMs or algorithms) which might be assigned particular duties throughout the RAG framework. These brokers can deal with duties like doc summarization, detailed question answering, and even interacting with different brokers to synthesize a complete response.

- Response Synthesis: In superior RAG methods, the method of producing responses based mostly on retrieved context is extra intricate. It might contain iterative refinement of solutions, summarizing context to suit inside LLM limits, or producing a number of responses based mostly on completely different context chunks for a extra rounded reply.

- LLM and Encoder Tremendous-Tuning: Tailoring the LLM and the Encoder (accountable for context retrieval high quality) for particular datasets or purposes can tremendously improve the efficiency of RAG methods. This fine-tuning course of adjusts these fashions to be more practical in understanding and using the context supplied for response era.

Placing all of it collectively

RAG is a extremely efficient methodology for enhancing LLMs resulting from its means to combine real-time, exterior data, addressing the inherent limitations of static coaching datasets. This integration ensures that the responses generated are each present and related, a big development over conventional LLMs. RAG additionally mitigates the problem of hallucinations, the place LLMs generate believable however incorrect data, by supplementing their information base with correct, exterior information. The accuracy and relevance of responses are considerably enhanced, particularly for queries that demand up-to-date information or domain-specific experience.

Moreover, RAG is customizable and scalable, making it adaptable to a variety of purposes. It presents a extra resource-efficient method than constantly retraining fashions, because it dynamically retrieves data as wanted. This effectivity, mixed with the system’s means to constantly incorporate new data sources, ensures ongoing relevance and effectiveness. For end-users, this interprets to a extra informative and satisfying interplay expertise, as they obtain responses that aren’t solely related but additionally mirror the most recent data. RAG’s means to dynamically enrich LLMs with up to date and exact data makes it a strong and forward-looking method within the discipline of synthetic intelligence and pure language processing.