In recent years, large language models (LLMs) have made remarkable strides in their ability to understand and generate human-like text. These models, such as OpenAI’s GPT and Anthropic’s Claude, have demonstrated impressive performance on a wide range of natural language processing tasks. However, when it comes to complex reasoning tasks that require multiple steps of logical thinking, traditional prompting methods often fall short. This is where Chain-of-Thought (CoT) prompting comes into play, offering a powerful prompt engineering technique to improve the reasoning capabilities of large language models.

Key Takeaways

- CoT prompting enhances reasoning capabilities by producing intermediate steps.

- It breaks down complex problems into smaller, manageable sub-problems.

- Benefits include improved performance, interpretability, and generalization.

- CoT prompting applies to arithmetic, commonsense, and symbolic reasoning.

- It has the potential to significantly impact AI across various domains.

Chain-of-Thought prompting is a technique that aims to enhance the performance of large language models on complex reasoning tasks by encouraging the model to generate intermediate reasoning steps. Unlike traditional prompting methods, which typically provide a single prompt and expect a direct answer, CoT prompting breaks down the reasoning process into a series of smaller, interconnected steps.

At its core, CoT prompting involves prompting the language model with a question or problem and then guiding it to generate a chain of thought: a sequence of intermediate reasoning steps that lead to the final answer. By explicitly modeling the reasoning process, CoT prompting enables the language model to tackle complex reasoning tasks more effectively.

One of the key advantages of CoT prompting is that it allows the language model to decompose a complex problem into more manageable sub-problems. By generating intermediate reasoning steps, the model can break down the overall reasoning task into smaller, more focused steps. This approach helps the model maintain coherence and reduces the chances of losing track of the reasoning process.

CoT prompting has shown promising results in improving the performance of large language models on a variety of complex reasoning tasks, including arithmetic reasoning, commonsense reasoning, and symbolic reasoning. By leveraging intermediate reasoning steps, CoT prompting enables language models to demonstrate a deeper understanding of the problem at hand and generate more accurate and coherent responses.

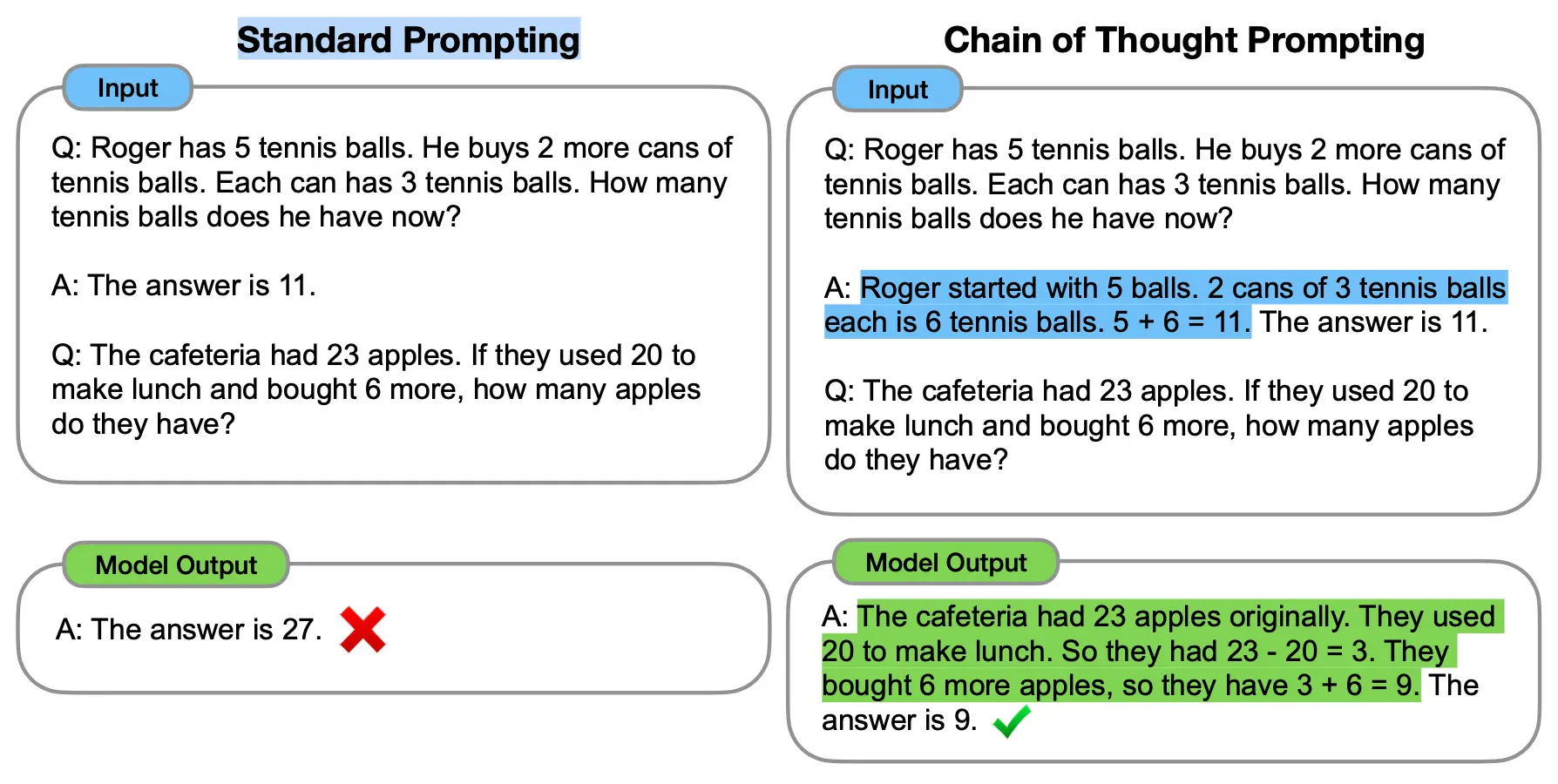

Standard vs. CoT prompting (Wei et al., Google Research, Brain Team)

CoT prompting works by generating a series of intermediate reasoning steps that guide the language model through the reasoning process. Instead of simply providing a prompt and expecting a direct answer, CoT prompting encourages the model to break down the problem into smaller, more manageable steps.

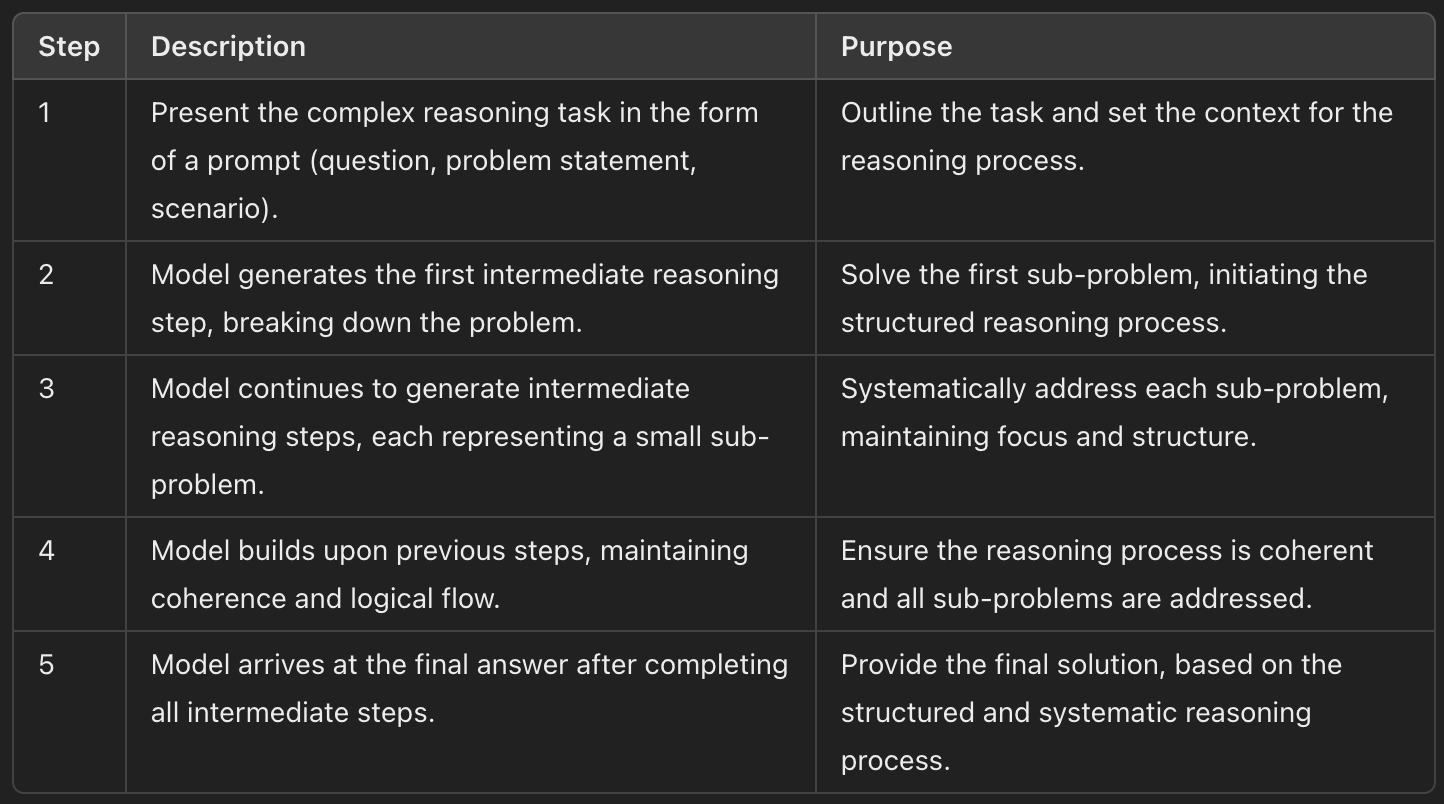

The process begins by presenting the language model with a prompt that outlines the complex reasoning task at hand. This prompt can be in the form of a question, a problem statement, or a scenario that requires logical thinking. Once the prompt is provided, the model generates a series of intermediate reasoning steps that lead to the final answer.

Each intermediate reasoning step in the chain of thought represents a small, focused sub-problem that the model needs to solve. By generating these steps, the model can approach the overall reasoning task in a more structured and systematic manner. The intermediate steps allow the model to maintain coherence and keep track of the reasoning process, reducing the chances of losing focus or producing irrelevant information.

As the model progresses through the chain of thought, it builds upon the previous reasoning steps to arrive at the final answer. Each step in the chain is connected to the previous and subsequent steps, forming a logical flow of reasoning. This step-by-step approach enables the model to tackle complex reasoning tasks more effectively, as it can focus on one sub-problem at a time while still maintaining the overall context.

The generation of intermediate reasoning steps in CoT prompting is typically achieved through carefully designed prompts and training techniques. Researchers and practitioners can use various methods to encourage the model to produce a chain of thought, such as providing examples of step-by-step reasoning, using special tokens to indicate the start and end of each reasoning step, or fine-tuning the model on datasets that demonstrate the desired reasoning process.
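The first of these methods, providing examples of step-by-step reasoning, can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the `Exemplar` class, the `build_cot_prompt` function, the `Q:`/`A:` layout, the "The answer is" phrasing, and the bookshelf question are all assumptions made for the example, and no model is actually called.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example: a question, its reasoning steps, and the answer."""
    question: str
    steps: list[str]
    answer: str


def build_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Assemble a few-shot prompt whose examples spell out their reasoning."""
    parts = []
    for ex in exemplars:
        reasoning = " ".join(ex.steps)
        parts.append(f"Q: {ex.question}\nA: {reasoning} The answer is {ex.answer}.")
    # The new question ends with an open "A:" so the model can continue
    # with its own reasoning in the same style as the exemplars.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical exemplar and question, used only for illustration.
exemplars = [
    Exemplar(
        question=(
            "If John has 5 apples and Mary has 3 times as many apples "
            "as John, how many apples does Mary have?"
        ),
        steps=["John has 5 apples.", "Mary has 3 times as many, so 5 × 3 = 15."],
        answer="15",
    )
]
prompt = build_cot_prompt(
    exemplars,
    "A shelf holds 4 boxes with 6 books each. How many books are on the shelf?",
)
print(prompt)
```

The resulting string would be sent to whichever model is in use. Because the worked example shows its reasoning before the answer, the model is nudged to do the same for the new question.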

5-Step CoT prompting process

By guiding the language model through the reasoning process using intermediate steps, CoT prompting enables the model to solve complex reasoning tasks more accurately and efficiently. The explicit modeling of the reasoning process also enhances the interpretability of the model’s outputs, as the generated chain of thought provides insights into how the model arrived at its final answer.

CoT prompting has been successfully applied to a variety of complex reasoning tasks, demonstrating its effectiveness in improving the performance of large language models.

Let’s explore a few examples of how CoT prompting can be used in different domains.

Arithmetic Reasoning

One of the most straightforward applications of CoT prompting is in arithmetic reasoning tasks. By generating intermediate reasoning steps, CoT prompting can help language models solve multi-step arithmetic problems more accurately.

For example, consider the following problem:

"If John has 5 apples and Mary has 3 times as many apples as John, how many apples does Mary have?"

Using CoT prompting, the language model can generate a chain of thought like this:

1. John has 5 apples.

2. Mary has 3 times as many apples as John.

3. To find the number of apples Mary has, we need to multiply John's apples by 3.

4. 5 apples × 3 = 15 apples

5. Therefore, Mary has 15 apples.
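Because the chain of thought ends in a concrete number, its conclusion can be checked mechanically. The sketch below is one possible way to do that, assuming the final step states the answer as its last integer; the `final_number` helper is a hypothetical name introduced for this example.

```python
import re

# The reasoning steps from the apple problem, one string per step.
chain_of_thought = [
    "John has 5 apples.",
    "Mary has 3 times as many apples as John.",
    "To find the number of apples Mary has, we need to multiply John's apples by 3.",
    "5 apples × 3 = 15 apples",
    "Therefore, Mary has 15 apples.",
]


def final_number(steps: list[str]) -> int:
    """Return the last integer mentioned in the final reasoning step."""
    numbers = re.findall(r"-?\d+", steps[-1])
    if not numbers:
        raise ValueError("no number found in the final step")
    return int(numbers[-1])


john_apples = 5
expected = john_apples * 3  # recompute the answer independently
answer = final_number(chain_of_thought)
print(answer, answer == expected)
```

A check like this only validates the final number, not the intermediate steps, so it complements reading the chain rather than replacing it.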

By breaking down the problem into smaller steps, CoT prompting enables the language model to reason through the arithmetic problem more effectively.

Commonsense Reasoning

CoT prompting has also shown promise in tackling commonsense reasoning tasks, which require a deep understanding of everyday knowledge and logical thinking.

For instance, consider the following question:

"If a person is allergic to dogs and their friend invites them over to a house with a dog, what should the person do?"

A language model using CoT prompting might generate the following chain of thought:

1. The person is allergic to dogs.

2. The friend's house has a dog.

3. Being around dogs can trigger the person's allergies.

4. To avoid an allergic reaction, the person should decline the invitation.

5. The person can suggest an alternative location to meet their friend.

By generating intermediate reasoning steps, CoT prompting allows the language model to demonstrate a clearer understanding of the situation and provide a logical solution.

Symbolic Reasoning

CoT prompting has also been applied to symbolic reasoning tasks, which involve manipulating and reasoning with abstract symbols and concepts.

For example, consider the following problem:

"If A implies B, and B implies C, does A imply C?"

Using CoT prompting, the language model can generate a chain of thought like this:

1. A implies B means that if A is true, then B must also be true.

2. B implies C means that if B is true, then C must also be true.

3. If A is true, then B is true (from step 1).

4. If B is true, then C is true (from step 2).

5. Therefore, if A is true, then C must also be true.

6. So, A does imply C.
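The conclusion of this chain can be confirmed independently with a truth table. The sketch below assumes that "implies" is read as material implication (false only when the premise is true and the conclusion is false); the function names are illustrative.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def transitivity_holds() -> bool:
    """Check ((A -> B) and (B -> C)) -> (A -> C) for all eight assignments."""
    return all(
        implies(implies(a, b) and implies(b, c), implies(a, c))
        for a, b, c in product([False, True], repeat=3)
    )


print(transitivity_holds())  # True
```

The exhaustive check and the model's step-by-step argument reach the same result, which is the kind of cross-check an explicit chain of thought makes possible.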

By generating intermediate reasoning steps, CoT prompting enables the language model to tackle abstract symbolic reasoning tasks more effectively.

These examples demonstrate the versatility and effectiveness of CoT prompting in improving the performance of large language models on complex reasoning tasks across different domains. By explicitly modeling the reasoning process through intermediate steps, CoT prompting enhances the model’s ability to tackle challenging problems and generate more accurate and coherent responses.

Benefits of Chain-of-Thought Prompting

Chain-of-Thought prompting offers several significant benefits in advancing the reasoning capabilities of large language models. Let’s explore some of the key advantages:

Improved Performance on Complex Reasoning Tasks

One of the primary benefits of CoT prompting is its ability to enhance the performance of language models on complex reasoning tasks. By generating intermediate reasoning steps, CoT prompting enables models to break down intricate problems into more manageable sub-problems. This step-by-step approach allows the model to maintain focus and coherence throughout the reasoning process, leading to more accurate and reliable results.

Studies such as Wei et al. have shown that large language models prompted with CoT often outperform the same models under standard prompting on a range of complex reasoning tasks. Explicitly modeling the reasoning process through intermediate steps has proven to be a powerful technique for improving a model’s ability to tackle challenging problems that require multi-step reasoning.

Enhanced Interpretability of the Reasoning Process

Another significant benefit of CoT prompting is the enhanced interpretability of the reasoning process. By generating a chain of thought, the language model provides a clear and transparent explanation of how it arrived at its final answer. This step-by-step breakdown of the reasoning process allows users to understand the model’s thought process and assess the validity of its conclusions.

The interpretability offered by CoT prompting is particularly valuable in domains where the reasoning process itself is of interest, such as in educational settings or in systems that require explainable AI. By providing insights into the model’s reasoning, CoT prompting facilitates trust and accountability in the use of large language models.
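In practice, reviewing a chain of thought often starts with separating the reasoning from the conclusion. The following sketch shows one way this might be done, assuming the response marks its conclusion with the phrase "The answer is"; that marker, the `split_response` helper, and the sample response are assumptions for illustration, not a fixed convention.

```python
def split_response(response: str, marker: str = "The answer is") -> tuple[list[str], str]:
    """Split a model response into reasoning sentences and a final answer."""
    reasoning, found, tail = response.rpartition(marker)
    if not found:
        raise ValueError(f"response does not contain {marker!r}")
    # Naive sentence split on periods; this would break on decimals
    # or abbreviations, so treat it as a starting point only.
    steps = [s.strip() + "." for s in reasoning.split(".") if s.strip()]
    return steps, tail.strip().rstrip(".")


# Hypothetical model output in the assumed format.
response = (
    "The person is allergic to dogs. The friend's house has a dog. "
    "The answer is decline the invitation."
)
steps, answer = split_response(response)
for i, step in enumerate(steps, start=1):
    print(f"{i}. {step}")
print("Final answer:", answer)
```

Listing the steps one per line makes it easier for a reviewer, for example a teacher or an auditor, to see where a conclusion came from and to spot the step at which the reasoning goes wrong.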

Potential for Generalization to Various Reasoning Tasks

CoT prompting has demonstrated its potential to generalize to a wide range of reasoning tasks. While the technique has been successfully applied to specific domains like arithmetic reasoning, commonsense reasoning, and symbolic reasoning, the underlying principles of CoT prompting can be extended to other types of complex reasoning tasks.

The ability to generate intermediate reasoning steps is a fundamental skill that can be leveraged across different problem domains. By fine-tuning language models on datasets that demonstrate the desired reasoning process, CoT prompting can be adapted to tackle novel reasoning tasks, expanding its applicability and impact.

Facilitating the Development of More Capable AI Systems

CoT prompting plays a crucial role in facilitating the development of more capable and intelligent AI systems. By improving the reasoning capabilities of large language models, CoT prompting contributes to the creation of AI systems that can tackle complex problems and exhibit higher levels of understanding.

As AI systems become more sophisticated and are deployed in various domains, the ability to perform complex reasoning tasks becomes increasingly important. CoT prompting provides a powerful tool for enhancing the reasoning skills of these systems, enabling them to tackle more challenging problems and make more informed decisions.

A Quick Summary

CoT prompting is a powerful technique that enhances the reasoning capabilities of large language models by generating intermediate reasoning steps. By breaking down complex problems into smaller, more manageable sub-problems, CoT prompting enables models to tackle challenging reasoning tasks more effectively. This approach improves performance, enhances interpretability, and facilitates the development of more capable AI systems.

FAQ

How does Chain-of-Thought prompting (CoT) work?

CoT prompting works by generating a series of intermediate reasoning steps that guide the language model through the reasoning process, breaking down complex problems into smaller, more manageable sub-problems.

What are the benefits of using chain-of-thought prompting?

The benefits of CoT prompting include improved performance on complex reasoning tasks, enhanced interpretability of the reasoning process, potential for generalization to various reasoning tasks, and facilitating the development of more capable AI systems.

What are some examples of tasks that can be improved with chain-of-thought prompting?

Some examples of tasks that can be improved with CoT prompting include arithmetic reasoning, commonsense reasoning, symbolic reasoning, and other complex reasoning tasks that require multiple steps of logical thinking.