Perhaps the most inspiring part of my role is traveling around the globe, meeting our customers from every sector, and seeing, learning, and collaborating with them as they build GenAI solutions and put them into production. It’s exciting to see our customers actively advancing their GenAI journey. But many in the market are not, and the gap is growing.

AI leaders are rightfully struggling to move beyond the prototype and experimental stage, and it’s our mission to change that. At DataRobot, we call this the “confidence gap”. It’s the trust, safety, and accuracy concerns surrounding GenAI that are holding teams back, and we’re committed to addressing them. It’s also the core focus of our Spring ’24 launch and its groundbreaking features.

This launch focuses on the three most significant hurdles to unlocking value with GenAI.

First, we’re bringing you enterprise-grade open-source LLM support and a suite of evaluation and testing metrics to help you and your teams confidently create production-grade AI applications. Second, to help you safeguard your reputation and prevent risk from AI apps running amok, we’re bringing you real-time intervention and moderation for all of your GenAI applications. And finally, to ensure your entire fleet of AI assets stays at peak performance, we’re bringing you first-of-its-kind multi-cloud and hybrid AI observability to help you fully govern and optimize all of your AI investments.

Confidently Create Production-Grade AI Applications

There is a lot of talk about fine-tuning an LLM. However, we have seen that the real value lies in fine-tuning your generative AI application. It’s tricky, though. Unlike predictive AI, which has thousands of easily accessible models and common data science metrics to benchmark and assess performance against, generative AI has had neither, until now.


With our Spring ’24 launch, you get enterprise-grade support for any open-source LLM. We’ve also introduced a comprehensive set of LLM evaluation, testing, and assessment metrics. Now you can fine-tune your generative AI application experience, ensuring its reliability and effectiveness.

Enterprise-Grade Open-Source LLM Hosting

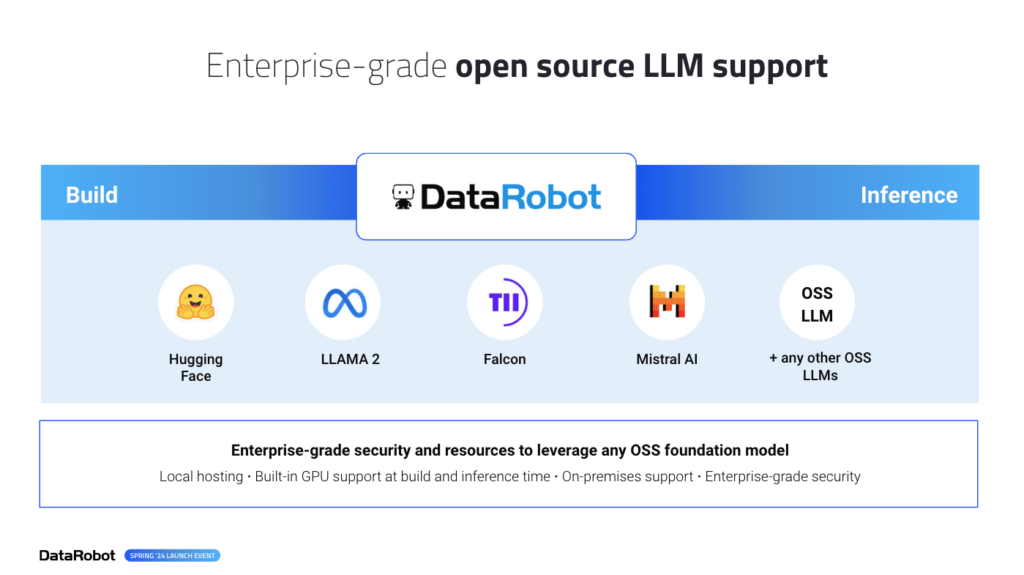

Privacy, control, and flexibility remain critical for every organization when it comes to LLMs. There has been no easy answer for AI leaders, who have been stuck choosing between three options: accepting vendor lock-in risk with leading API-based LLMs that could become sub-optimal and expensive in the near future, figuring out how to stand up and host their own open-source LLM, or custom-building, hosting, and maintaining their own LLM.

With our Spring Launch, you have access to the broadest selection of LLMs, allowing you to choose the one that aligns with your security requirements and use cases. Not only do you have ready-to-use access to LLMs from leading providers like Amazon, Google, and Microsoft, but you also have the flexibility to host your own custom LLMs. Additionally, our Spring ’24 Launch offers enterprise-level access to open-source LLMs, further expanding your options.

We have made hosting and using open-source foundation models like LLaMa, Falcon, and Mistral, as well as models from Hugging Face, easy with DataRobot’s built-in LLM security and resources. We have eliminated the complex, labor-intensive manual DevOps integrations this used to require and made it as easy as a drop-down selection.

LLM Evaluation, Testing, and Assessment Metrics

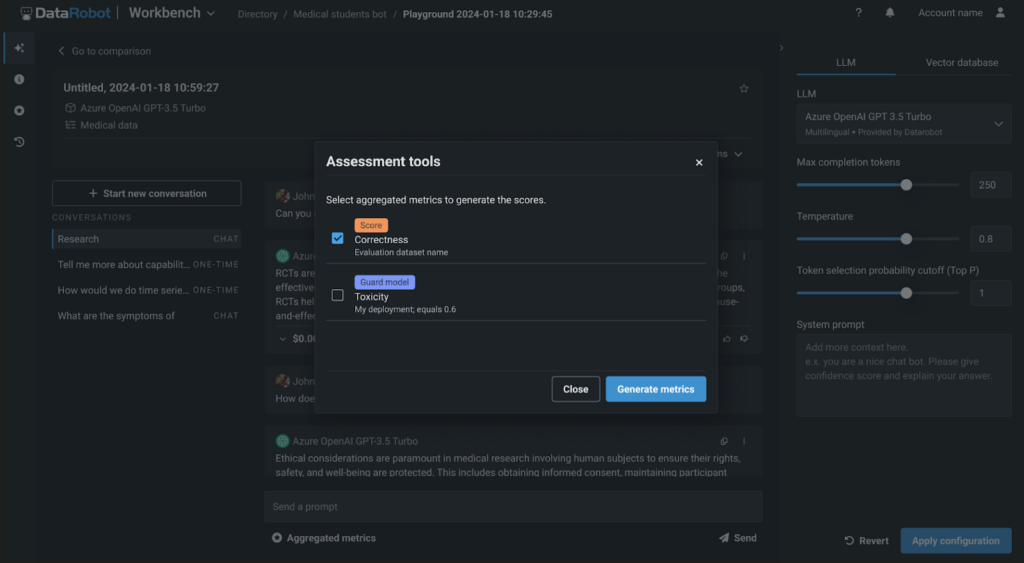

With DataRobot, you can freely choose and experiment across LLMs. We also give you advanced experimentation options, such as trying different chunking strategies, embedding methods, and vector databases. With our new LLM evaluation, testing, and assessment metrics, you and your teams now have a clear way to validate the quality of your GenAI application and the LLM’s performance across these experiments.

With our first-of-its-kind synthetic data generation for prompt-and-answer evaluation, you can quickly and effortlessly create thousands of question-and-answer pairs. This lets you easily see how well your RAG experiment performs and how faithfully it stays true to the content of your vector database.

We’re also giving you a comprehensive set of evaluation metrics. You can benchmark, compare performance, and rank your RAG experiments based on faithfulness, correctness, and other metrics to create high-quality, valuable GenAI applications.
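To make “ranking RAG experiments by faithfulness and correctness” more concrete, here is a minimal sketch of the general idea. It is not DataRobot’s implementation or API: the metric definitions are simple lexical proxies chosen for illustration (faithfulness as the share of answer tokens found in the retrieved context, correctness as SQuAD-style token F1 against a reference answer), and the names `EvalRecord`, `score_experiment`, `rank_experiments`, and the experiment labels are hypothetical. Production evaluators often use an LLM as a judge instead.

```python
# Hypothetical sketch: simple lexical proxies for two RAG evaluation metrics.
# These are NOT DataRobot's metrics; production systems often use an LLM judge.
import re
from collections import Counter
from dataclasses import dataclass
from statistics import mean


def tokens(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; deliberately simple."""
    return re.findall(r"[a-z0-9]+", text.lower())


def faithfulness(answer: str, context: str) -> float:
    """Share of answer tokens that also appear in the retrieved context."""
    ans = tokens(answer)
    if not ans:
        return 0.0
    ctx = set(tokens(context))
    return sum(t in ctx for t in ans) / len(ans)


def correctness(answer: str, reference: str) -> float:
    """Token-level F1 between the answer and a reference answer."""
    ans, ref = tokens(answer), tokens(reference)
    overlap = sum((Counter(ans) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(ans), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


@dataclass
class EvalRecord:
    answer: str     # what the RAG pipeline returned
    context: str    # chunks retrieved from the vector database
    reference: str  # expected answer, e.g. from a synthetic Q&A pair


def score_experiment(records: list[EvalRecord]) -> dict[str, float]:
    """Average each metric over one experiment's evaluation set."""
    return {
        "faithfulness": mean(faithfulness(r.answer, r.context) for r in records),
        "correctness": mean(correctness(r.answer, r.reference) for r in records),
    }


def rank_experiments(experiments: dict[str, list[EvalRecord]]) -> list[tuple[str, dict[str, float]]]:
    """Sort experiments best-first: faithfulness, then correctness as tie-breaker."""
    scored = {name: score_experiment(recs) for name, recs in experiments.items()}
    return sorted(
        scored.items(),
        key=lambda item: (item[1]["faithfulness"], item[1]["correctness"]),
        reverse=True,
    )


if __name__ == "__main__":
    # Two hypothetical experiments that differ only in chunk size.
    experiments = {
        "chunk_256": [EvalRecord("Paris is the capital of France.", "France's capital is Paris.", "Paris")],
        "chunk_1024": [EvalRecord("Lyon is the capital.", "France's capital is Paris.", "Paris")],
    }
    for name, scores in rank_experiments(experiments):
        print(name, scores)
```

Ranking on a tuple keeps the ordering rule explicit; in practice you would likely weight several metrics and evaluate over thousands of synthetic question-and-answer pairs rather than one.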

And with DataRobot, it’s always about choice. You can do all of this in low code or in our fully hosted notebooks, which also include a rich set of new codespace functionality that eliminates infrastructure and resource management and makes collaboration easy.

Observe and Intervene in Real Time

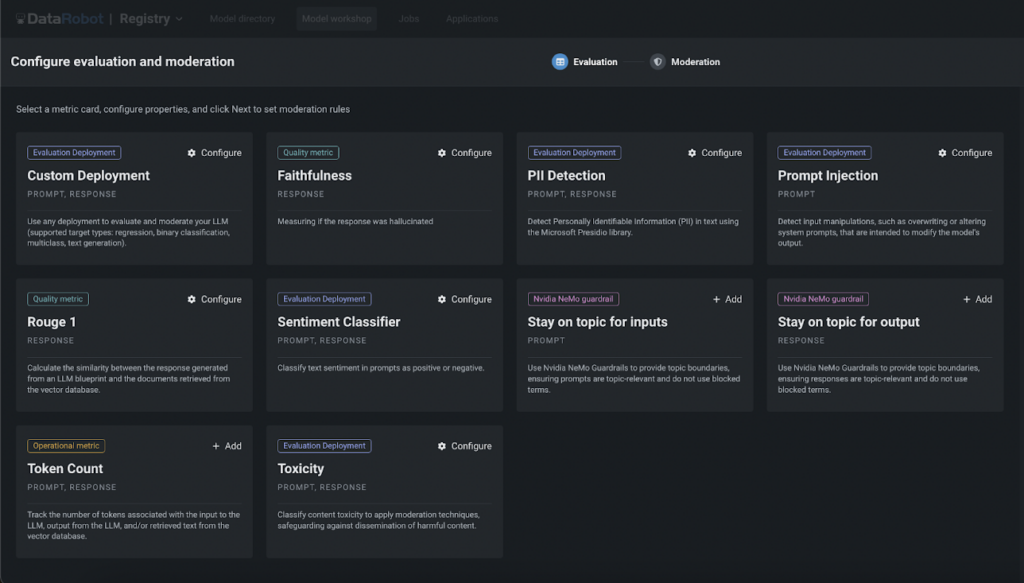

The biggest concern I hear from AI leaders about generative AI is reputational risk. There are already plenty of news stories about GenAI applications exposing private data, and about courts holding companies accountable for the promises their GenAI applications made. In our Spring ’24 Launch, we’ve tackled this problem head-on.

With our rich library of customizable guards, workflows, and notifications, you can build a multi-layered defense that detects and prevents unexpected or unwanted behaviors across your entire fleet of GenAI applications in real time.

Our library of pre-built guards can be fully customized to prevent prompt injections and toxicity, detect PII, mitigate hallucinations, and more. Our moderation guards and real-time intervention can be applied to all of your generative AI applications, even those built outside of DataRobot, giving you peace of mind that your AI assets will perform as intended.
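The “multi-layered defense” idea, with guards on the way in and on the way out of any LLM, can be sketched in a few lines. This is a hypothetical illustration, not DataRobot’s guard library or API: `GuardResult`, `injection_guard`, `pii_guard`, `run_guards`, and `moderated_call` are invented names, and the regular expressions are deliberately naive stand-ins for the trained classifiers a real deployment would use. The wrapper accepts any `str -> str` callable, which mirrors the point that moderation can sit in front of applications built anywhere.

```python
# Hypothetical sketch of layered input/output guards around an arbitrary LLM.
# NOT DataRobot's API; the regexes are naive placeholders for real classifiers.
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GuardResult:
    text: str
    blocked: bool = False
    reasons: list[str] = field(default_factory=list)


Guard = Callable[[GuardResult], GuardResult]

INJECTION_PATTERN = re.compile(
    r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE
)
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def injection_guard(result: GuardResult) -> GuardResult:
    """Block text that matches a naive prompt-injection phrase."""
    if INJECTION_PATTERN.search(result.text):
        result.blocked = True
        result.reasons.append("prompt_injection")
    return result


def pii_guard(result: GuardResult) -> GuardResult:
    """Redact e-mail addresses and SSN-shaped numbers instead of blocking."""
    redacted, n_email = EMAIL_PATTERN.subn("[EMAIL]", result.text)
    redacted, n_ssn = SSN_PATTERN.subn("[SSN]", redacted)
    if n_email or n_ssn:
        result.reasons.append("pii_redacted")
    result.text = redacted
    return result


def run_guards(text: str, guards: list[Guard]) -> GuardResult:
    """Apply guards in order and stop at the first one that blocks."""
    result = GuardResult(text)
    for guard in guards:
        result = guard(result)
        if result.blocked:
            break
    return result


def moderated_call(prompt: str, llm: Callable[[str], str]) -> str:
    """Wrap any LLM callable with an input guard layer and an output guard layer."""
    pre = run_guards(prompt, [injection_guard, pii_guard])
    if pre.blocked:
        return "Request blocked by moderation policy."
    response = llm(pre.text)
    post = run_guards(response, [pii_guard])
    return post.text
```

For example, `moderated_call("hi", lambda p: "Contact bob@corp.io")` returns `"Contact [EMAIL]"`, because the output layer redacts the address even though the prompt passed. Blocking on injection but redacting PII is one possible policy choice; each layer can be tuned independently.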

Govern and Optimize Infrastructure Investments

Generative AI has caused an explosion of new AI tools and projects, and of teams working on them. I often hear about “shadow GenAI” projects and how AI leaders and IT teams struggle to rein it all in. They find it hard to get a comprehensive view, and complex multi-cloud and hybrid environments make it even harder. This lack of AI observability exposes organizations to AI misuse and security risks.

Cross-Environment AI Observability

We’re here to help you thrive in this new normal, where AI exists in multiple environments and locations. With our Spring ’24 Launch, we’re bringing you first-of-its-kind, cross-environment AI observability, giving you unified security, governance, and visibility across clouds and on-premise environments.

Your teams get to work in the tools and systems they want; AI leaders get the unified governance, security, and observability they need to protect their organizations.

Our customizable alerts and notification policies integrate with the tools of your choice, from ITSM platforms to Jira and Slack, to help you reduce time-to-detection (TTD) and time-to-resolution (TTR).

Insights and visuals help your teams see, diagnose, and troubleshoot issues with your AI assets: trace prompts to the response and to the content in your vector database with ease, see generative AI topic drift with multi-language diagnostics, and more.

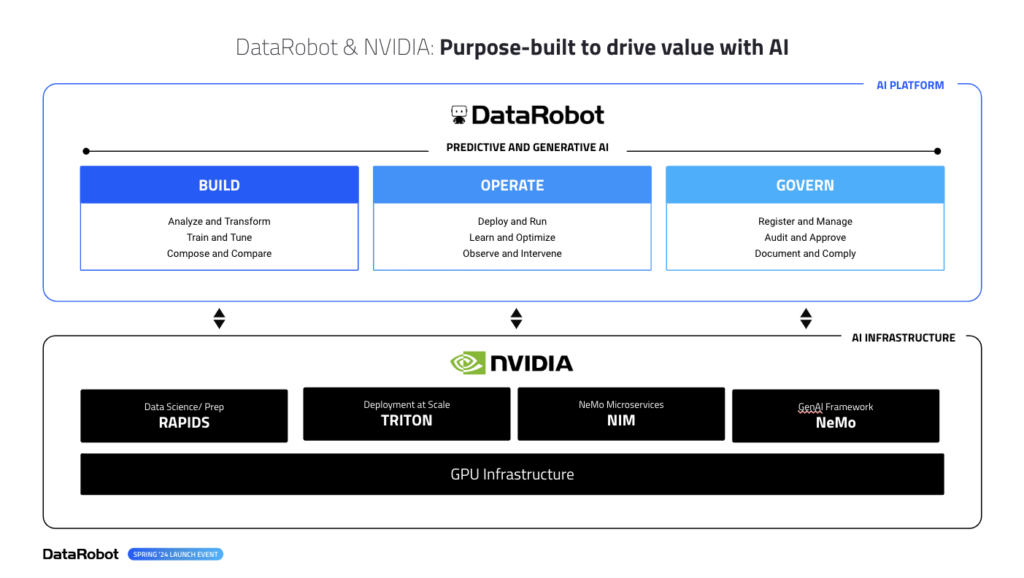

NVIDIA and GPU Integrations

And if you’ve invested in NVIDIA, we’re the first and only AI platform with deep integrations across the entire surface area of NVIDIA’s AI infrastructure, from NIMs, to NeMoGuard models, to their new Triton inference services, all ready for you at the click of a button. No more managing separate installs or integration points: DataRobot makes it easy to put your GPU investments to work.

Our Spring ’24 launch is packed with exciting features, including new GenAI and predictive capabilities and enhancements in time series forecasting, multimodal modeling, and data wrangling.

All of these new features are available in cloud, on-premise, and hybrid environments. So whether you’re an AI leader or part of an AI team, our Spring ’24 launch sets the foundation for your success.

This is just the beginning of the innovations we’re bringing you. We have much more in store for you in the months ahead, so stay tuned: we’re hard at work on the next wave.

Get Started

Learn more about DataRobot’s GenAI solutions and accelerate your journey today.

- Join our Catalyst program to accelerate your AI adoption and unlock the full potential of GenAI for your organization.

- See DataRobot’s GenAI solutions in action by scheduling a demo tailored to your specific needs and use cases.

- Explore our new features, and connect with your dedicated DataRobot Applied AI Expert to get started with them.

About the author

Venky Veeraraghavan leads the Product Team at DataRobot, where he drives the definition and delivery of DataRobot’s AI platform. Venky has over twenty-five years of experience as a product leader, with previous roles at Microsoft and the early-stage startup Trilogy. He has spent over a decade building hyperscale big data and AI platforms for some of the largest and most complex organizations in the world. He lives, hikes, and runs in Seattle, WA, with his family.