Last year, we launched foundation model support in Databricks Model Serving to enable enterprises to build secure and custom GenAI apps on a unified data and AI platform. Since then, hundreds of organizations have used Model Serving to deploy GenAI apps customized to their unique datasets.

Today, we’re excited to announce new updates that make it easier to experiment with, customize, and deploy GenAI apps. These updates include access to new large language models (LLMs), easier discovery, simpler customization options, and improved monitoring. Together, these improvements help you develop and scale GenAI apps more quickly and at a lower cost.

“Databricks Model Serving is accelerating our AI-driven initiatives by making it easy to securely access and manage multiple SaaS and open models, including those hosted on or outside Databricks. Its centralized approach simplifies security and cost management, allowing our data teams to focus more on innovation and less on administrative overhead.” – Greg Rokita, VP, Technology at Edmunds.com

Access New Open and Proprietary Models Through a Unified Interface

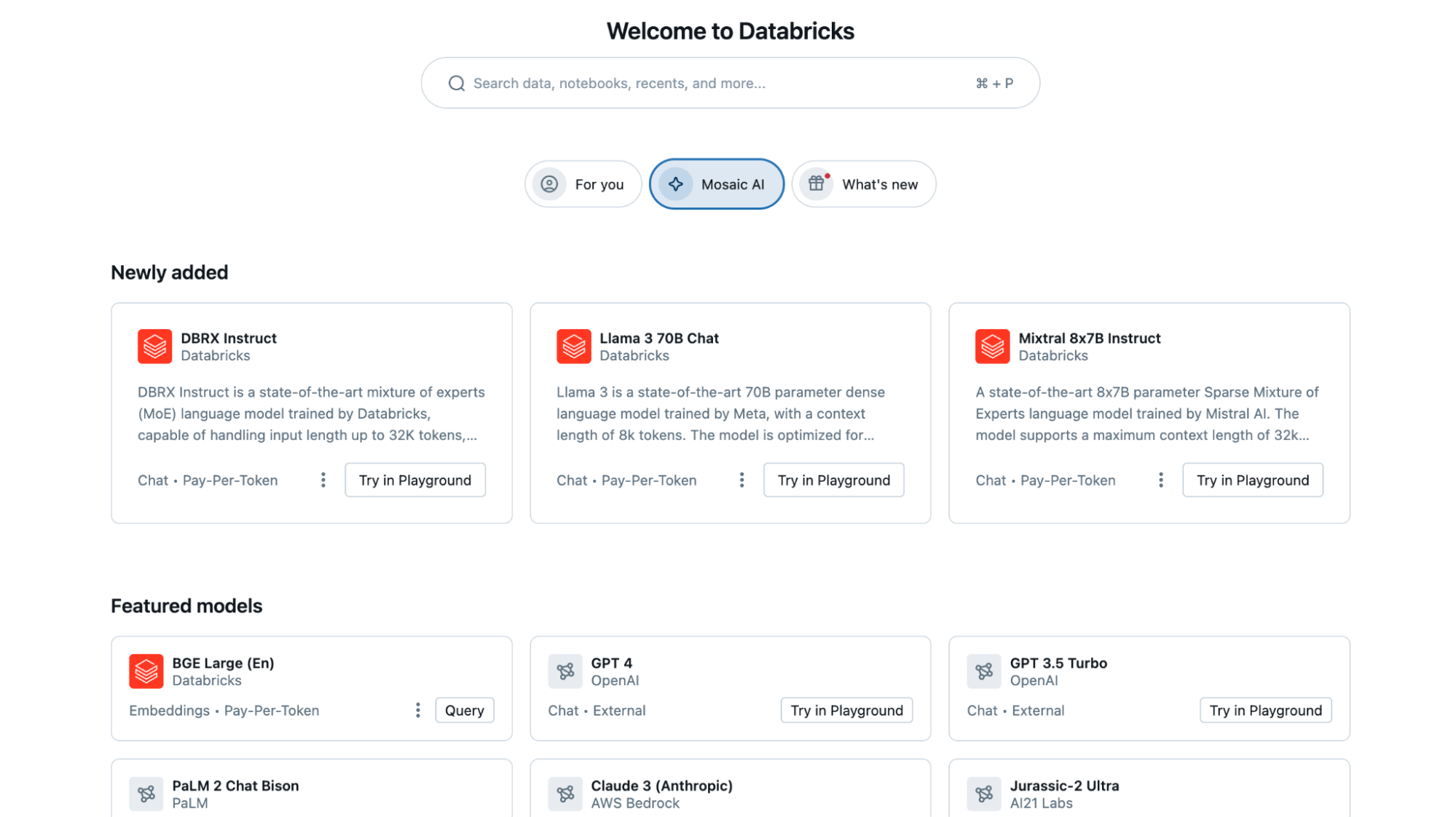

We’re continually adding new open-source and proprietary models to Model Serving, giving you access to a broader range of options via a unified interface.

- New Open Source Models: Recent additions, such as DBRX and Llama-3, set a new benchmark for open language models, delivering capabilities that rival the most advanced closed model offerings. These models are instantly accessible on Databricks via Foundation Model APIs with optimized GPU inference, keeping your data secure within Databricks’ security perimeter.

- New External Models Support: The External Models feature now supports the latest proprietary state-of-the-art models, including Gemini Pro and Claude 3. External models allow you to securely manage third-party model provider credentials and provide rate limiting and permission support.

All models can be accessed via a unified OpenAI-compatible API and SQL interface, making it easy to compare, experiment with, and select the best model for your needs.

    from openai import OpenAI

    client = OpenAI(
        api_key='DATABRICKS_TOKEN',  # placeholder: use your own Databricks access token
        base_url='https://<YOUR WORKSPACE ID>.cloud.databricks.com/serving-endpoints'
    )

    chat_completion = client.chat.completions.create(
        messages=[
            {
                "role": "user",
                "content": "Tell me about Large Language Models"
            }
        ],
        # Specify the model, either external or hosted on Databricks. For instance,
        # replace 'claude-3-sonnet' with 'databricks-dbrx-instruct'
        # to use a Databricks-hosted model.
        model='claude-3-sonnet'
    )

    # Print the text of the first returned completion.
    print(chat_completion.choices[0].message.content)

“At Experian, we’re developing Gen AI models with the lowest rates of hallucination while preserving core functionality. Utilizing the Mixtral 8x7b model on Databricks has facilitated rapid prototyping, revealing its superior performance and quick response times.” – James Lin, Head of AI/ML Innovation at Experian.
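Because every model sits behind the same OpenAI-compatible interface, comparing candidates can be as simple as looping over endpoint names. The following is a minimal sketch rather than an official utility: `compare_models` is a hypothetical helper name, and it assumes `client` is any object exposing the OpenAI-style `chat.completions.create` call used in the example above.

```python
from typing import Any, Dict, List


def compare_models(client: Any, models: List[str], prompt: str) -> Dict[str, str]:
    """Send the same prompt to each endpoint and collect the replies by model name.

    `client` is assumed to be an OpenAI-compatible client, for example an
    `OpenAI` instance whose base_url points at a workspace's serving endpoints.
    """
    replies: Dict[str, str] = {}
    for model in models:
        completion = client.chat.completions.create(
            messages=[{"role": "user", "content": prompt}],
            model=model,
        )
        # Keep only the text of the first choice for a side-by-side comparison.
        replies[model] = completion.choices[0].message.content
    return replies
```

With a configured client, `compare_models(client, ["claude-3-sonnet", "databricks-dbrx-instruct"], "Tell me about Large Language Models")` would return one reply per endpoint, which makes it straightforward to review the answers side by side.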

Discover Models and Endpoints Through the New Discovery Page and Search Experience

As we continue to expand the list of models on Databricks, many of you have shared that finding them has become harder. We’re excited to introduce new capabilities to simplify model discovery:

- Personalized Homepage: The new homepage personalizes your Databricks experience based on your common actions and workloads. The ‘Mosaic AI’ tab on the Databricks homepage showcases state-of-the-art models for easy discovery. To enable this Preview feature, go to your account profile and navigate to Settings > Developer > Databricks Homepage.

- Universal Search: The search bar now supports models and endpoints, providing a faster way to find existing models and endpoints, reducing discovery time, and facilitating model reuse.

Build Compound AI Systems with Chain Apps and Function Calling

Most GenAI applications require combining LLMs or integrating them with external systems. With Databricks Model Serving, you can deploy custom orchestration logic using LangChain or arbitrary Python code. This allows you to manage and deploy an end-to-end application entirely on Databricks. We’re introducing updates to make compound systems even easier to build on the platform.

- Vector Search (now GA): Databricks Vector Search seamlessly integrates with Model Serving, providing accurate and contextually relevant responses. Now generally available, it’s ready for large-scale production deployments.

- Function Calling (Preview): Currently in private preview, function calling allows LLMs to generate structured responses more reliably. This capability lets you use an LLM as an agent that can call functions by outputting JSON objects and mapping arguments. Common function calling examples include calling external services like DBSQL, translating natural language into API calls, and extracting structured data from text. Join the preview.

- Guardrails (Preview): In private preview, guardrails provide request and response filtering for harmful or sensitive content. Join the preview.

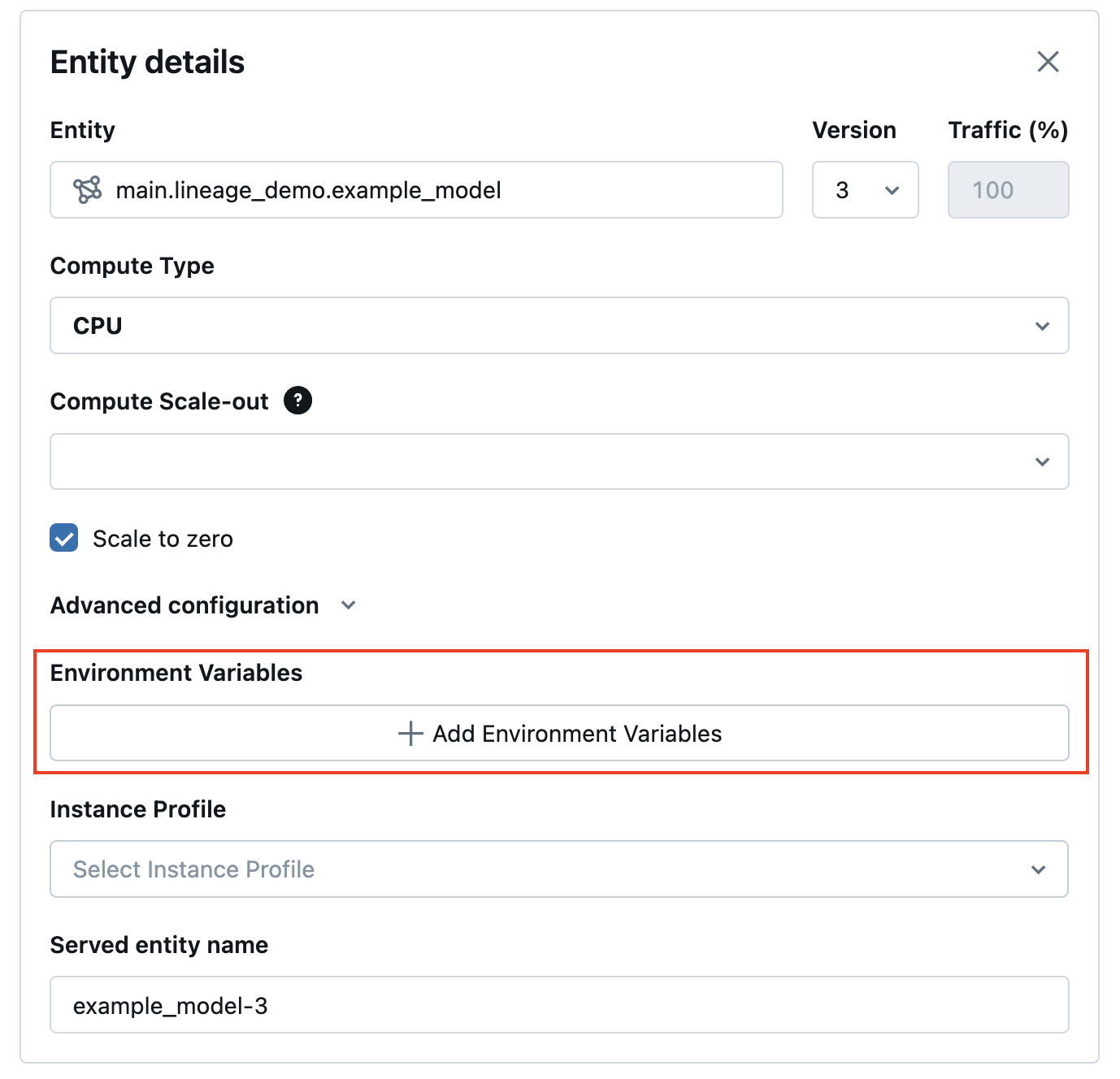

- Secrets UI: The new Secrets UI streamlines the addition of environment variables and secrets to endpoints, facilitating seamless communication with external systems (an API is also available).
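To make the function calling idea above more concrete, here is a minimal sketch of the application side: the model emits a JSON object naming a function and its arguments, and the app maps that onto a real Python call. Everything in it is illustrative. `get_order_status`, the `TOOLS` registry, `dispatch_function_call`, and the hand-written `llm_output` string are hypothetical and do not come from the Databricks preview API.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool; a real app might query DBSQL or call an external service here.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} is in transit"


# Registry mapping the function names the model may emit to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def dispatch_function_call(llm_output: str) -> Any:
    """Parse the model's JSON output and call the matching registered function."""
    call = json.loads(llm_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown function: {name}")
    arguments = call.get("arguments", {})
    # OpenAI-style APIs return arguments as a JSON-encoded string; accept both forms.
    if isinstance(arguments, str):
        arguments = json.loads(arguments)
    return TOOLS[name](**arguments)


# Hand-written stand-in for what a model might emit (not a real model response).
llm_output = '{"name": "get_order_status", "arguments": {"order_id": "A-123"}}'
print(dispatch_function_call(llm_output))  # prints: Order A-123 is in transit
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed, and unknown names fail loudly instead of being executed.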

More updates are coming soon, including streaming support for LangChain and PyFunc models and playground integration to further simplify building production-grade compound AI apps on Databricks.

“By bringing model serving and monitoring together, we can ensure deployed models are always up-to-date and delivering accurate results. This streamlined approach allows us to focus on maximizing the business impact of AI without worrying about availability and operational concerns.” – Don Scott, VP Product Development at Hitachi Solutions

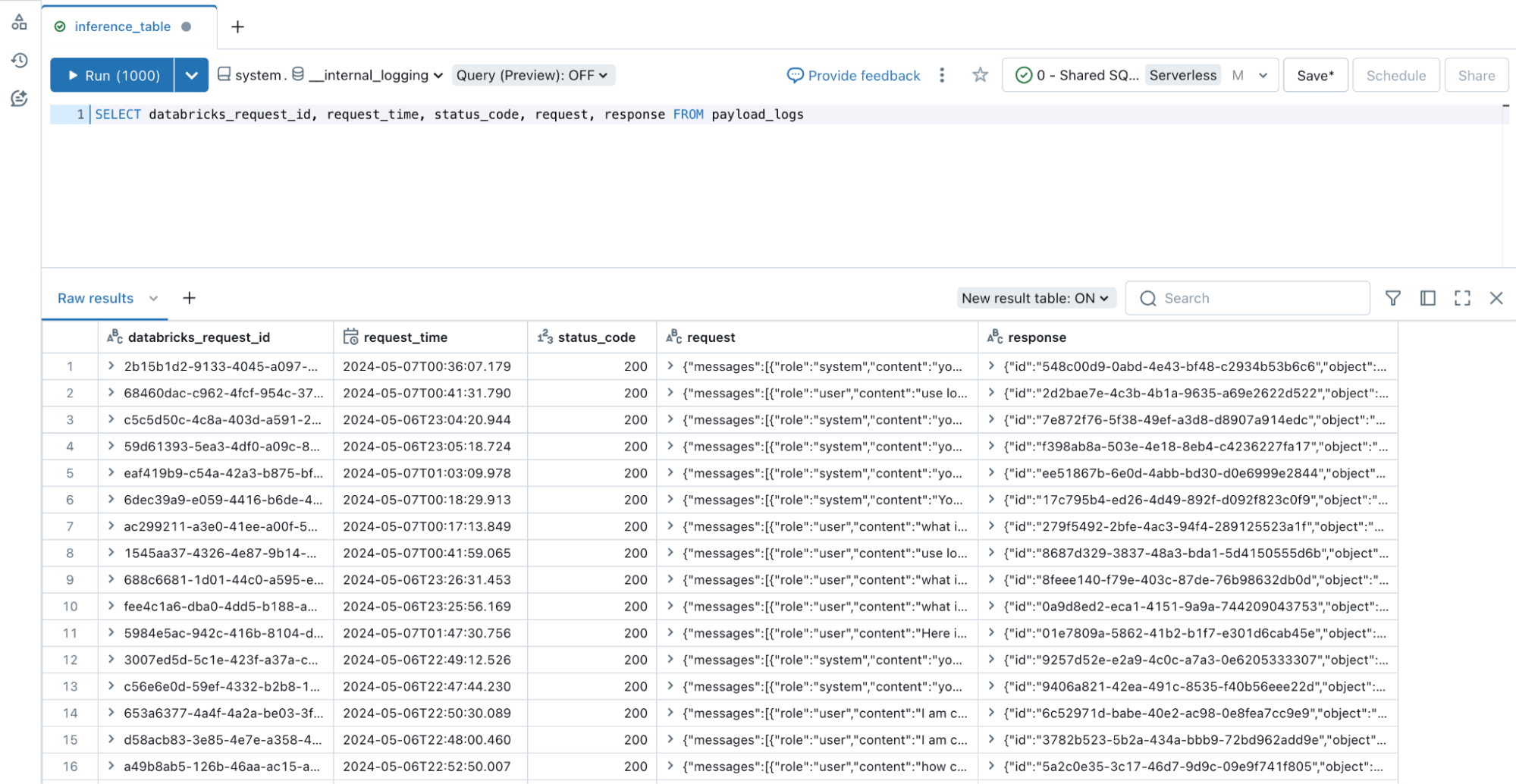

Monitor All Types of Endpoints with Inference Tables

Monitoring LLMs and other AI models is just as important as deploying them. We’re excited to announce that Inference Tables now support all endpoint types, including GPU-deployed and externally hosted models. Inference Tables continuously capture inputs and predictions from Databricks Model Serving endpoints and log them into a Unity Catalog Delta table. You can then use existing data tools to evaluate, monitor, and fine-tune your AI models.

To join the preview, go to your Account > Previews > Enable Inference Tables For External Models And Foundation Models.
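Once requests and responses land in a Delta table, basic monitoring is ordinary data processing. The sketch below summarizes a batch of logged rows in plain Python. It is an illustration under stated assumptions: the column names `status_code` and `execution_time_ms` are assumed here and should be checked against your own table's schema, `summarize_inference_logs` is a hypothetical helper, and the sample rows are hand-written, not real logs.

```python
from statistics import mean
from typing import Any, Dict, Iterable


def summarize_inference_logs(rows: Iterable[Dict[str, Any]]) -> Dict[str, float]:
    """Compute request count, error rate, and average latency for logged requests.

    Each row is assumed to carry `status_code` and `execution_time_ms` fields.
    """
    rows = list(rows)
    if not rows:
        return {"requests": 0, "error_rate": 0.0, "avg_latency_ms": 0.0}
    errors = [r for r in rows if r["status_code"] != 200]
    return {
        "requests": len(rows),
        "error_rate": len(errors) / len(rows),
        "avg_latency_ms": mean(r["execution_time_ms"] for r in rows),
    }


# Hand-written sample rows standing in for records read from an inference table.
sample_rows = [
    {"status_code": 200, "execution_time_ms": 120},
    {"status_code": 200, "execution_time_ms": 340},
    {"status_code": 429, "execution_time_ms": 95},
]
summary = summarize_inference_logs(sample_rows)
print(summary)
```

In a Databricks notebook, the rows could instead come from the table itself, for example by collecting a Spark DataFrame and converting each row with `asDict()`. For large tables, the same aggregation is better expressed directly in Spark or SQL.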

Get Started Today!

Visit the Databricks AI Playground to try Foundation Models directly from your workspace. For more information, please refer to the following resources: