In the ever-evolving landscape of artificial intelligence, Apple has been quietly pioneering a groundbreaking approach that could redefine how we interact with our iPhones. ReALM, or Reference Resolution as Language Modeling, is an AI model that promises to bring a new level of contextual awareness and seamless assistance.

As the tech world buzzes with excitement over OpenAI’s GPT-4 and other large language models (LLMs), Apple’s ReALM represents a shift in thinking – a move away from relying solely on cloud-based AI to a more personalized, on-device approach. The goal? To create an intelligent assistant that truly understands you, your world, and the intricate tapestry of your daily digital interactions.

At the heart of ReALM lies the ability to resolve references – those ambiguous pronouns like “it,” “they,” or “that” that humans navigate with ease thanks to contextual cues. For AI assistants, however, this has long been a stumbling block, leading to frustrating misunderstandings and a disjointed user experience.

Imagine a scenario where you ask Siri to “find me a healthy recipe based on what’s in my fridge, but hold the mushrooms – I hate those.” With ReALM, your iPhone would not only understand the references to on-screen information (the contents of your fridge) but also remember your personal preferences (dislike of mushrooms) and the broader context of finding a recipe tailored to those parameters.

This level of contextual awareness is a quantum leap from the keyword-matching approach of most current AI assistants. By training LLMs to seamlessly resolve references across three key domains – conversational, on-screen, and background – ReALM aims to create a truly intelligent digital companion that feels less like a robotic voice assistant and more like an extension of your own thought processes.

The Conversational Domain: Remembering What Came Before

In conversational AI, ReALM tackles a long-standing challenge: maintaining coherence and memory across multiple turns of dialogue. With its ability to resolve references within an ongoing conversation, ReALM could finally deliver on the promise of a natural, back-and-forth interaction with your digital assistant.

Imagine asking Siri to “remind me to book tickets for my vacation when I get paid on Friday.” With ReALM, Siri would not only understand the context of your vacation plans (potentially gleaned from a previous conversation or on-screen information) but also have the awareness to connect “getting paid” to your regular payday routine.

This level of conversational intelligence feels like a true leap forward, enabling seamless multi-turn dialogues without the frustration of constantly re-explaining context or repeating yourself.

The On-Screen Domain: Giving Your Assistant Eyes

Perhaps the most groundbreaking aspect of ReALM, however, lies in its ability to resolve references to on-screen entities – a crucial step towards creating a truly hands-free, voice-driven user experience.

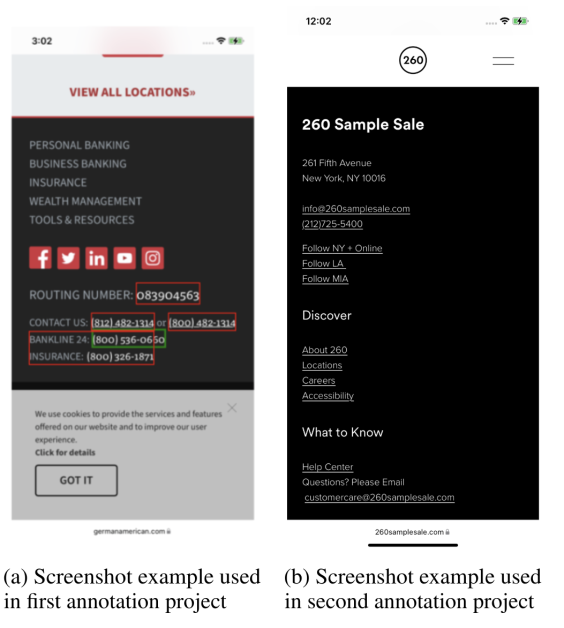

Apple’s research paper delves into a novel technique for encoding visual information from your device’s screen into a format that LLMs can process. By essentially reconstructing the layout of your screen in a text-based representation, ReALM can “see” and understand the spatial relationships between various on-screen elements.

Consider a scenario where you are looking at a list of restaurants and ask Siri for “directions to the one on Main Street.” With ReALM, your iPhone would not only comprehend the reference to a specific location but also tie it to the relevant on-screen entity – the restaurant listing matching that description.

This level of visual understanding opens up a world of possibilities, from seamlessly acting on references within apps and websites to integrating with future AR interfaces and even perceiving and responding to real-world objects and environments through your device’s camera.

The research paper on Apple’s ReALM model delves into the intricate details of how the system encodes on-screen entities and resolves references across various contexts. Here is a simplified explanation of the algorithms and examples provided in the paper:

- Encoding On-Screen Entities: The paper explores several strategies to encode on-screen elements in a textual format that can be processed by a Large Language Model (LLM). One approach involves clustering surrounding objects based on their spatial proximity and generating prompts that include these clustered objects. However, this method can lead to excessively long prompts as the number of entities increases.

The final approach adopted by the researchers is to parse the screen in a top-to-bottom, left-to-right order, representing the layout in a textual format. This is achieved through Algorithm 2, which sorts the on-screen objects based on their center coordinates, determines vertical levels by grouping objects within a certain margin, and constructs the on-screen parse by concatenating these levels with tabs separating objects on the same line.

By injecting the relevant entities (phone numbers in this case) into the textual representation, the LLM can understand the on-screen context and resolve references accordingly.

- Examples of Reference Resolution: The paper provides several examples to illustrate the capabilities of the ReALM model in resolving references across different contexts:

a. Conversational References: For a request like “Siri, find me a healthy recipe based on what’s in my fridge, but hold the mushrooms – I hate those,” ReALM can understand the on-screen context (contents of the fridge), the conversational context (finding a recipe), and the user’s preferences (dislike of mushrooms).

b. Background References: In the example “Siri, play that song that was playing at the supermarket earlier,” ReALM can potentially capture and identify ambient audio snippets to resolve the reference to the specific song.

c. On-Screen References: For a request like “Siri, remind me to book tickets for the vacation when I get my salary on Friday,” ReALM can combine information from the user’s routines (payday), on-screen conversations or websites (vacation plans), and the calendar to understand and act on the request.

These examples demonstrate ReALM’s ability to resolve references across conversational, on-screen, and background contexts, enabling a more natural and seamless interaction with intelligent assistants.
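To make the on-screen encoding step more concrete, here is a minimal Python sketch of the top-to-bottom, left-to-right parse described earlier: sort elements by their center coordinates, group those within a vertical margin into the same level, and join each level with tabs. It is an illustration under stated assumptions, not the paper's implementation. The `ScreenElement` type, the `parse_screen` function, the default `margin` value, and the `[n]` tag used to mark injected entities are all hypothetical names and formats chosen for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScreenElement:
    """Hypothetical on-screen element, located by its center point."""
    text: str
    x: float                 # horizontal center
    y: float                 # vertical center
    is_entity: bool = False  # True if this is a candidate entity (e.g. a phone number)


def parse_screen(elements: List[ScreenElement], margin: float = 10.0) -> str:
    """Render elements as text, top-to-bottom and left-to-right."""
    # Sort by vertical center first, then by horizontal center.
    ordered = sorted(elements, key=lambda e: (e.y, e.x))

    # Group elements into vertical levels: an element joins the current
    # level if its center is within `margin` of that level's first element.
    levels: List[List[ScreenElement]] = []
    for el in ordered:
        if levels and abs(el.y - levels[-1][0].y) <= margin:
            levels[-1].append(el)
        else:
            levels.append([el])

    lines = []
    entity_id = 0
    for level in levels:
        # Within a level, read left to right.
        cells = []
        for el in sorted(level, key=lambda e: e.x):
            if el.is_entity:
                entity_id += 1
                # Assumed tag format so a model could refer to the entity by number.
                cells.append(f"[{entity_id}] {el.text}")
            else:
                cells.append(el.text)
        # Elements on the same line are separated by tabs.
        lines.append("\t".join(cells))
    return "\n".join(lines)


sample = [
    ScreenElement("Support", 40, 100),
    ScreenElement("555-0199", 160, 98, is_entity=True),
    ScreenElement("Contact Us", 100, 20),
    ScreenElement("Sales", 40, 60),
    ScreenElement("555-0100", 160, 62, is_entity=True),
]
print(parse_screen(sample))
```

With this sample, "Sales" and "555-0100" land on one tab-separated line because their vertical centers differ by less than the margin, and each phone number carries a numeric tag. The resulting text could then be placed in a prompt alongside the user's request, so that a phrase like "call the support one" can be mapped to a tagged entity.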

The Background Domain

Moving beyond just conversational and on-screen contexts, ReALM also explores the ability to resolve references to background entities – those peripheral events and processes that often go unnoticed by our current AI assistants.

Imagine a scenario where you ask Siri to “play that song that was playing at the supermarket earlier.” With ReALM, your iPhone could potentially capture and identify ambient audio snippets, allowing Siri to seamlessly pull up and play the track you had in mind.

This level of background awareness feels like the first step towards truly ubiquitous, context-aware AI assistance – a digital companion that not only understands your words but also the rich tapestry of your daily experiences.

The Promise of On-Device AI: Privacy and Personalization

While ReALM’s capabilities are undoubtedly impressive, perhaps its most significant advantage lies in Apple’s long-standing commitment to on-device AI and user privacy.

Unlike cloud-based AI models that rely on sending user data to remote servers for processing, ReALM is designed to operate entirely on your iPhone or other Apple devices. This not only addresses concerns around data privacy but also opens up new possibilities for AI assistance that truly understands and adapts to you as an individual.

By learning directly from your on-device data – your conversations, app usage patterns, and even ambient sensory inputs – ReALM could potentially create a hyper-personalized digital assistant tailored to your unique needs, preferences, and daily routines.

This level of personalization feels like a paradigm shift from the one-size-fits-all approach of current AI assistants, which often struggle to adapt to individual users’ idiosyncrasies and contexts.

The ReALM-250M model achieves impressive results:

- Conversational Understanding: 97.8

- Synthetic Task Comprehension: 99.8

- On-Screen Task Performance: 90.6

- Unseen Domain Handling: 97.2

The Ethical Considerations

Of course, with such a high degree of personalization and contextual awareness comes a host of ethical considerations around privacy, transparency, and the potential for AI systems to influence or even manipulate user behavior.

As ReALM gains a deeper understanding of our daily lives – from our eating habits and media consumption patterns to our social interactions and personal preferences – there’s a risk of this technology being used in ways that violate user trust or cross ethical boundaries.

Apple’s researchers are keenly aware of this tension, acknowledging in their paper the need to strike a careful balance between delivering a truly helpful, personalized AI experience and respecting user privacy and agency.

This challenge is not unique to Apple or ReALM, of course – it’s a conversation that the entire tech industry must grapple with as AI systems become increasingly sophisticated and integrated into our daily lives.

Towards a Smarter, More Natural AI Experience

As Apple continues to push the boundaries of on-device AI with models like ReALM, the tantalizing promise of a truly intelligent, context-aware digital assistant feels closer than ever before.

Imagine a world where Siri (or whatever this AI assistant may be called in the future) feels less like a disembodied voice from the cloud and more like an extension of your own thought processes – a companion that not only understands your words but also the rich tapestry of your digital life, your daily routines, and your unique preferences and contexts.

From seamlessly acting on references within apps and websites to anticipating your needs based on your location, activity, and ambient sensory inputs, ReALM represents a significant step towards a more natural, seamless AI experience that blurs the lines between our digital and physical worlds.

Of course, realizing this vision will require more than just technical innovation – it will also necessitate a thoughtful, ethical approach to AI development that prioritizes user privacy, transparency, and agency.

As Apple continues to refine and expand upon ReALM’s capabilities, the tech world will undoubtedly be watching with bated breath, eager to see how this groundbreaking AI model shapes the future of intelligent assistants and ushers in a new era of truly personalized, context-aware computing.

Whether ReALM lives up to its promise of outperforming even the mighty GPT-4 remains to be seen. But one thing is certain: the age of AI assistants that truly understand us – our words, our worlds, and the rich tapestry of our daily lives – is well underway, and Apple’s latest innovation may very well be at the forefront of this revolution.