To scientists, machine learning is a relatively old technology. The last decade has seen considerable progress, both as a result of new techniques – back propagation & deep learning, and the transformers algorithm – and massive investment of private sector resources, especially computing power. The result has been the striking and hugely publicised success of large language models.

But this rapid progress poses a paradox – for all the technical advances over the last decade, the impact on productivity growth has been undetectable. The productivity stagnation that has been such a feature of the last decade and a half continues, with all the deleterious effects that produces in flat-lining living standards and challenging public finances. The situation is reminiscent of an earlier, 1987, comment by the economist Robert Solow: “You can see the computer age everywhere but in the productivity statistics.”

There are two possible resolutions of this new Solow paradox – one optimistic, one pessimistic. The pessimist’s view is that, in terms of innovation, the low-hanging fruit has already been taken. In this perspective – most famously stated by Robert Gordon – today’s innovations are actually less economically significant than innovations of previous eras. Compared to electricity, Fordist manufacturing systems, mass personal mobility, antibiotics, and telecoms, to give just a few examples, even artificial intelligence is only of second order significance.

To add further to the pessimism, there is a growing sense that the process of innovation itself is suffering from diminishing returns – in the words of a famous recent paper: “Are ideas getting harder to find?”.

The optimistic view, by contrast, is that the productivity gains will come, but they will take time. History tells us that economies need time to adapt to new general purpose technologies – infrastructures & business models need to be adapted, and the skills to use them need to be spread through the working population. This was the experience with the introduction of electricity to industrial processes – factories had been configured around the need to transmit mechanical power from central steam engines through elaborate systems of belts and pulleys to the individual machines, so it took time to introduce systems where each machine had its own electric motor, and the period of adaptation might even involve a temporary reduction in productivity. Hence, one might expect the productivity gains from a new technology to follow a J-shaped curve.

Whether one is an optimist or a pessimist, there are a number of common research questions that the rise of artificial intelligence raises:

- Are we measuring productivity right? How do we measure value in a world of fast moving technologies?

- How do firms of different sizes adapt to new technologies like AI?

- How important – and how rate-limiting – is the development of new business models in reaping the benefits of AI?

- How do we drive productivity improvements in the public sector?

- What will be the role of AI in health and social care?

- How do national economies make system-wide transitions? When economies need to make simultaneous transitions – for example net zero and digitalisation – how do they interact?

- What institutions are needed to support the faster and wider diffusion of new technologies like AI, & the development of the skills needed to implement them?

- Given the UK’s economic imbalances, how can regional innovation systems be developed to increase absorptive capacity for new technologies like AI?

A finer-grained analysis of the origins of our productivity slowdown actually deepens the new Solow paradox. It turns out that the productivity slowdown has been most marked in the most tech-intensive sectors. In the UK, the most careful decomposition similarly finds that it’s the sectors normally thought of as most tech intensive that have contributed to the slowdown – transport equipment (i.e., automobiles and aerospace), pharmaceuticals, computer software and telecoms.

It’s worth looking in more detail at the case of pharmaceuticals to see how the promise of AI might play out. The decline in productivity of the pharmaceutical industry follows several decades in which, globally, the productivity of R&D – expressed as the number of new drugs brought to market per $billion of R&D – has been falling exponentially.
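To make “falling exponentially” concrete, here is a minimal sketch of a decline with a constant halving time. The function name and the normalised starting value are illustrative assumptions, and the nine-year halving time is roughly the figure usually quoted for Eroom’s law rather than a number taken from this post.

```python
def drugs_per_billion(years_elapsed, initial_rate, halving_time_years):
    """New drugs per $billion of R&D after a period of exponential decline."""
    return initial_rate * 0.5 ** (years_elapsed / halving_time_years)


# Illustrative assumptions only: the starting rate is normalised to 1.0
# and the halving time is set to 9 years.
for years in (0, 9, 18, 27, 36, 45, 54):
    print(years, drugs_per_billion(years, 1.0, 9.0))
```

With a constant halving time, six halvings reduce output per $billion to 1/64 of its starting value, which is why a steady exponential decline compounds into such a large fall over several decades.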

There’s no clearer signal of the promise of AI in the life sciences than the effective solution of one of the most important fundamental problems in biology – the protein folding problem – by DeepMind’s program AlphaFold. Many proteins fold into a unique three-dimensional structure, whose precise details determine its function – for example in catalysing chemical reactions. This three-dimensional structure is determined by the (one-dimensional) sequence of different amino acids along the protein chain. Given the sequence, can one predict the structure? This problem had resisted theoretical solution for decades, but AlphaFold, using deep learning to establish the correlations between sequence and many experimentally determined structures, can now predict unknown structures from sequence data with great accuracy and reliability.

Given this success in an important problem from biology, it’s natural to ask whether AI can be used to speed up the process of developing new drugs – and not surprising that this has prompted a rush of money from venture capitalists. One of the most high profile start-ups in the UK pursuing this is BenevolentAI, floated on the Amsterdam Euronext market in 2021 with a €1.5 billion valuation.

Earlier this year, it was reported that BenevolentAI was laying off 180 staff after one of its drug candidates failed in phase 2 clinical trials. Its share price has plunged, and its market cap now stands at €90 million. I’ve no reason to think that BenevolentAI is anything but a well run company employing many excellent scientists, and I hope it recovers from these setbacks. But what lessons can be learnt from this disappointment? Given that AlphaFold was so successful, why has it been harder than expected to use AI to boost R&D productivity in the pharma industry?

Two factors made the success of AlphaFold possible. Firstly, the problem it was trying to solve was very well defined – given a certain linear sequence of amino acids, what is the three-dimensional structure of the folded protein? Secondly, it had a huge corpus of well-curated public domain data to work on, in the form of experimentally determined protein structures, generated through decades of work in academia using x-ray diffraction and other techniques.

What’s been the problem in pharma? AI has been valuable in generating new drug candidates – for example, by identifying molecules that will fit into particular parts of a target protein molecule. But, according to pharma analyst Jack Scannell [1], it isn’t identifying candidate molecules that is the rate-limiting step in drug development. Instead, the problem is the lack of screening techniques and disease models that have good predictive power.

The lesson here, then, is that AI is very good at solving the problems that it is well adapted for – well posed problems, where there exist big and well-curated datasets that span the problem space. Its contribution to overall productivity growth, though, will depend on whether those AI-susceptible parts of the overall problem are in fact the rate-limiting steps.
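The rate-limiting argument can be put as a piece of arithmetic, in the spirit of Amdahl’s law from computing. The sketch below is only an illustration: the function name and the fractions used are assumptions, not estimates for the pharma industry.

```python
def overall_speedup(fraction_accelerated, stage_speedup):
    """Speed-up of a whole process when only one part of it gets faster.

    fraction_accelerated: share of the total time taken by the part AI helps with.
    stage_speedup: factor by which that part is accelerated.
    """
    remaining = (1.0 - fraction_accelerated) + fraction_accelerated / stage_speedup
    return 1.0 / remaining


# Hypothetical numbers: AI makes one stage 10 times faster.
print(overall_speedup(0.1, 10.0))  # the stage is 10% of the total time
print(overall_speedup(0.9, 10.0))  # the stage is 90% of the total time
```

If the accelerated stage accounts for only a tenth of the total time, a tenfold improvement there speeds up the whole process by only about 10%; if it accounts for nine tenths, the same improvement makes the whole process more than five times faster.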

So how is the situation changed by the massive impact of large language models? This new technology – “generative pre-trained transformers” – consists of text prediction models based on establishing statistical relationships between the words found in a very large corpus of text, through a massively multi-parameter regression [3]. This has, in effect, automated the production of plausible, though derivative and not wholly reliable, prose.
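As a toy illustration of text prediction from statistical relationships between words, the sketch below counts which word follows which in a tiny made-up corpus and predicts the most frequent successor. This is a simple bigram counter, far cruder than a transformer and not how GPT models are actually built; the corpus and the function names are assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up corpus, for illustration only.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often here
print(predict_next(model, "on"))
```

A large language model replaces these raw counts with a learned function of the whole preceding context, but the task, predicting the next word from patterns in existing text, is the same.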

Naturally, sectors for which this is the stock-in-trade feel threatened by this development. What’s absolutely clear is that this technology has essentially solved the problem of machine translation; it also raises some fascinating fundamental issues about the deep structure of language.

What areas of economic life will be most affected by large language models? It’s already clear that these tools can significantly speed up writing computer code. Any sector in which it is necessary to generate boiler-plate prose, such as marketing, routine legal services, and management consultancy, is likely to be affected. Similarly, the assimilation of large documents will be assisted by the capabilities of LLMs to provide synopses of complex texts.

What does the future hold? There is a very interesting discussion to be had, at the intersection of technology, biology and eschatology, about the prospects for “artificial general intelligence”, but I’m not going to take that on here, so I will focus on the near term.

We can expect further improvements in large language models. There will undoubtedly be improvements in efficiencies as techniques are refined and the fundamental understanding of how they work is improved. We’ll see more specialised training sets, which may improve the (currently somewhat shaky) reliability of the outputs.

There is one issue that might prove limiting. The rapid improvement we’ve seen in the performance of large language models has been driven by exponential increases in the amount of computer resource used to train the models, with empirical scaling laws emerging to allow extrapolations. The cost of training these models is now measured in hundreds of millions of dollars – with associated energy consumption starting to be a significant contribution to global carbon emissions. So it’s important to understand the extent to which the cost of computer resources will be a limiting factor on the further development of this technology.
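An empirical scaling law of this kind is typically a power law, which appears as a straight line on log-log axes and so can be fitted and extrapolated by linear regression. The sketch below assumes the form loss = a × compute^(−b); the data points are synthetic, generated from that formula purely for illustration, and all the names are hypothetical.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**(-b) in log-log space."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)


# Synthetic data generated from a = 10, b = 0.05 (not real measurements).
compute = [1e18, 1e19, 1e20, 1e21]
loss = [10.0 * c ** -0.05 for c in compute]

a, b = fit_power_law(compute, loss)
predicted = a * 1e23 ** -b  # extrapolate two orders of magnitude beyond the data
print(a, b, predicted)
```

Because the exponent is small in this made-up example, each tenfold increase in compute lowers the loss by only about 11%, which is the sense in which exponentially growing resources can buy steady but modest gains.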

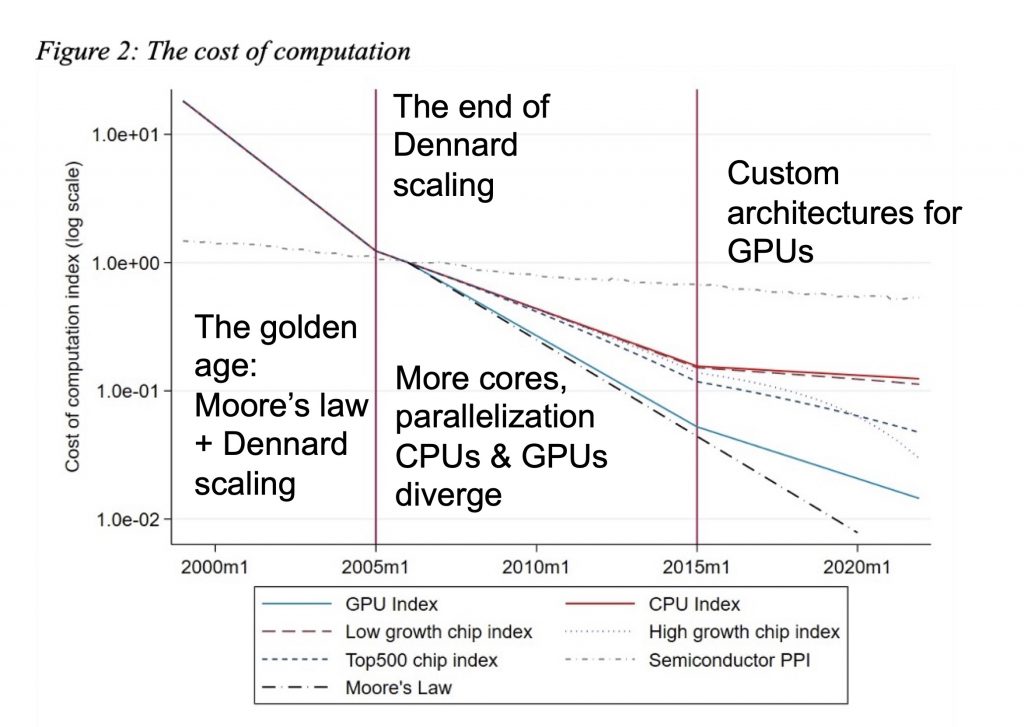

As I’ve mentioned earlier than, the exponential will increase in pc energy given to us by Moore’s regulation, and the corresponding decreases in value, started to gradual within the mid-2000’s. A latest complete examine of the price of computing by Diane Coyle and Lucy Hampton places this in context [2]. That is summarised within the determine beneath:

The price of computing with time. The stable strains signify most closely fits to a really in depth knowledge set collected by Diane Coyle and Lucy Hampton; the determine is taken from their paper [2]; the annotations are my very own.

The highly specialised integrated circuits that are used in huge numbers to train LLMs – such as the H100 graphics processing units designed by NVIDIA and manufactured by TSMC that are the mainstay of the AI industry – are in a regime where performance improvements come less from the increasing transistor densities that gave us the golden age of Moore’s law, and more from incremental improvements in task-specific architecture design, together with simply multiplying the number of units.

For more than two millennia, human cultures in both east and west have used capabilities in language as a signal for wider abilities. So it’s not surprising that large language models have seized the imagination. But it’s important not to mistake the map for the territory.

Language and text are hugely important for how we organise and collaborate to collectively achieve common goals, and for the way we preserve, transmit and build on the sum of human knowledge and culture. So we shouldn’t underestimate the power of tools which facilitate that. But equally, many of the constraints we face require direct engagement with the physical world – whether that is through the need to get the better understanding of biology that will allow us to develop new medicines more effectively, or the ability to generate abundant zero carbon energy. This is where those other areas of machine learning – pattern recognition, finding relationships within large data sets – may have a bigger contribution.

Fluency with the written word is an important skill in itself, so the improvements in productivity that will come from the new technology of large language models will arise in places where speed in generating and assimilating prose is the rate-limiting step in the process of producing economic value. For machine learning and artificial intelligence more widely, the rate at which productivity growth will be boosted will depend, not just on developments in the technology itself, but on the rate at which other technologies and other business processes are adapted to take advantage of AI.

I don’t think we can expect large language models, or AI in general, to be a magic bullet to instantly solve our productivity malaise. It’s a powerful new technology, but as for all new technologies, we have to find the places in our economic system where they can add the most value, and the system itself will take time to adapt, to take advantage of the possibilities the new technologies offer.

These notes are based on an informal talk I gave on behalf of the Productivity Institute. It benefitted a lot from discussions with Bart van Ark. The opinions, though, are entirely my own and I wouldn’t necessarily expect him to agree with me.

[1] J.W. Scannell, Eroom’s Law and the decline in the productivity of biopharmaceutical R&D, in Artificial Intelligence in Science: Challenges, Opportunities and the Future of Research.

[2] Diane Coyle & Lucy Hampton, Twenty-first century progress in computing.

[3] For a semi-technical account of how large language models work, I found this piece by Stephen Wolfram very helpful: What is ChatGPT doing … and why does it work?