By eavesdropping on the brains of living people, scientists have created the highest-resolution map yet of the neurons that encode the meanings of various words [1]. The results hint that, across individuals, the brain uses the same standard categories to classify words, helping us to turn sound into sense.

The study is based on words only in English. But it’s a step along the way to working out how the brain stores words in its language library, says neurosurgeon Ziv Williams at the Massachusetts Institute of Technology in Cambridge. By mapping the overlapping sets of brain cells that respond to various words, he says, “we can try to start building a thesaurus of meaning”.

The work was published today in Nature.

Mapping meaning

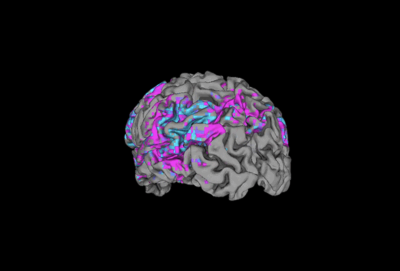

The brain area called the auditory cortex processes the sound of a word as it enters the ear. But it is the brain’s prefrontal cortex, a region where higher-order brain activity takes place, that works out a word’s ‘semantic meaning’: its essence or gist.

Previous research [2] has studied this process by analysing images of blood flow in the brain, which is a proxy for brain activity. This method allowed researchers to map word meaning to small regions of the brain.

But Williams and his colleagues found a unique opportunity to look at how individual neurons encode language in real time. His group recruited ten people about to undergo surgery for epilepsy, each of whom had had electrodes implanted in their brains to determine the source of their seizures. The electrodes allowed the researchers to record activity from around 300 neurons in each person’s prefrontal cortex.

As participants listened to multiple short sentences containing a total of around 450 words, the scientists recorded which neurons fired and when. Williams says that around two or three distinct neurons lit up for each word, although he points out that the team recorded the activity of only a tiny fraction of the prefrontal cortex’s billions of neurons. The researchers then looked at how similar the words were that triggered the same neuronal activity.

A neuron for everything

The words that the same set of neurons responded to fell into similar categories, such as actions, or words associated with people. The team also found that words that the brain might associate with one another, such as ‘duck’ and ‘egg’, triggered some of the same neurons. Words with similar meanings, such as ‘mouse’ and ‘rat’, triggered patterns of neuronal activity that were more similar than the patterns triggered by ‘mouse’ and ‘carrot’. Other groups of neurons responded to words associated with more-abstract concepts: relational words such as ‘above’ and ‘behind’, for instance.

The categories that the brain assigns to words were similar between participants, Williams says, suggesting that human brains all group meanings in the same way.

The prefrontal cortex neurons didn’t distinguish words by their sounds, only their meanings. When a person heard the word ‘son’ in a sentence, for instance, neurons that respond to words associated with family members lit up. But those neurons didn’t respond to ‘sun’ in a sentence, despite the two words sounding identical.

Mind reading

To an extent, the researchers were able to determine what people were hearing by watching their neurons fire. Although they couldn’t recreate exact sentences, they could tell, for example, that a sentence contained an animal, an action and a food, in that order.

“To get this level of detail and have a peek at what’s happening at the single-neuron level is pretty cool,” says Vikash Gilja, an engineer at the University of California San Diego and chief scientific officer of the brain–computer interface company Paradromics. He was impressed that the researchers could determine not only the neurons that corresponded to words and their categories, but also the order in which the words were spoken.

Recording from neurons is much faster than using imaging; understanding language at its natural speed, he says, will be important for future work developing brain–computer interface devices that restore speech to people who have lost that ability.