Streaming knowledge feeds many real-time analytics functions, from logistics monitoring to real-time personalization. Occasion streams, corresponding to clickstreams, IoT knowledge and different time sequence knowledge, are widespread sources of knowledge into these apps. The broad adoption of Apache Kafka has helped make these occasion streams extra accessible. Change knowledge seize (CDC) streams from OLTP databases, which can present gross sales, demographic or stock knowledge, are one other helpful supply of knowledge for real-time analytics use circumstances. On this put up, we evaluate two choices for real-time analytics on occasion and CDC streams: Rockset and ClickHouse.

Structure

ClickHouse was developed, starting in 2008, to deal with internet analytics use circumstances at Yandex in Russia. The software program was subsequently open sourced in 2016. Rockset was began in 2016 to fulfill the wants of builders constructing real-time knowledge functions. Rockset leverages RocksDB, a high-performance key-value retailer, began as an open-source undertaking at Fb round 2010 and primarily based on earlier work finished at Google. RocksDB is used as a storage engine for databases like Apache Cassandra, CockroachDB. Flink, Kafka and MySQL.

As real-time analytics databases, Rockset and ClickHouse are constructed for low-latency analytics on massive knowledge units. They possess distributed architectures that enable for scalability to deal with efficiency or knowledge quantity necessities. ClickHouse clusters are inclined to scale up, utilizing smaller numbers of enormous nodes, whereas Rockset is a serverless, scale-out database. Each provide SQL help and are able to ingesting streaming knowledge from Kafka.

Storage Format

Whereas Rockset and ClickHouse are each designed for analytic functions, there are important variations of their approaches. The ClickHouse title derives from “Clickstream Knowledge Warehouse” and it was constructed with knowledge warehouses in thoughts, so it’s unsurprising that ClickHouse borrows most of the identical concepts—column orientation, heavy compression and immutable storage—in its implementation. Column orientation is thought to be a greater storage format for OLAP workloads, like large-scale aggregations, and is on the core of ClickHouse’s efficiency.

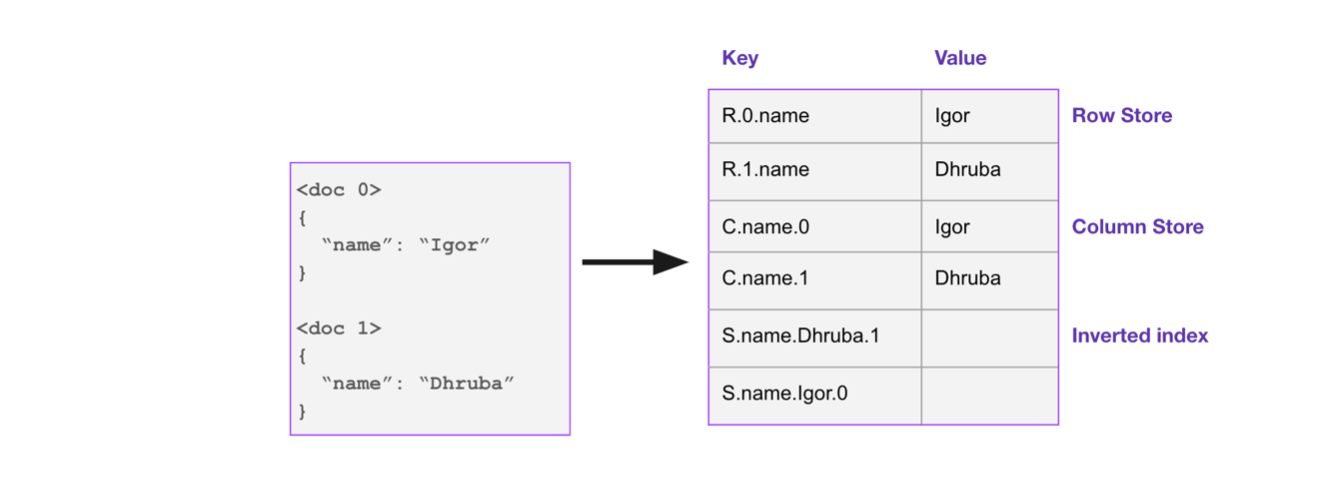

The foundational thought in Rockset, in distinction, is the indexing of knowledge for quick analytics. Rockset builds a Converged Index™ that has traits of a number of kinds of indexes—row, columnar and inverted—on all fields. Not like ClickHouse, Rockset is a mutable database.

Separation of Compute and Storage

Design for the cloud is one other space the place Rockset and ClickHouse diverge. ClickHouse is obtainable as software program, which will be self-managed on-premises or on cloud infrastructure. A number of distributors additionally provide cloud variations of ClickHouse. Rockset is designed solely for the cloud and is obtainable as a totally managed cloud service.

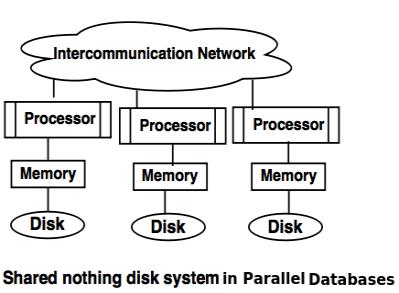

ClickHouse makes use of a shared-nothing structure, the place compute and storage are tightly coupled. This helps scale back rivalry and enhance efficiency as a result of every node within the cluster processes the info in its native storage. That is additionally a design that has been utilized by well-known knowledge warehouses like Teradata and Vertica.

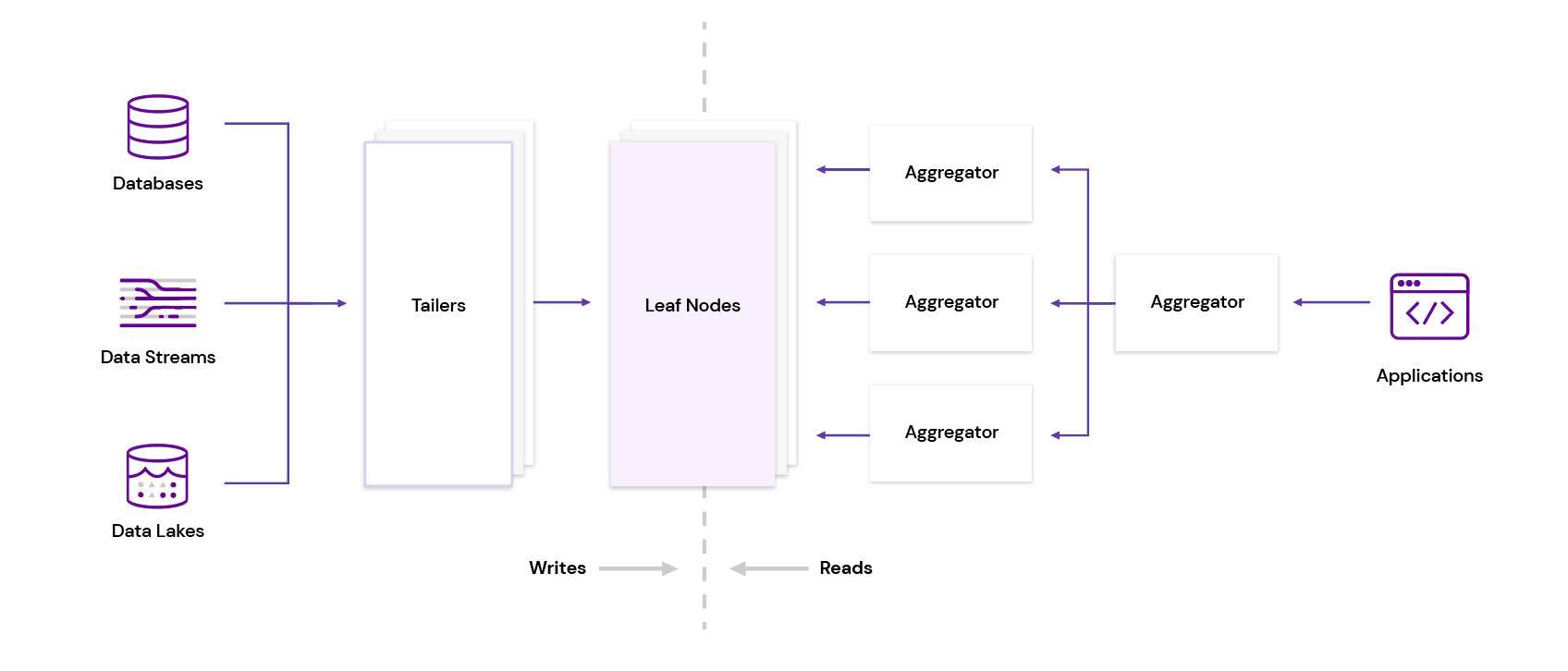

Rockset adopts an Aggregator-Leaf-Tailer (ALT) structure, popularized by internet firms like Fb, LinkedIn and Google. Tailers fetch new knowledge from knowledge sources, Leaves index and retailer the info and Aggregators execute queries in distributed style. Not solely does Rockset separate compute and storage, it additionally disaggregates ingest and question compute, so every tier on this structure will be scaled independently.

Within the following sections, we look at how a few of these architectural variations influence the capabilities of Rockset and ClickHouse.

Knowledge Ingestion

Streaming vs Batch Ingestion

Whereas ClickHouse gives a number of methods to combine with Kafka to ingest occasion streams, together with a local connector, ClickHouse ingests knowledge in batches. For a column retailer to deal with excessive ingest charges, it must load knowledge in sufficiently massive batches with a purpose to decrease overhead and maximize columnar compression. ClickHouse documentation recommends inserting knowledge in packets of at the very least 1000 rows, or not more than a single request per second. This implies customers have to configure their streams to batch knowledge forward of loading into ClickHouse.

Rockset has native connectors that ingest occasion streams from Kafka and Kinesis and CDC streams from databases like MongoDB, DynamoDB, Postgres and MySQL. In all these circumstances, Rockset ingests on a per-record foundation, with out requiring batching, as a result of Rockset is designed to make real-time knowledge out there as shortly as doable. Within the case of streaming ingest, it usually takes 1-2 seconds from when knowledge is produced to when it’s queryable in Rockset.

Knowledge Mannequin

Most often, ClickHouse would require customers to specify a schema for any desk they create. To assist make this simpler, ClickHouse lately launched higher skill to deal with semi-structured knowledge utilizing the JSON Object kind. That is coupled with the added functionality to deduce the schema from the JSON, utilizing a subset of the overall rows within the desk. Dynamically inferred columns have some limitations, corresponding to the shortcoming for use as major or type keys, so customers will nonetheless have to configure some stage of specific schema definition for optimum efficiency.

Rockset will carry out schemaless ingestion for all incoming knowledge, and can settle for fields with blended sorts, nested objects and arrays, sparse fields and null values with out the person having to carry out any guide specification. Rockset robotically generates the schema primarily based on the precise fields and kinds current within the assortment, not on a subset of the info.

ClickHouse knowledge is normally denormalized in order to keep away from having to do JOINs, and customers have commented that the info preparation wanted to take action will be tough. In distinction, there isn’t any advice to denormalize knowledge in Rockset, as Rockset can deal with JOINs nicely.

Updates and Deletes

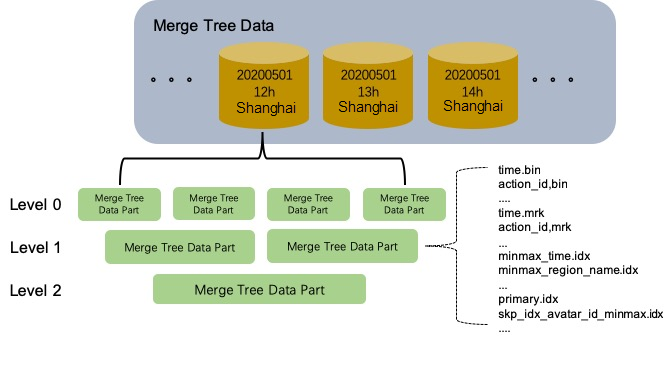

As talked about briefly within the Structure part, ClickHouse writes knowledge to immutable recordsdata, referred to as “elements.” Whereas this design helps ClickHouse obtain quicker reads and writes, it does so at the price of replace efficiency.

ClickHouse helps replace and delete operations, which it refers to as mutations. They don’t straight replace or delete the info however as a substitute rewrite and merge the info elements asynchronously. Any queries that run whereas an asynchronous mutation is in progress might get a mixture of knowledge from mutated and non-mutated elements.

As well as, these mutations can get costly, as even small adjustments will trigger massive rewrites of whole elements. ClickHouse documentation states that these are heavy operations and don’t advise that they be used regularly. For that reason, database CDC streams, which regularly comprise updates and deletes, are dealt with much less effectively by ClickHouse.

In distinction, all paperwork saved in a Rockset assortment are mutable and will be up to date on the subject stage, even when these fields are deeply nested inside arrays and objects. Solely the fields in a doc which can be a part of an replace request have to be reindexed, whereas the remainder of the fields within the doc stay untouched.

Rockset makes use of RocksDB, a high-performance key-value retailer that makes mutations trivial. RocksDB helps atomic writes and deletes throughout totally different keys. Resulting from its design, Rockset is likely one of the few real-time analytics databases that may effectively ingest from database CDC streams.

Ingest Transformations and Rollups

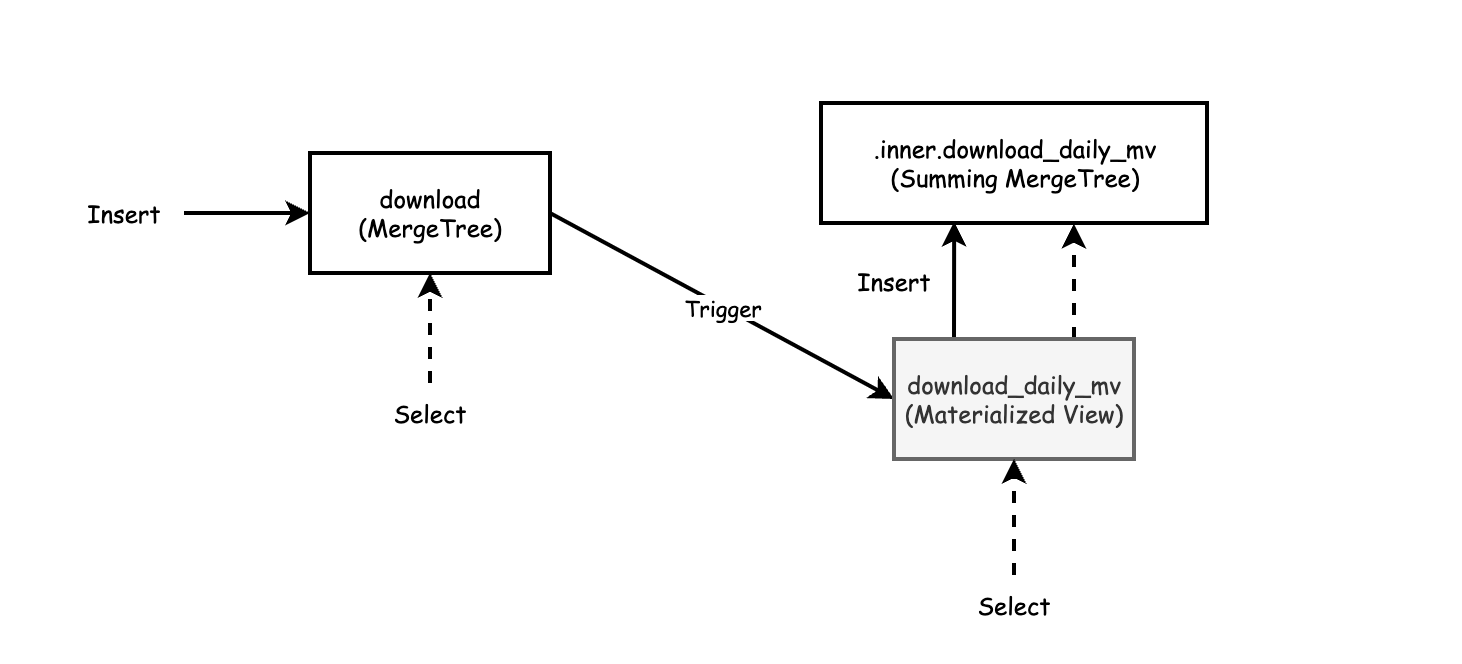

It’s helpful to have the ability to rework and rollup streaming knowledge as it’s being ingested. ClickHouse has a number of storage engines that may pre-aggregate knowledge. The SummingMergeTree sums rows that correspond to the identical major key and shops the outcome as a single row. The AggregatingMergeTree is analogous and applies mixture features to rows with the identical major key to provide a single row as its outcome.

Rockset helps SQL transformations that apply to all paperwork on the level of ingestion. Customers have the flexibility to specify many extra kinds of transformations by means of using SQL. Widespread makes use of for ingest transformation embrace dropping fields, subject masking and hashing, and sort coercion.

Rollups in Rockset are a particular kind of transformation that aggregates knowledge upon ingest. Utilizing rollups reduces storage measurement and improves question efficiency as a result of solely the aggregated knowledge is saved and queried.

Queries and Efficiency

Indexing

ClickHouse’s efficiency stems primarily from storage optimizations corresponding to column orientation, aggressive compression and ordering of knowledge by major key. ClickHouse does use indexing to hurry up queries as nicely, however in a extra restricted style as in comparison with its storage optimizations.

Main indexes in ClickHouse are sparse indexes. They don’t index each row however as a substitute have one index entry per group of rows. As a substitute of returning single rows that match the question, the sparse index is used to find teams of rows which can be doable matches.

Equally, ClickHouse makes use of secondary indexes, generally known as knowledge skipping indexes, to allow ClickHouse to skip studying blocks that won’t match the question. ClickHouse then scans by means of the lowered knowledge set to finish executing the question.

Rockset optimizes for compute effectivity, so indexing is the primary driver behind its question velocity. Rockset’s Converged Index combines a row index, columnar index and inverted index. This permits Rockset’s SQL engine to make use of indexing optimally to speed up numerous sorts of analytical queries, from extremely selective queries to large-scale aggregations. The Converged Index can be a protecting index, that means all queries will be resolved solely by means of the index, with none extra lookup.

There’s a huge distinction in how indexing is managed in ClickHouse and Rockset. In ClickHouse, the onus is on the person to grasp what indexes are wanted with a purpose to configure major and secondary indexes. Rockset, by default, indexes all the info that’s ingested within the other ways supplied by the Converged Index.

Joins

Whereas ClickHouse helps JOIN performance, many customers report efficiency challenges with JOINs, notably on massive tables. ClickHouse doesn’t have the flexibility to optimize these JOINs successfully, so alternate options, like denormalizing knowledge beforehand to keep away from JOINs, are really helpful.

In supporting full-featured SQL, Rockset was designed with JOIN efficiency in thoughts. Rockset partitions the JOINs, and these partitions run in parallel on distributed Aggregators that may be scaled out if wanted. It additionally has a number of methods of performing JOINs:

- Hash Be a part of

- Nested loop Be a part of

- Broadcast Be a part of

- Lookup Be a part of

The power to JOIN knowledge in Rockset is especially helpful when analyzing knowledge throughout totally different database programs and reside knowledge streams. Rockset can be utilized, for instance, to JOIN a Kafka stream with dimension tables from MySQL. In lots of conditions, pre-joining the info is just not an choice as a result of knowledge freshness is vital or the flexibility to carry out advert hoc queries is required.

Operations

Cluster Administration

ClickHouse clusters will be run in self-managed mode or by means of an organization that commercializes ClickHouse as a cloud service. In a self-managed cluster, ClickHouse customers might want to set up and configure the ClickHouse software program in addition to required companies like ZooKeeper or ClickHouse Keeper. The cloud model will assist take away among the {hardware} and software program provisioning burden, however customers nonetheless have to configure nodes, shards, software program variations, replication and so forth. Customers have to intervene to improve the cluster, throughout which they might expertise downtime or efficiency degradation.

In distinction, Rockset is totally managed and serverless. The idea of clusters and servers is abstracted away, so no provisioning is required and customers shouldn’t have to handle any infrastructure themselves. Software program upgrades occur within the background, so customers can simply reap the benefits of the most recent model of software program.

Scaling and Rebalancing

Whereas it’s pretty simple to get began with the single-node model of ClickHouse, scaling the cluster to fulfill efficiency and storage wants takes some effort. As an example, organising distributed ClickHouse entails making a shard desk on every particular person server after which defining the distributed view through one other create command.

As mentioned within the Structure overview, compute and storage are sure to one another in ClickHouse nodes and clusters. Customers have to scale each compute and storage in mounted ratios and lack the pliability to scale assets independently. This may end up in useful resource utilization that’s suboptimal, the place both compute or storage is overprovisioned.

The tight coupling of compute and storage additionally offers rise to conditions the place imbalances or hotspots can happen. A standard situation arises when including nodes to a ClickHouse cluster, which requires rebalancing of knowledge to populate the newly added nodes. ClickHouse documentation calls out that ClickHouse clusters should not elastic as a result of they don’t help automated shard rebalancing. As a substitute, rebalancing is a extremely concerned course of that may embrace manually weighting writes to bias the place new knowledge is written, guide relocation of current knowledge partitions, and even copying and exporting knowledge to a brand new cluster.

One other aspect impact of the shortage of compute-storage separation is that a lot of small queries can have an effect on your complete cluster. ClickHouse recommends bi-level sharding to restrict the influence of those small queries.

Scaling in Rockset entails much less effort due to its separation of compute and storage. Storage autoscales as knowledge measurement grows, whereas compute will be scaled by specifying the Digital Occasion measurement, which governs the overall compute and reminiscence assets out there within the system. Customers can scale assets independently for extra environment friendly useful resource utilization. No rebalancing is required as Rockset’s compute nodes entry knowledge from its shared storage.

Replication

Resulting from ClickHouse’s shared-nothing structure, replicas serve a twin objective: availability and sturdiness. Whereas replicas have the potential to assist with question efficiency, they’re important to protect in opposition to the lack of knowledge, so ClickHouse customers should incur the extra value for replication. Configuring replication in ClickHouse additionally entails deploying ZooKeeper or ClickHouse Keeper, ClickHouse’s model of the service, for coordination.

In Rockset’s cloud-native structure, it makes use of cloud object storage to make sure sturdiness with out requiring extra replicas. A number of replicas can assist question efficiency, however these will be introduced on-line on demand, solely when there’s an lively question request. Through the use of cheaper cloud object storage for sturdiness and solely spinning up compute and quick storage for replicas when wanted for efficiency, Rockset can present higher price-performance.

Abstract

Rockset and ClickHouse are each real-time analytics choices for streaming knowledge, however they’re designed fairly in another way below the hood. Their technical variations manifest themselves within the following methods.

- Effectivity of streaming writes and updates: ClickHouse discourages small, streaming writes and frequent updates as it’s constructed on immutable columnar storage. Rockset, as a mutable database, handles streaming ingest, updates and deletes way more effectively, making it appropriate as a goal for occasion and database CDC streams.

- Knowledge and question flexibility: ClickHouse normally requires knowledge to be denormalized as a result of large-scale JOINs don’t carry out nicely. Rockset operates on semi-structured knowledge, with out the necessity for schema definition or denormalization, and helps full-featured SQL together with JOINs.

- Operations: Rockset was constructed for the cloud from day one, whereas ClickHouse is software program that may be deployed on-premises or on cloud infrastructure. Rockset’s disaggregated cloud-native structure minimizes the operational burden on the person and allows fast and simple scale out.

For these causes, many organizations have opted to construct on Rockset somewhat than spend money on heavier knowledge engineering to make different options work. If you want to strive Rockset for your self, you possibly can arrange a brand new account and connect with a streaming supply in minutes.