(DedMityay/Shutterstock)

Scale AI, which provides data labeling and annotation software and services to organizations like OpenAI, Meta, and the Department of Defense, this week announced a $1-billion funding round at a valuation of nearly $14 billion, putting it in a prime position to capitalize on the generative AI revolution.

Alexandr Wang founded Scale AI back in 2016 to provide labeled and annotated data, primarily for autonomous driving systems. At the time, self-driving cars seemed to be just around the corner, but getting the cars on the road safely has proven to be a harder problem than originally anticipated.

With the explosion of interest in GenAI over the past 18 months, the San Francisco-based company saw demand surge for labeling and annotating text data, which is the primary input for large language models (LLMs). Scale AI employs a large network of hundreds of contractors around the world who perform the work of labeling and annotating clients’ data, which involves things like describing pieces of text or conversation, assessing the sentiment, and overall establishing the “ground truth” of the data so it can be used for supervised machine learning.

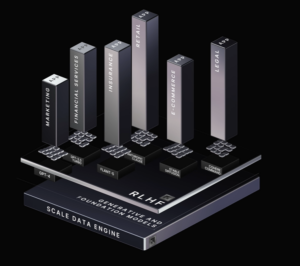

In addition to providing data labeling and annotation services, Scale AI also develops software, including a product called the Scale Data Engine that’s geared toward helping customers create their own AI-ready data, or in other words, to create a data foundry.

The Scale Data Engine provides a framework for the “end-to-end AI lifecycle,” the company says. The software helps to automate the collection, curation, and labeling or annotation of text, image, video, audio, and sensor data. It provides data management for unstructured data, direct integration with LLMs from OpenAI, Cohere, Anthropic, and Meta (among others), management of the reinforcement learning from human feedback (RLHF) workflow, and “red teaming” of models to ensure security.

Scale AI also develops the Scale GenAI Platform, which it bills as a “full stack” GenAI product that helps users optimize their LLM performance, provides automated model comparisons, and helps users implement retrieval-augmented generation (RAG) to boost the quality of their LLM applications.

It’s all about expanding customers’ ability to scale up the most critical asset for AI: their data.

“Data abundance is not the default; it’s a choice. It requires bringing together the best minds in engineering, operations, and AI,” Wang said in a press release. “Our vision is one of data abundance, where we have the means of production to continue scaling frontier LLMs many more orders of magnitude. We shouldn’t be data-constrained in getting to GPT-10.”

This week’s $1 billion Series F round solidifies Scale AI as one of the leaders in an emerging field of data management for GenAI. Companies are rushing to adopt GenAI, but often find their data is ill-prepared for use with LLMs, whether for training new models, fine-tuning existing ones, or just feeding data into existing LLMs using prompts and retrieval-augmented generation (RAG) techniques.

The round includes nearly two dozen investors, including Nvidia, Meta, Amazon, and the investment arms of Intel, AMD, Cisco, and ServiceNow. The $13.8 billion valuation is nearly double the $7.3 billion valuation Scale AI had in 2021, and puts the company, which reportedly had revenues of $700 million last year, on track for an initial public offering (IPO).

Scale AI has worked with a range of companies, including iRobot, maker of the Roomba vacuum; Toyota; Nuvo; Amazon; and Salesforce. It signed a $249-million contract with the Department of Defense in 2022, and it has done work with the US Air Force.

“As an AI community we’ve exhausted all of the easy data, the internet data, and now we need to move on to more complex data,” Wang told the Financial Times. “The quantity matters but the quality is paramount. We’re no longer in a paradigm where more comments off Reddit are going to magically improve the models.”
