Research reveals survey participants fooled by AI-generated images nearly 40 percent of the time.

If you have recently had trouble figuring out whether an image of a person is real or generated through artificial intelligence (AI), you're not alone.

A new study from University of Waterloo researchers found that people had more difficulty than expected distinguishing who is a real person and who is artificially generated.

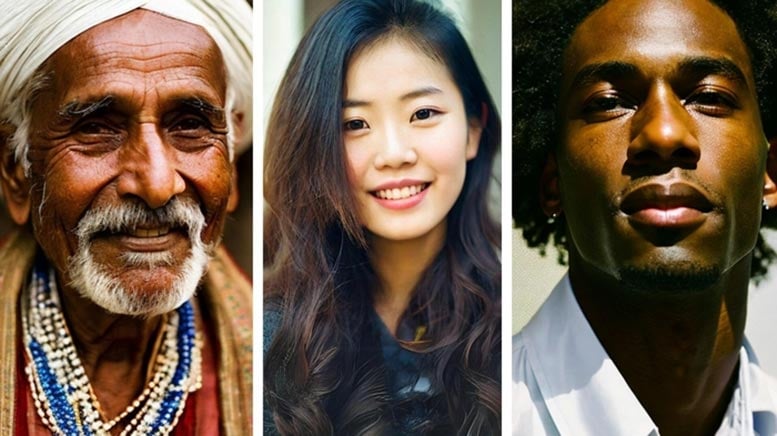

The Waterloo study gave 260 participants 20 unlabeled pictures: 10 were of real people obtained from Google searches, and the other 10 were generated by Stable Diffusion or DALL-E, two commonly used AI programs that generate images.

Participants were asked to label each image as real or AI-generated and explain why they made their decision. Only 61 percent of participants could tell the difference between AI-generated people and real ones, far below the 85 percent threshold that the researchers expected.

Misleading Indicators and Rapid AI Development

"People are not as adept at making the distinction as they think they are," said Andreea Pocol, a PhD candidate in Computer Science at the University of Waterloo and the study's lead author.

Participants paid attention to details such as fingers, teeth, and eyes as possible indicators when looking for AI-generated content, but their assessments weren't always correct.

Pocol noted that the nature of the study allowed participants to scrutinize images at length, whereas most internet users look at images in passing.

"People who are just doomscrolling or don't have time won't pick up on these cues," Pocol said.

Pocol added that the extremely rapid rate at which AI technology is developing makes it particularly difficult to understand the potential for malicious or nefarious use of AI-generated images. The pace of academic research and legislation often cannot keep up: AI-generated images have become even more realistic since the study began in late 2022.

The Threat of AI-Generated Disinformation

These AI-generated images are particularly threatening as a political and cultural tool, since they could let any user create fake images of public figures in embarrassing or compromising situations.

"Disinformation isn't new, but the tools of disinformation have been constantly shifting and evolving," Pocol said. "It may get to a point where people, no matter how trained they are, will still struggle to differentiate real images from fakes. That's why we need to develop tools to identify and counter this. It's like a new AI arms race."

The study, "Seeing Is No Longer Believing: A Survey on the State of Deepfakes, AI-Generated Humans, and Other Nonveridical Media," was published in Advances in Computer Graphics.

Reference: "Seeing Is No Longer Believing: A Survey on the State of Deepfakes, AI-Generated Humans, and Other Nonveridical Media" by Andreea Pocol, Lesley Istead, Sherman Siu, Sabrina Mokhtari and Sara Kodeiri, 29 December 2023, Advances in Computer Graphics.

DOI: 10.1007/978-3-031-50072-5_34