Deep neural networks have become increasingly relevant across various industries, and for good reason. When trained using supervised learning, they can be highly effective at solving various problems; however, achieving optimal results requires a large amount of training data. The data must be of high quality and representative of the production environment.

While large amounts of data are available online, most of it is unprocessed and not useful for machine learning (ML). Let's assume we want to build a traffic light detector for autonomous driving. Training images should contain traffic lights and bounding boxes that accurately capture the borders of those traffic lights. But transforming raw data into organized, labeled, and useful data is time-consuming and challenging.

To optimize this process, I developed Cortex: The Largest AI Dataset, a new SaaS product that focuses on image data labeling and computer vision but can be extended to different types of data and other artificial intelligence (AI) subfields. Cortex has various use cases that benefit many fields and image types:

- Improving model performance for fine-tuning on custom data sets: Pretraining a model on a large and diverse data set like Cortex can significantly improve the model's performance when it is fine-tuned on a smaller, specialized data set. For instance, in the case of a cat breed identification app, pretraining a model on a diverse collection of cat images helps the model quickly recognize various features across different cat breeds. This improves the app's accuracy in classifying cat breeds when the model is fine-tuned on a specific data set.

- Training a model for general object detection: Because the data set contains labeled images of various objects, a model can be trained to detect and identify certain objects in images. One common example is the identification of cars, useful for applications such as automated parking systems, traffic management, law enforcement, and security. Besides car detection, the approach for general object detection can be extended to other MS COCO classes (the data set currently handles only MS COCO classes).

- Training a model for extracting object embeddings: Object embeddings are representations of objects as vectors in a high-dimensional space. By training a model on Cortex, you can teach it to generate embeddings for objects in images, which can then be used for applications such as similarity search or clustering.

- Generating semantic metadata for images: Cortex can be used to generate semantic metadata for images, such as object labels. This can give application users additional insights and interactivity (e.g., clicking on objects in an image to learn more about them or seeing related images in a news portal). This feature is particularly advantageous for interactive learning platforms, in which users can explore objects (animals, vehicles, household items, etc.) in greater detail.
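The similarity-search use case can be illustrated with a small sketch. It assumes object embeddings have already been produced by some model (Cortex does not return embeddings today) and simply ranks stored embeddings by cosine similarity to a query embedding; the ids and three-dimensional toy vectors are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def most_similar(query: list[float], index: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k stored embeddings most similar to the query."""
    ranked = sorted(index, key=lambda key: cosine_similarity(query, index[key]), reverse=True)
    return ranked[:k]


# Toy embeddings; real models typically produce hundreds of dimensions.
index = {
    "cat_1": [0.9, 0.1, 0.0],
    "cat_2": [0.8, 0.2, 0.1],
    "car_1": [0.0, 0.1, 0.9],
}
print(most_similar([1.0, 0.0, 0.0], index, k=2))  # ['cat_1', 'cat_2']
```

A linear scan like this is fine for a few thousand vectors; larger collections would normally use an approximate nearest-neighbor index instead.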

Our Cortex walkthrough will focus on the last use case: extracting semantic metadata from website images and creating clickable bounding boxes over these images. When a user clicks on a bounding box, the system initiates a Google search for the MS COCO object class identified within it.
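The click behavior described above can be sketched in a few lines. This assumes detections in the `x1`/`y1`/`x2`/`y2`/`classname` format of the Cortex responses shown later in this article; the function names and the rule of preferring the smallest box when boxes overlap are our own illustrative choices, not part of Cortex.

```python
from typing import Optional
from urllib.parse import urlencode


def classname_at(detections: list[dict], x: float, y: float) -> Optional[str]:
    """Return the class of the box under a click at (x, y), or None."""
    hits = [
        d for d in detections
        if d["x1"] <= x <= d["x2"] and d["y1"] <= y <= d["y2"]
    ]
    if not hits:
        return None
    # When boxes overlap, pick the smallest one so a small object inside a
    # larger box stays clickable.
    smallest = min(hits, key=lambda d: (d["x2"] - d["x1"]) * (d["y2"] - d["y1"]))
    return smallest["classname"]


def google_search_url(classname: str) -> str:
    """Build a Google search URL for an MS COCO class name."""
    return "https://www.google.com/search?" + urlencode({"q": classname})


detections = [
    {"classname": "cat", "x1": 245, "y1": 65, "x2": 323, "y2": 176},
    {"classname": "dog", "x1": 68, "y1": 18, "x2": 255, "y2": 170},
]
clicked = classname_at(detections, 250, 100)  # inside both boxes; the cat box is smaller
if clicked is not None:
    print(google_search_url(clicked))  # https://www.google.com/search?q=cat
```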

The Importance of High-quality Data for Modern AI

Modern AI has recently seen significant breakthroughs in several subfields, including computer vision, natural language processing (NLP), and tabular data analysis. All of these subfields share a common reliance on high-quality data. AI is only as good as the data it is trained on, and, as such, data-centric AI has become an increasingly important area of research. Techniques like transfer learning and synthetic data generation have been developed to address the problem of data scarcity, while data labeling and cleaning remain essential for ensuring data quality.

In particular, labeled data plays a vital role in the development of modern AI models such as fine-tuned LLMs or computer vision models. It is easy to obtain trivial labels for pretraining language models, such as the next word in a sentence. However, collecting labeled data for conversational AI models like ChatGPT is more complicated; these labels must demonstrate the desired behavior of the model so that it appears to hold meaningful conversations. The challenges multiply when dealing with image labeling. Creating models like DALL-E 2 and Stable Diffusion required an enormous data set of images labeled with textual descriptions to train them to generate images from user prompts.

Low-quality data for systems like ChatGPT would lead to poor conversational abilities, and low-quality data for image object bounding boxes would lead to inaccurate predictions, such as assigning the wrong classes to bounding boxes, failing to detect objects, and so on. Low-quality image data can also include noisy and blurry images. Cortex aims to make high-quality data readily available to developers creating or training their image models, making the training process faster, more efficient, and more predictable.

An Overview of Large Data Set Processing

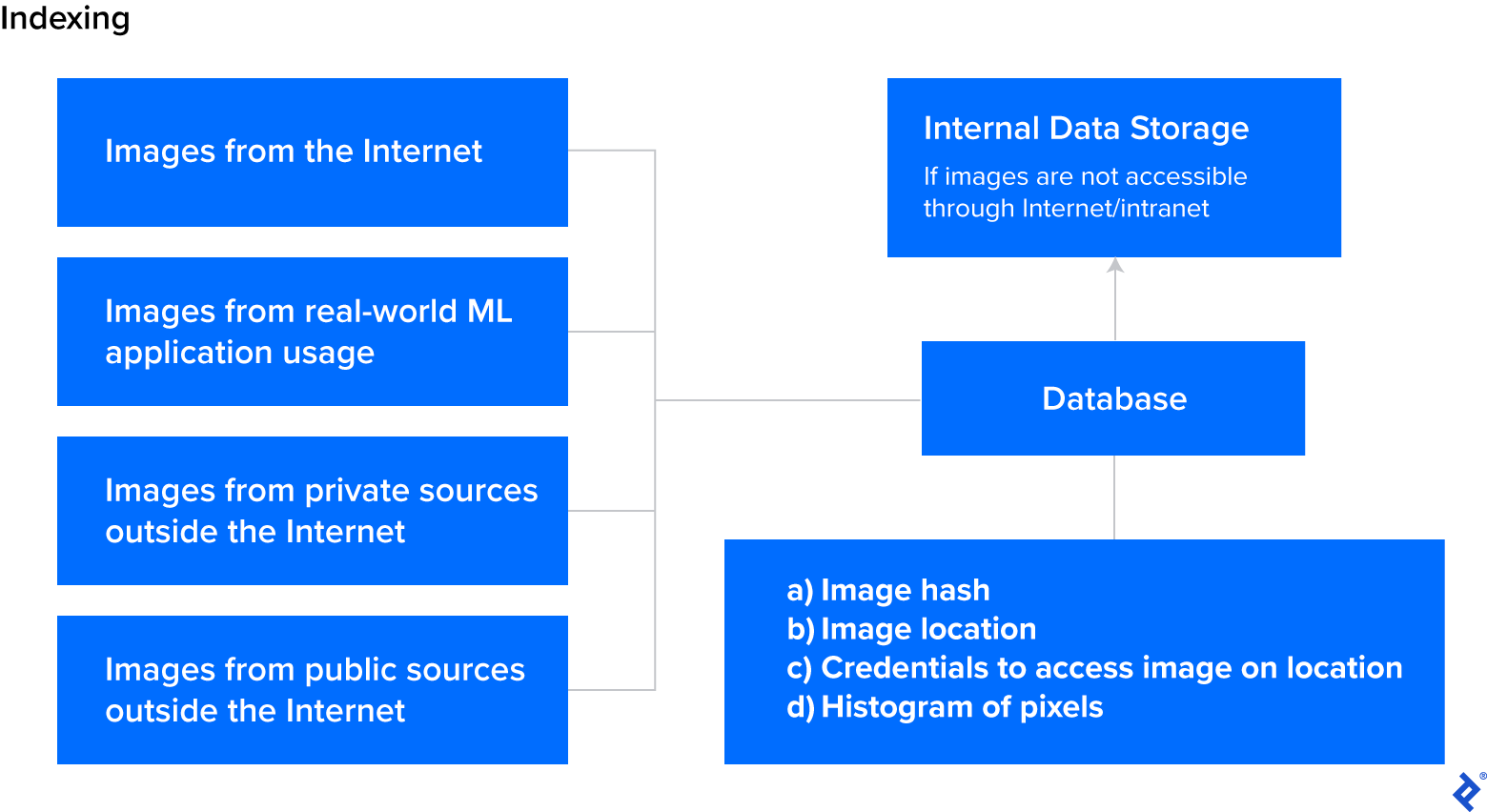

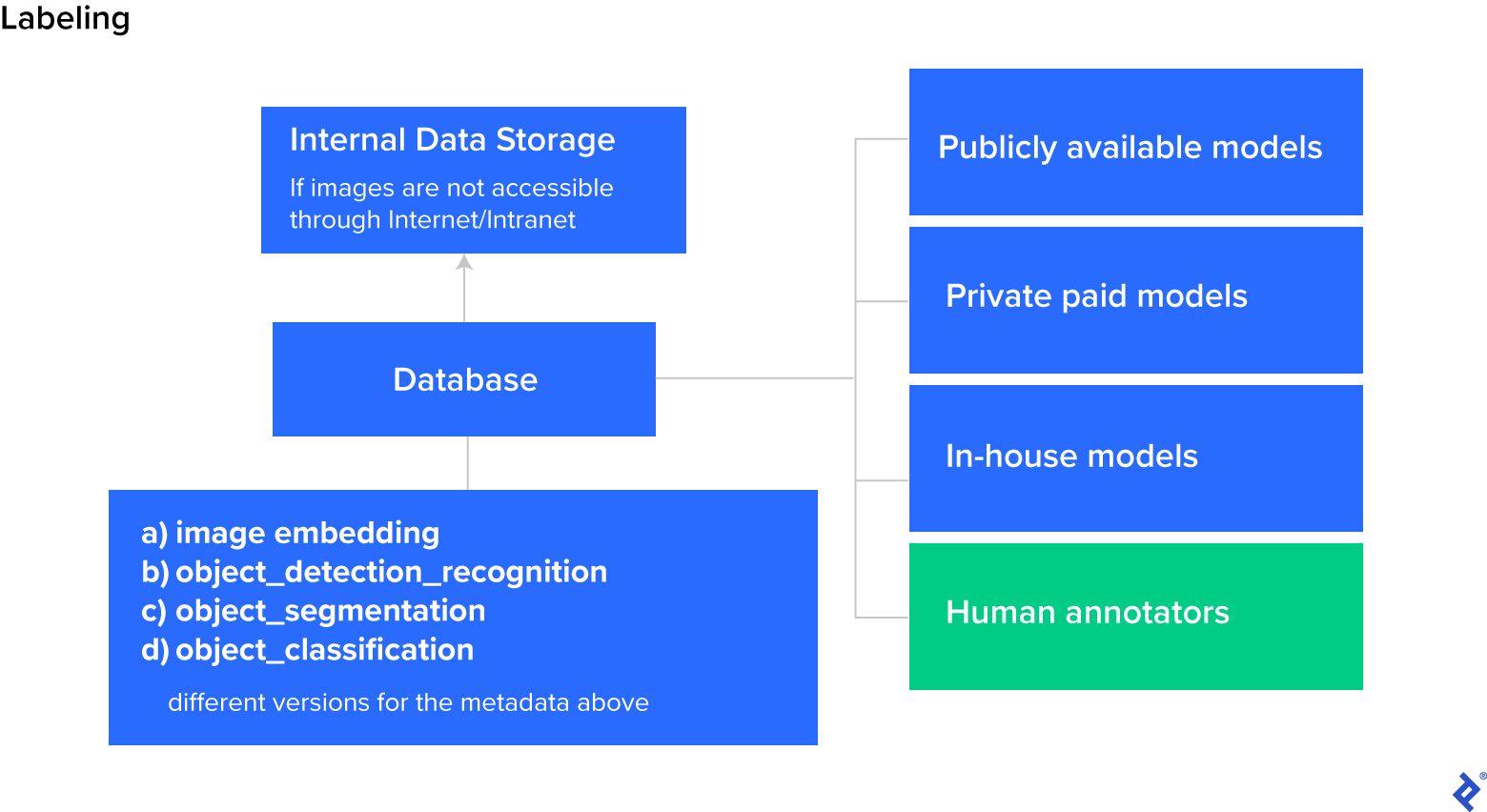

Creating a large AI data set is an involved process with several stages. Typically, in the data collection phase, images are scraped from the internet and stored with their URLs and structural attributes (e.g., image hash, image width and height, and histogram). Next, models perform automatic image labeling to add semantic metadata (e.g., image embeddings, object detection labels) to the images. Finally, quality assurance (QA) efforts verify the accuracy of labels through rule-based and ML-based approaches.
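The structural attributes mentioned above are cheap to compute during collection. Below is a minimal, standard-library-only sketch that assumes PNG input and SHA-256 as the hash function. The article does not say which hash Cortex uses, although the 64-hex-character hash in the sample responses is consistent with a 256-bit digest. A real pipeline would decode JPEG and other formats with an imaging library; the histogram helper assumes pixels are already decoded to 8-bit grayscale values.

```python
import hashlib
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_structural_attributes(data: bytes) -> dict:
    """Hash, width, and height of a PNG file, read from its raw bytes."""
    if len(data) < 24 or not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # The IHDR chunk follows the 8-byte signature, a 4-byte length, and the
    # 4-byte type; width and height are big-endian 32-bit integers at
    # byte offsets 16-19 and 20-23.
    width, height = struct.unpack(">II", data[16:24])
    return {
        "hash": hashlib.sha256(data).hexdigest(),  # assumption: SHA-256
        "width": width,
        "height": height,
    }


def grayscale_histogram(pixels, bins: int = 16) -> list[int]:
    """Count 8-bit grayscale values (0-255) into equal-width bins."""
    counts = [0] * bins
    for value in pixels:
        counts[value * bins // 256] += 1
    return counts
```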

Data Collection

There are various methods of obtaining data for AI systems, each with its own set of advantages and disadvantages:

- Labeled data sets: These are created by researchers to solve specific problems. These data sets, such as MNIST and ImageNet, already contain labels for model training. Platforms like Kaggle provide a space for sharing and discovering such data sets, but these are often intended for research, not commercial use.

- Private data: This type is proprietary to organizations and is usually rich in domain-specific information. However, it often needs additional cleaning, data labeling, and possibly consolidation from different subsystems.

- Public data: This data is freely accessible online and can be collected via web crawlers. This approach can be time-consuming, especially if data is stored on high-latency servers.

- Crowdsourced data: This type involves engaging human workers to collect real-world data. The quality and format of the data can be inconsistent due to variations in individual workers' output.

- Synthetic data: This data is generated by applying controlled modifications to existing data. Synthetic data techniques include generative adversarial networks (GANs) and simple image augmentations, and they are especially useful when substantial data is already available.
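Image augmentations only yield valid synthetic samples if the labels are transformed along with the pixels. A minimal sketch of a horizontal flip, assuming boxes use the `x1`/`y1`/`x2`/`y2` fields of the Cortex responses shown later and edge coordinates in the range [0, width]; the function names are hypothetical and images are plain lists of pixel rows for simplicity.

```python
def hflip_box(box: dict, image_width: int) -> dict:
    """Mirror one x1/y1/x2/y2 box for a horizontally flipped image."""
    flipped = dict(box)  # keep classname, conf, and y coordinates unchanged
    # With edge coordinates in [0, image_width], an edge at x moves to
    # image_width - x, so the old right edge becomes the new left edge.
    flipped["x1"] = image_width - box["x2"]
    flipped["x2"] = image_width - box["x1"]
    return flipped


def hflip_sample(image: list[list[int]], boxes: list[dict]):
    """Flip an image (a list of pixel rows) and all of its boxes left to right."""
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    return flipped_image, [hflip_box(b, width) for b in boxes]
```

Flipping twice returns the original labels, which makes a handy sanity check for any augmentation code.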

When building AI systems, obtaining the right data is crucial to ensure effectiveness and accuracy.

Data Labeling

Data labeling refers to the process of assigning labels to data samples so that the AI system can learn from them. The most common data labeling methods are the following:

- Manual data labeling: This is the most straightforward approach. A human annotator examines each data sample and manually assigns a label to it. This approach can be time-consuming and expensive, but it is often necessary for data that requires specific domain expertise or is highly subjective.

- Rule-based labeling: This is an alternative to manual labeling that involves creating a set of rules or algorithms to assign labels to data samples. For example, when creating labels for video frames, instead of manually annotating every frame, you can annotate the first and last frames and programmatically interpolate the frames in between.

- ML-based labeling: This approach involves using existing machine learning models to produce labels for new data samples. For example, a model might be trained on a large data set of labeled images and then used to automatically label new images. While this approach requires a great many labeled images for training, it can be particularly efficient, and a recent paper suggests that ChatGPT is already outperforming crowdworkers on text annotation tasks.
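The video-frame example of rule-based labeling can be sketched as linear interpolation between two annotated keyframes. This is a minimal illustration that assumes the object moves at a roughly constant speed between keyframes; the function name is hypothetical, and real annotation tools may use tracking instead.

```python
def interpolate_boxes(first: dict, last: dict, first_frame: int, last_frame: int) -> dict[int, dict]:
    """Linearly interpolate box corners for every frame between two keyframes."""
    if last_frame <= first_frame:
        raise ValueError("last_frame must come after first_frame")
    span = last_frame - first_frame
    boxes = {}
    for frame in range(first_frame, last_frame + 1):
        t = (frame - first_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        boxes[frame] = {
            # round() snaps to whole pixels (Python rounds exact halves to even)
            key: round(first[key] + t * (last[key] - first[key]))
            for key in ("x1", "y1", "x2", "y2")
        }
    return boxes
```

For example, `interpolate_boxes(first, last, 0, 4)` produces boxes for frames 0 through 4, with frame 2 exactly halfway between the two keyframe boxes.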

The choice of labeling method depends on the complexity of the data and the available resources. By carefully selecting and implementing the appropriate data labeling method, researchers and practitioners can create high-quality labeled data sets to train increasingly advanced AI models.

Quality Assurance

Quality assurance ensures that the data and labels used for training are accurate, consistent, and relevant to the task at hand. The most common QA methods mirror the data labeling methods:

- Manual QA: This approach involves manually reviewing data and labels to check for accuracy and relevance.

- Rule-based QA: This method employs predefined rules to check data and labels for accuracy and consistency.

- ML-based QA: This method uses machine learning algorithms to automatically detect errors or inconsistencies in data and labels.
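Rule-based QA can be sketched as a set of consistency checks run on every labeled sample. This assumes the Cortex response format shown later in this article (`width`, `height`, and a nested `object_analysis` list); the specific rules, the function name, and the allowed class set are illustrative, not Cortex's actual QA rules.

```python
def rule_based_issues(sample: dict, allowed_classes: set[str]) -> list[str]:
    """Return human-readable problems found in one labeled sample."""
    issues = []
    width, height = sample["width"], sample["height"]
    # object_analysis is a list of lists of detections; check every detection.
    for det in (d for group in sample["object_analysis"] for d in group):
        name = det["classname"]
        if name not in allowed_classes:
            issues.append(f"unknown class {name!r}")
        if not (det["x1"] < det["x2"] and det["y1"] < det["y2"]):
            issues.append(f"degenerate box for {name!r}")
        if det["x1"] < 0 or det["y1"] < 0 or det["x2"] > width or det["y2"] > height:
            issues.append(f"box for {name!r} exceeds image bounds")
        if not 0.0 <= det["conf"] <= 1.0:
            issues.append(f"confidence out of range for {name!r}")
    return issues
```

Samples with a non-empty issue list can be dropped or sent to a human reviewer.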

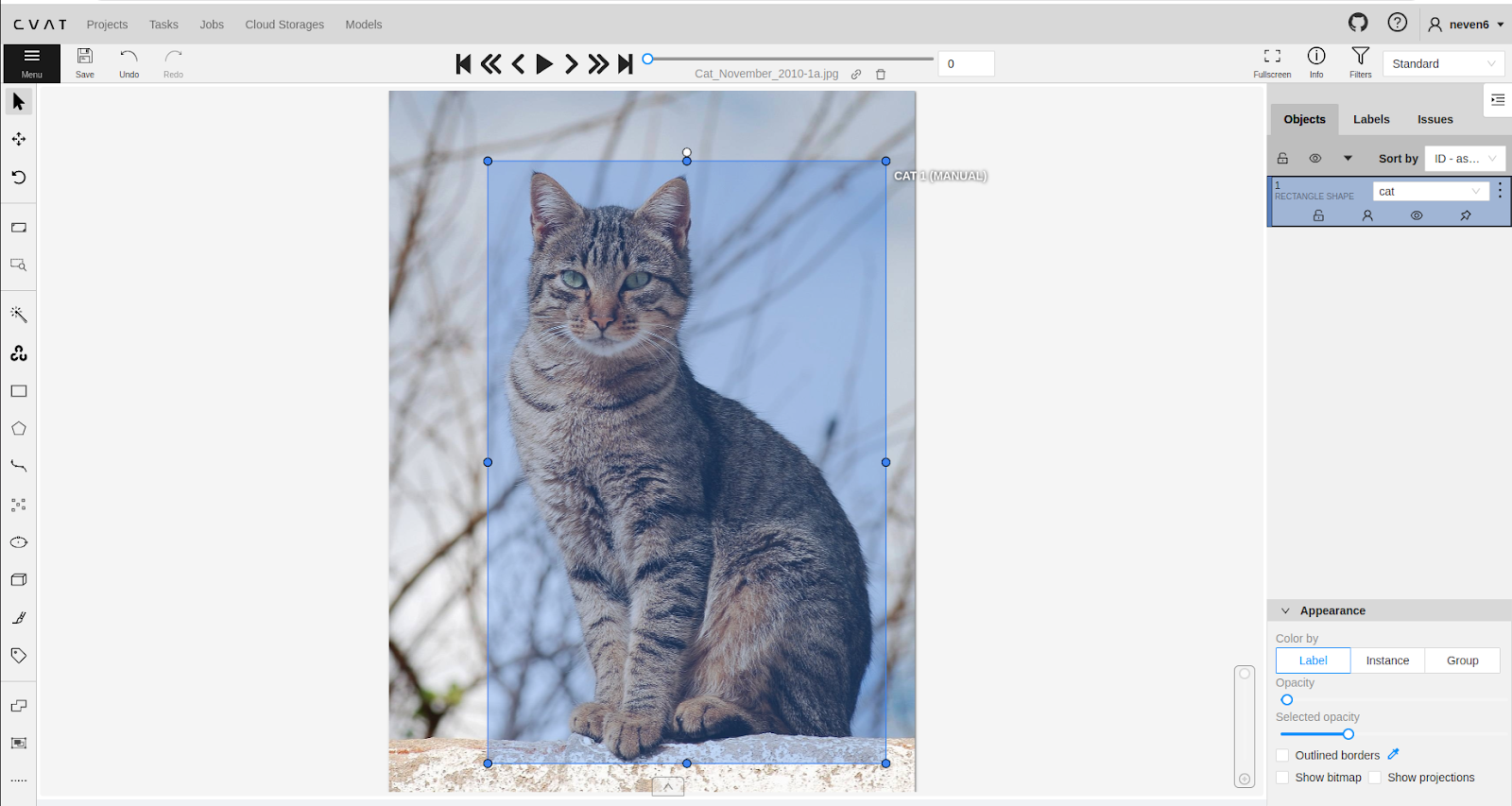

One of the ML-based tools available for QA is FiftyOne, an open-source toolkit for building high-quality data sets and computer vision models. For manual QA, human annotators can use tools like CVAT to improve efficiency. Relying on human annotators is the most expensive and least desirable option, and should only be done if automatic annotators don't produce high-quality labels.

When validating data processing efforts, the level of detail required for labeling should match the needs of the task at hand. Some applications may require precision down to the pixel level, while others may be more forgiving.
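For bounding boxes, one common way to make "precise enough" concrete is intersection over union (IoU) against a trusted reference label, with the acceptance threshold tuned per task. A minimal sketch: the 0.5 default is illustrative (it is the value used by the PASCAL VOC detection benchmark), not a Cortex setting, and the function names are hypothetical.

```python
def iou(a: dict, b: dict) -> float:
    """Intersection over union of two x1/y1/x2/y2 boxes (0.0 to 1.0)."""
    ix1, iy1 = max(a["x1"], b["x1"]), max(a["y1"], b["y1"])
    ix2, iy2 = min(a["x2"], b["x2"]), min(a["y2"], b["y2"])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # 0 when boxes don't overlap
    area_a = (a["x2"] - a["x1"]) * (a["y2"] - a["y1"])
    area_b = (b["x2"] - b["x1"]) * (b["y2"] - b["y1"])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def labels_agree(predicted: dict, reference: dict, threshold: float = 0.5) -> bool:
    """Same class and enough overlap; raise the threshold for pixel-precise tasks."""
    return predicted["classname"] == reference["classname"] and iou(predicted, reference) >= threshold
```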

QA is a crucial step in building high-quality neural network models; it verifies that these models are effective and reliable. Whether you use manual, rule-based, or ML-based QA, it is important to be diligent and thorough to ensure the best outcome.

Cortex Walkthrough: From URL to Labeled Image

Cortex uses both manual and automated processes to collect and label the data and to perform QA; however, the goal is to reduce manual work by feeding human outputs to rule-based and ML algorithms.
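The article doesn't describe how Cortex decides which labels need a human. A common pattern for reducing manual work is to route model detections by confidence: accept confident ones automatically, queue uncertain ones for review, and discard the rest. The reviewed labels can then be fed back into training. A minimal sketch under that assumption; both thresholds and the function name are hypothetical.

```python
def route_detections(detections: list[dict], auto_accept: float = 0.9, discard_below: float = 0.3):
    """Split model detections into auto-accepted, needs-review, and discarded lists."""
    accepted, review, discarded = [], [], []
    for det in detections:
        if det["conf"] >= auto_accept:
            accepted.append(det)  # trusted without human input
        elif det["conf"] >= discard_below:
            review.append(det)  # uncertain: send to a human annotator
        else:
            discarded.append(det)  # too unlikely to be worth reviewing
    return accepted, review, discarded
```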

Cortex samples consist of URLs that reference the original images, which are scraped from the Common Crawl database. Data points are labeled with object bounding boxes. Object classes are MS COCO classes, like "person," "car," or "traffic light." To use the data set, users must download the images they are interested in from the given URLs using img2dataset. In the context of Cortex, labels are called semantic metadata because they give the data meaning and expose useful knowledge hidden in every single data sample (e.g., which objects appear in an image and where).
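Because Cortex samples store URLs rather than pixels, the download step starts from a URL list. A minimal sketch that turns Cortex-style records (dictionaries with a `url` key, as in the responses shown later) into the one-URL-per-line text file that img2dataset reads by default; the helper names are hypothetical.

```python
def url_list_text(samples: list[dict]) -> str:
    """One URL per line, de-duplicated while keeping the original order."""
    urls = dict.fromkeys(s["url"] for s in samples)
    return "".join(url + "\n" for url in urls)


def write_url_list(samples: list[dict], path: str = "urls.txt") -> None:
    """Write the URL list to disk for img2dataset."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(url_list_text(samples))
```

The resulting file can then be passed to img2dataset, e.g., `img2dataset --url_list=urls.txt --output_folder=images`; see the img2dataset documentation for resizing and output-format options.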

The Cortex data set also includes a filtering feature that enables users to search the database and retrieve specific images. Additionally, it offers an interactive image labeling feature that allows users to provide links to images that are not indexed in the database. The system then dynamically annotates the images and presents the semantic metadata and structural attributes for the image at that specific URL.

Code Examples and Implementation

Cortex lives on RapidAPI and allows free semantic metadata and structural attribute extraction for any URL on the internet. The paid version allows users to get batches of scraped labeled data from the internet using filters for bulk image labeling.

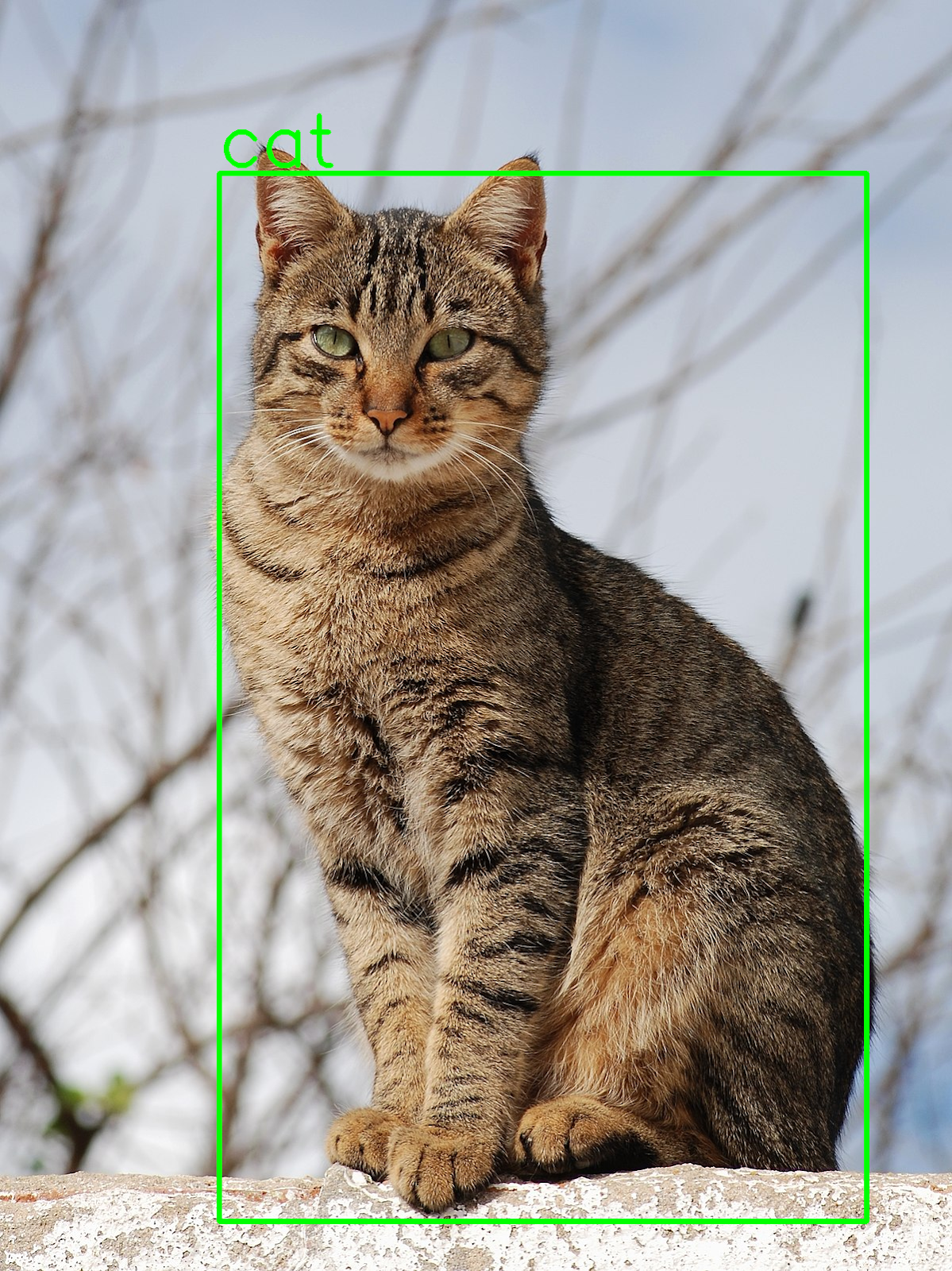

The Python code example presented in this section demonstrates how to use Cortex to get semantic metadata and structural attributes for a given URL and draw the bounding boxes of the detected objects. As the system evolves, functionality will be expanded to include more attributes, such as a histogram, pose estimation, and so on. Every additional attribute adds value to the processed data and makes it suitable for more use cases.

```python
import json

import cv2
import numpy as np
import requests

cortex_url = 'https://cortex-api.piculjantechnologies.ai/upload'

img_url = ('https://upload.wikimedia.org/wikipedia/commons/thumb/4/4d/'
           'Cat_November_2010-1a.jpg/1200px-Cat_November_2010-1a.jpg')

# Download the image and decode the raw bytes into an OpenCV array.
req = requests.get(img_url)
req.raise_for_status()
img_as_np = np.frombuffer(req.content, dtype=np.uint8)
img = cv2.imdecode(img_as_np, cv2.IMREAD_UNCHANGED)

# Ask Cortex for the semantic metadata and structural attributes of the image.
data = {'url_or_id': img_url}
response = requests.post(cortex_url, data=json.dumps(data),
                         headers={'Content-Type': 'application/json'})
response.raise_for_status()
content = json.loads(response.content)

# object_analysis is a list of lists; the first inner list holds the detections.
object_analysis = content['object_analysis'][0]

for obj in object_analysis:
    x1, y1, x2, y2 = obj['x1'], obj['y1'], obj['x2'], obj['y2']
    classname = obj['classname']
    # Draw a green box (OpenCV uses BGR order) and write the class name above it.
    cv2.rectangle(img, (x1, y1), (x2, y2), (0, 255, 0), 5)
    cv2.putText(img, classname, (x1, y1 - 10),
                cv2.FONT_HERSHEY_SIMPLEX, 3, (0, 255, 0), 5)

cv2.imwrite('visualization.png', img)
```

The contents of the response look like this:

```json
{
  "_id": "PT::63b54db5e6ca4c53498bb4e5",
  "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/4/4d/Cat_November_2010-1a.jpg/1200px-Cat_November_2010-1a.jpg",
  "datetime": "2023-01-04 09:58:14.082248",
  "object_analysis_processed": "true",
  "pose_estimation_processed": "false",
  "face_analysis_processed": "false",
  "type": "image",
  "height": 1602,
  "width": 1200,
  "hash": "d0ad50c952a9a153fd7b0f9765dec721f24c814dbe2ca1010d0b28f0f74a2def",
  "object_analysis": [
    [
      {
        "classname": "cat",
        "conf": 0.9876543879508972,
        "x1": 276,
        "y1": 218,
        "x2": 1092,
        "y2": 1539
      }
    ]
  ],
  "label_quality_estimation": 2.561230587616592e-7
}
```

Let's take a closer look and outline what each piece of information can be used for:

- `_id` is the internal identifier used for indexing the data.

- `url` is the URL of the image, which allows us to see where the image originated and to potentially filter images from certain sources.

- `datetime` shows the date and time when the image was first seen by the process. This data can be crucial for time-sensitive applications, e.g., when processing images from a real-time source such as a livestream.

- The `object_analysis_processed`, `pose_estimation_processed`, and `face_analysis_processed` flags indicate whether the labels for object analysis, pose estimation, and face analysis have been created.

- `type` denotes the type of data (e.g., image, audio, video). Since Cortex is currently limited to image data, this field will be expanded to other types of data in the future.

- `height` and `width` are structural attributes that give the height and width of the sample.

- `hash` contains the hashed key of the sample.

- `object_analysis` contains the object analysis labels and displays crucial semantic metadata, such as the class name, the level of confidence (`conf`), and the bounding box coordinates (`x1`, `y1`, `x2`, `y2`).

- `label_quality_estimation` contains the label quality score, ranging in value from 0 (poor quality) to 1 (good quality). The score is calculated using ML-based QA for labels.
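A minimal sketch of consuming these fields, assuming the response shape shown above. It checks the `object_analysis_processed` flag (a string, not a JSON boolean, in the sample responses), flattens the nested `object_analysis` lists, and keeps detections whose `conf` clears a threshold. The function name and the 0.5 default are illustrative choices.

```python
import json


def confident_detections(response_text: str, min_conf: float = 0.5) -> list[dict]:
    """Flatten object_analysis and keep detections with conf >= min_conf."""
    content = json.loads(response_text)
    # In the sample responses, the *_processed flags are the strings
    # "true"/"false" rather than JSON booleans.
    if content.get("object_analysis_processed") != "true":
        return []
    return [
        det
        for group in content.get("object_analysis", [])  # a list of lists
        for det in group
        if det["conf"] >= min_conf
    ]
```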

Running the first Python snippet saves visualization.png: the downloaded cat photo with a green bounding box drawn around the detected cat and the class name "cat" written above the box.

The next code snippet shows how to use the paid version of Cortex to filter and get URLs of images scraped from the internet:

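```python
import json

import requests

url = 'https://cortex4.p.rapidapi.com/get-labeled-data'

# 'q' is a MongoDB-style filter: images with at least one "cat" detection
# (object_analysis is a list of lists, hence the nested $elemMatch)
# and a width greater than 100 pixels. 'page' selects the results page.
querystring = {
    'page': '1',
    'q': '{"object_analysis": {"$elemMatch": {"$elemMatch": {"classname": "cat"}}}, "width": {"$gt": 100}}',
}

headers = {
    'X-RapidAPI-Key': 'SIGN-UP-FOR-KEY',  # replace with your RapidAPI key
    'X-RapidAPI-Host': 'cortex4.p.rapidapi.com',
}

response = requests.request('GET', url, headers=headers, params=querystring)
response.raise_for_status()
content = json.loads(response.content)
```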

The endpoint uses a MongoDB Query Language query (`q`) to filter the database based on semantic metadata and reads the page number from the query parameter named `page`.
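Writing the `q` string by hand is error-prone because of the nested quoting. A small sketch that builds it with `json.dumps` instead; with Python's default separators it reproduces the example query string exactly. The function name is hypothetical.

```python
import json


def cortex_query(classname: str, min_width: int) -> str:
    """Build the MongoDB-style filter string passed in the q parameter."""
    query = {
        # object_analysis is a list of lists, hence the nested $elemMatch.
        "object_analysis": {"$elemMatch": {"$elemMatch": {"classname": classname}}},
        "width": {"$gt": min_width},
    }
    return json.dumps(query)
```

Usage: `querystring = {'page': '1', 'q': cortex_query('cat', 100)}`.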

The example query returns images containing object analysis semantic metadata with the class name cat and a width greater than 100 pixels. The content of the response looks like this:

```json
{
  "output": [
    {
      "_id": "PT::639339ad4552ef52aba0b372",
      "url": "https://teamglobalasset.com/rtp/PP/31.png",
      "datetime": "2022-12-09 13:35:41.733010",
      "object_analysis_processed": "true",
      "pose_estimation_processed": "false",
      "face_analysis_processed": "false",
      "source": "commoncrawl",
      "type": "image",
      "height": 234,
      "width": 325,
      "hash": "bf2f1a63ecb221262676c2650de5a9c667ef431c7d2350620e487b029541cf7a",
      "object_analysis": [
        [
          {
            "classname": "cat",
            "conf": 0.9602264761924744,
            "x1": 245,
            "y1": 65,
            "x2": 323,
            "y2": 176
          },
          {
            "classname": "dog",
            "conf": 0.8493766188621521,
            "x1": 68,
            "y1": 18,
            "x2": 255,
            "y2": 170
          }
        ]
      ],
      "label_quality_estimation": 3.492028982676312e-18
    }, ... <up to 25 data points in total>
  ],
  "length": 1454
}
```

The output contains up to 25 data points on a given page, including semantic metadata, structural attributes, and information about the source from which the image was scraped (`commoncrawl` in this case). It also exposes the total length of the query result (here, 1,454 matching data points) in the `length` key.
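Since each page holds at most 25 data points, `length` tells a client how many pages to request. A minimal paging sketch, assuming pages are numbered from 1 (as the example's `'page': '1'` suggests) and that every page carries `output` and `length`. `fetch_page` is a hypothetical callable, for example a wrapper around the `requests` call above that returns the decoded JSON for a page number.

```python
import math

PAGE_SIZE = 25  # the article states that each page holds up to 25 data points


def page_count(total: int, page_size: int = PAGE_SIZE) -> int:
    """Number of pages needed to cover `total` results."""
    return math.ceil(total / page_size)


def fetch_all(fetch_page, max_pages=None) -> list:
    """Collect 'output' items from every page, using 'length' to know when to stop."""
    first = fetch_page(1)
    items = list(first["output"])
    pages = page_count(first["length"])
    if max_pages is not None:
        pages = min(pages, max_pages)  # cap paid API calls during experiments
    for page in range(2, pages + 1):
        items.extend(fetch_page(page)["output"])
    return items
```

For the example query, `page_count(1454)` is 59 pages.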

Foundation Models and ChatGPT Integration

Foundation models, or AI models trained on a large amount of unlabeled data through self-supervised learning, have revolutionized the field of AI since their introduction in 2018. Foundation models can be further fine-tuned for specialized purposes (e.g., mimicking a certain person's writing style) using small amounts of labeled data, allowing them to be adapted to a variety of different tasks.

Cortex's labeled data sets can be used as a reliable source of data to make pretrained models an even better starting point for a wide variety of tasks. Such models are one step beyond foundation models, which are pretrained in a self-supervised manner on labels derived from the raw data itself. By leveraging vast amounts of data labeled by Cortex, AI models can be pretrained more effectively and produce more accurate results when fine-tuned. What sets Cortex apart from other solutions is its scale and diversity: the data set is constantly growing, and new data points with diverse labels are added regularly. At the time of publication, the data set contained more than 20 million data points.

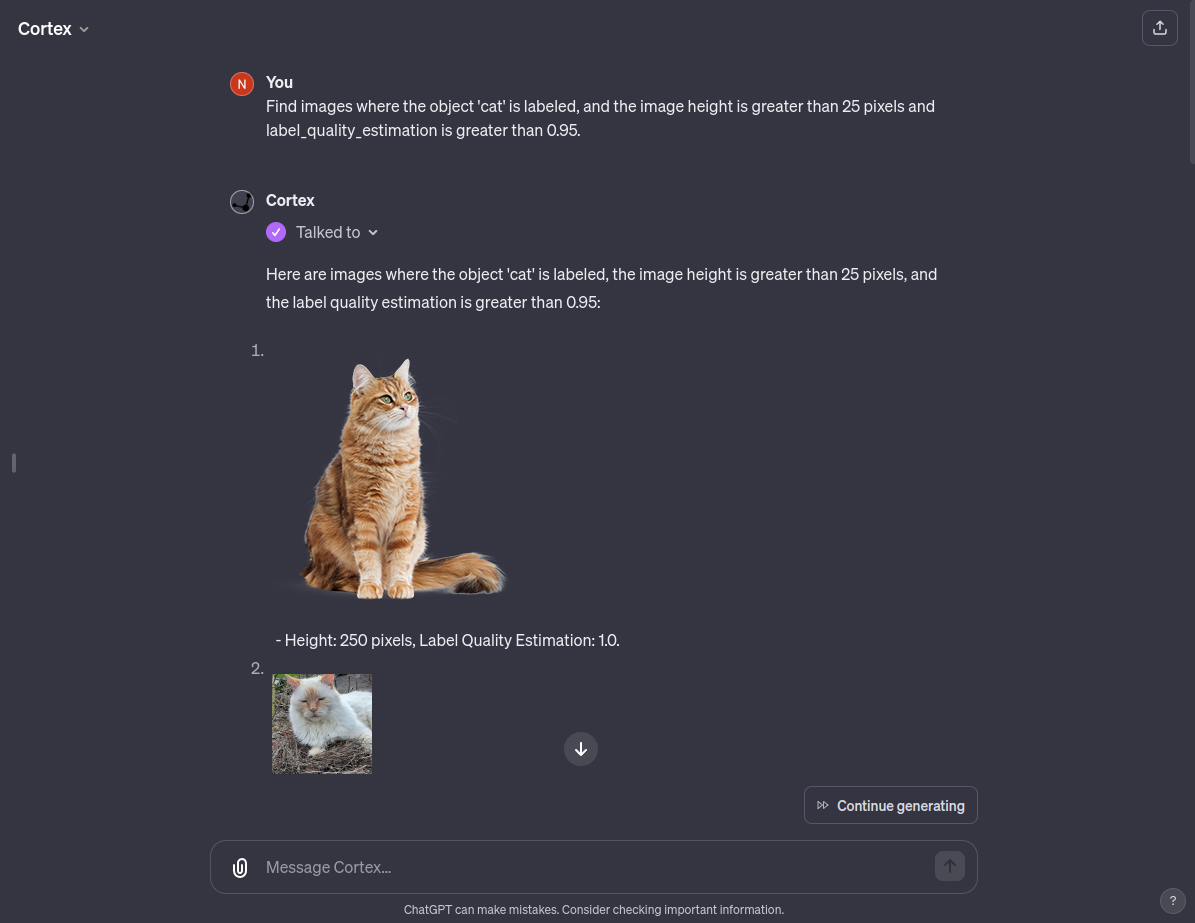

Cortex also offers a customized ChatGPT chatbot that gives users conversational access to a comprehensive database of meticulously labeled data. This functionality extends ChatGPT's capabilities by giving it access to both semantic and structural metadata for images, and we plan to extend it to data beyond images.

In its current state, the customized ChatGPT can be asked to list images containing certain objects that take up most of the image's space, or images containing multiple objects. It can understand deep semantics and search for specific types of images based on a simple prompt. With future refinements that will introduce more diverse object classes to Cortex, the custom GPT could act as a powerful image search chatbot.
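The article doesn't say how the custom GPT decides that an object "takes up most of the image." One plausible criterion, sketched here purely as an assumption, compares the area of the largest box of a class with the image area computed from the `width` and `height` attributes. The function names and the 0.5 cutoff are hypothetical.

```python
def largest_box_fraction(sample: dict, classname: str) -> float:
    """Fraction of the image area covered by the largest box of a class."""
    image_area = sample["width"] * sample["height"]
    areas = [
        (d["x2"] - d["x1"]) * (d["y2"] - d["y1"])
        for group in sample["object_analysis"]  # a list of lists of detections
        for d in group
        if d["classname"] == classname
    ]
    return max(areas, default=0) / image_area


def dominant(samples: list[dict], classname: str, min_fraction: float = 0.5) -> list[str]:
    """URLs of samples where one object of the class covers at least min_fraction."""
    return [s["url"] for s in samples if largest_box_fraction(s, classname) >= min_fraction]
```

For the Wikimedia cat photo above, the cat box covers roughly 56% of the 1200×1602 image, so it would qualify; the cat in the 325×234 Common Crawl image covers only about 11%.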

Image Data Labeling as the Backbone of AI Systems

We are surrounded by large amounts of data, but unprocessed raw data is often irrelevant from a training perspective and needs to be refined to build successful AI systems. Cortex tackles this challenge by helping transform vast quantities of raw data into useful data sets. The ability to quickly refine raw data reduces reliance on third-party data and services, speeds up training, and enables the creation of more accurate, customized AI models.

The system currently returns semantic metadata for object analysis along with a quality estimate, but it will eventually support face analysis, pose estimation, and visual embeddings. There are also plans to support modalities other than images, such as video, audio, and text data. The system currently returns width and height as structural attributes, and it will support a pixel histogram as well.

As AI systems become more commonplace, demand for quality data is bound to grow, and the way we collect and process data will evolve. Current AI solutions are only as good as the data they are trained on, and they can be extremely effective and powerful when meticulously trained on large amounts of quality data. The ultimate goal is to use Cortex to index as much publicly available data as possible and assign semantic metadata and structural attributes to it, creating a valuable repository of the high-quality labeled data needed to train the AI systems of tomorrow.

The editorial team of the Toptal Engineering Blog extends its gratitude to Shanglun Wang for reviewing the code samples and other technical content presented in this article.

All data set images and sample images courtesy of Pičuljan Technologies.