Detection engineers and threat hunters understand that targeting adversary behaviors is an essential part of an effective detection strategy (think Pyramid of Pain). Yet, inherent in targeting analytics on adversary behaviors is that malicious behavior will often enough overlap with benign behavior in your environment, especially as adversaries try to blend in and increasingly live off the land. Imagine you’re preparing to deploy a behavioral analytic to augment your detection strategy. Doing so could include custom development, trying out a new Sigma rule, or new behavioral detection content from your security information and event management (SIEM) vendor. Perhaps you’re considering automating a previous hunt, but unfortunately you find that the target behavior is common in your environment.

Is this a bad detection opportunity? Not necessarily. What can you do to make the analytic outputs manageable and not overwhelm the alert queue? It’s often said that you must tune the analytic for your environment to reduce the false positive rate. But can you do it without sacrificing analytic coverage? In this post, I discuss a process for tuning and related work you can do to make such analytics more viable in your environment. I also briefly discuss correlation, an alternative and complementary means to manage noisy analytic outputs.

Tuning the Analytic

As you’re developing and testing the analytic, you’re inevitably assessing the following key questions, the answers to which ultimately dictate the need for tuning:

- Does the analytic correctly identify the target behavior and its variations?

- Does the analytic identify other behavior different than the intention?

- How common is the behavior in your environment?

Here, let’s assume the analytic is accurate and fairly robust in order to focus on the last question. Given these assumptions, let’s depart from the colloquial use of the term false positive and instead use benign positive. This term refers to benign true positive events in which the analytic correctly identifies the target behavior, but the behavior reflects benign activity.

If the behavior basically never happens, or happens only occasionally, then the number of outputs will typically be manageable. You might accept those small numbers and proceed to documenting and deploying the analytic. However, in this post, the target behavior is common in your environment, which means you must tune the analytic to avoid overwhelming the alert queue and to maximize the potential signal of its outputs. At this point, the basic objective of tuning is to reduce the number of results produced by the analytic. There are generally two ways to do this:

- Filter out the noise of benign positives (our focus here).

- Adjust the specificity of the analytic.

While not the focus of this post, let’s briefly discuss adjusting the specificity of the analytic. Adjusting specificity means narrowing the view of the analytic, which involves adjusting its telemetry source, logical scope, and/or environmental scope. However, there are coverage tradeoffs associated with doing this. While there’s always a balance to be struck due to resource constraints, in general it’s better (for detection robustness and durability) to cast a wide net; that is, choose telemetry sources and construct analytics that broadly identify the target behavior across the broadest swath of your environment. Essentially, you are choosing to accept a larger number of possible results in order to avoid false negatives (i.e., completely missing potentially malicious instances of the target behavior). Therefore, it’s preferable to first focus tuning efforts on filtering out benign positives rather than adjusting specificity, if feasible.

Filtering Out Benign Positives

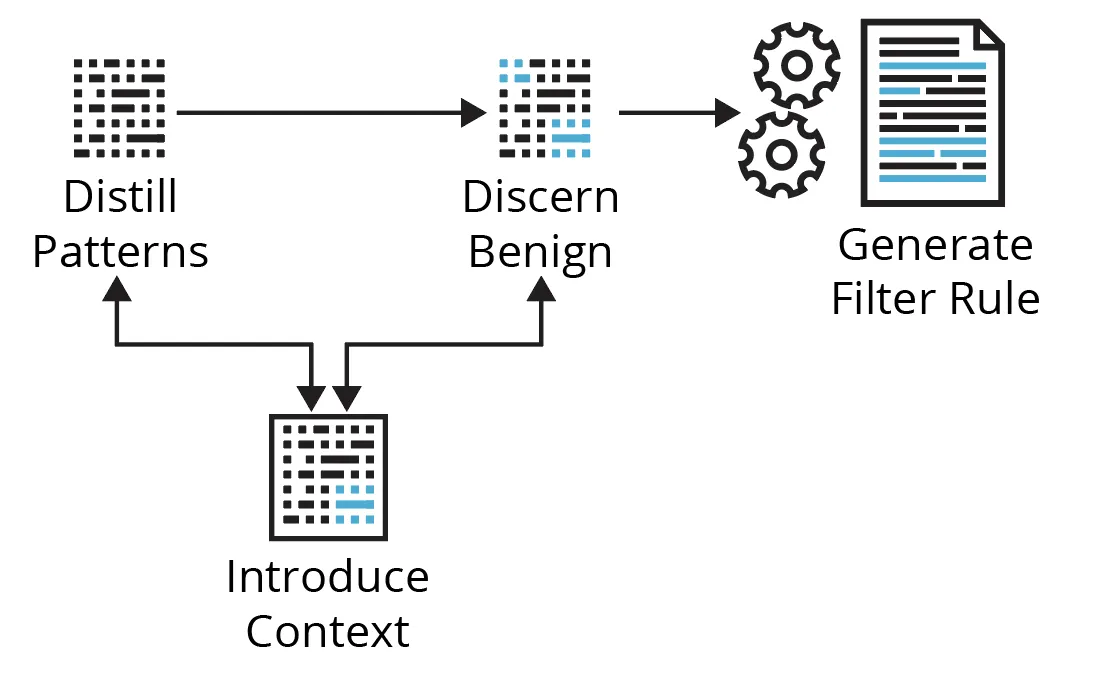

Working the analytic during the last, say, week of manufacturing telemetry, you might be introduced with a desk of quite a few outcomes. Now what? Determine 1 under reveals the cyclical course of we’ll stroll by means of utilizing a few examples focusing on Kerberoasting and Non-Normal Port strategies.

Determine 1: A Fundamental Course of for Filtering Out Benign Positives

Distill Patterns

Dealing with numerous analytic results doesn’t necessarily mean you have to track down each one individually or have a filter for each result; the sheer volume makes that impractical. Hundreds of results can potentially be distilled to a few filters, depending on the available context. Here, you’re looking to explore the data to get a sense of the top entities involved, the variety of relevant contextual values (context cardinality), how often these change (context velocity), and which relevant fields may be summarized. Start with the entities or values associated with the most results; that is, try to address the largest chunks of related events first.
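A minimal Python sketch of this kind of stacking follows. It assumes the analytic results have been exported as a list of dictionaries keyed by field name; the values are made up for illustration. For each field, it reports the number of distinct values (context cardinality) and the share of all results covered by that field’s few most common values.

```python
from collections import Counter

def stack_fields(results, fields, top_n=3):
    """For each field: distinct-value count (cardinality), the top_n most common
    values, and the share of all results those top values cover."""
    total = len(results)
    summary = {}
    for field in fields:
        counts = Counter(r.get(field) for r in results)
        top = counts.most_common(top_n)
        summary[field] = {
            "cardinality": len(counts),
            "top_values": top,
            "top_share": sum(c for _, c in top) / total if total else 0.0,
        }
    return summary

# Hypothetical Kerberoasting results: many accounts and clients, few services.
results = [
    {"AccountName": f"user{i}", "ClientAddress": f"10.0.0.{i}",
     "ServiceName": "legacy-svc-a" if i % 4 else "legacy-svc-b"}
    for i in range(20)
]

for field, s in stack_fields(results, ["AccountName", "ClientAddress", "ServiceName"]).items():
    print(field, s["cardinality"], round(s["top_share"], 2))
# AccountName 20 0.15
# ClientAddress 20 0.15
# ServiceName 2 1.0
```

Here ServiceName stands out: two values cover every result, so it is the first place to look for a filter, while AccountName and ClientAddress are spread thin.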

Examples

- Kerberoasting: Say this Sigma rule returns results with many different AccountNames and ClientAddresses (high context cardinality), but most results are associated with relatively few ServiceNames (of certain legacy devices; low context cardinality) and TicketOptions. You expand the search to the last 30 days and find the ServiceNames and TicketOptions are much the same (low context velocity), but other relevant fields have more and/or different values (high context velocity). You’d focus on these ServiceNames and/or TicketOptions, confirm it’s expected/known activity, then address a big chunk of the results with a single filter against those ServiceNames.

- Non-Standard Port: In this example, you find there’s high cardinality and high velocity in just about every event/network flow field, except for the service/application label, which indicates that only SSL/TLS is being used on non-standard ports. Again, you expand the search and see many different source IPs that could be summarized by a single Classless Inter-Domain Routing (CIDR) block, thus abstracting the source IP into a piece of low-cardinality, low-velocity context. You’d focus on this apparent subnet, trying to understand what it is and any related controls around it, confirm it’s expected and/or known activity, then filter accordingly.
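Abstracting many source IPs into one CIDR block can be checked with Python’s standard `ipaddress` module. The sketch below uses a hypothetical helper and made-up addresses: it starts from a /32 around the first address and widens it one prefix bit at a time until the network covers every address (it assumes at least one address is supplied).

```python
import ipaddress

def smallest_covering_cidr(ips):
    """Return the smallest single IPv4 CIDR block that contains every address."""
    addrs = [ipaddress.IPv4Address(ip) for ip in ips]
    net = ipaddress.IPv4Network(f"{addrs[0]}/32")
    # Widen the network one prefix bit at a time until it covers all addresses.
    while not all(a in net for a in addrs):
        net = net.supernet()
    return net

# Hypothetical source IPs seen in the Non-Standard Port results.
sources = ["10.2.16.5", "10.2.17.200", "10.2.19.33", "10.2.18.7"]
net = smallest_covering_cidr(sources)
print(net, net.num_addresses)  # 10.2.16.0/22 1024
```

Check `num_addresses` before trusting the result: a single outlying address can widen the block far beyond the subnet you actually care about.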

Fortunately, there are usually patterns in the data that you can focus on. You generally want to target context with low cardinality and low velocity because it affects the long-term effectiveness of your filters. If you can help it, you don’t want to be constantly updating your filter rules because they rely on context that changes too often. However, sometimes there are many high-cardinality, high-velocity fields, and nothing quite stands out from basic stacking, counting, or summarizing. What if you can’t narrow the results as is? There are too many results to investigate each one individually. Is this just a bad detection opportunity? Not yet.
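Context velocity has no single standard formula. One simple approximation, assumed here purely for illustration, is the share of a field’s distinct values in a recent window that never appeared in an earlier window. The field values below are hypothetical.

```python
def context_velocity(earlier, recent, field):
    """Share of distinct `field` values in the recent window that never appeared
    in the earlier window: 0.0 means stable context, 1.0 means all-new values."""
    seen_before = {e.get(field) for e in earlier}
    current = {e.get(field) for e in recent}
    if not current:
        return 0.0
    return len(current - seen_before) / len(current)

# Hypothetical results from two consecutive weeks.
earlier = [{"AccountName": f"user{i}", "ServiceName": "svc-a" if i % 2 else "svc-b"}
           for i in range(1, 6)]
recent = [{"AccountName": f"user{i}", "ServiceName": "svc-a" if i % 2 else "svc-b"}
          for i in range(4, 10)]

print(context_velocity(earlier, recent, "ServiceName"))            # 0.0
print(round(context_velocity(earlier, recent, "AccountName"), 2))  # 0.67
```

A field that scores near 0.0 across several windows (ServiceName here) is a better anchor for a long-lived filter than one that keeps turning over (AccountName).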

Discern Benign

The primary concern in this activity is quickly gathering sufficient context to disposition analytic outputs with an acceptable level of confidence. Context is any data or information that meaningfully contributes to understanding and/or interpreting the circumstances/conditions in which an event/alert occurs, in order to discern behavior as benign, malicious, or suspicious/unknown. Table 1 below describes the most common types of context that you will have or seek to gather.

Table 1: Common Types of Context

| Type | Description | Typical Sources | Example(s) |
|---|---|---|---|
| Event | basic properties/parameters of the event that help define it | raw telemetry, log fields | process creation fields, network flow fields, process network connection fields, Kerberos service ticket request fields |
| Environmental | data/information about the monitored environment or assets in the monitored environment | CMDB/ASM/IPAM, ticket system, documentation, the brains of other analysts, admins, engineers, system/network owners | business processes, network architecture, routing, proxies, NAT, policies, approved change requests, services used/exposed, known vulnerabilities, asset ownership, hardware, software, criticality, location, enclave, etc. |
| Entity | data/information about the entities (e.g., identity, source/destination host, process, file) involved in the event | IdP/IAM, EDR, CMDB/ASM/IPAM, third-party APIs | • enriching a public IP address with geolocation, ASN data, passive DNS, open ports/protocols/services, certificate information • enriching an identity with description, type, role, privileges, department, location, etc. |
| Historical | • how often the event happens • how often the event happens with certain characteristics or entities, and/or • how often there’s a relationship between select entities involved in the event | baselines | • profiling the last 90 days of DNS requests per top-level domain (TLD) • profiling the last 90 days of HTTP on non-standard ports • profiling process lineage |
| Threat | • attack (sub-)technique(s) • example procedure(s) • likely attack stage • specific and/or type of threat actor/malware/tool known to exhibit the behavior • reputation, scoring, etc. | threat intelligence platform (TIP), MITRE ATT&CK, threat intelligence APIs, documentation | reputation/detection scores, Sysmon-modular annotations; ADS example |
| Analytic | • how and why this event was raised • any relevant values produced/derived by the analytic itself • the analytic logic, known/common benign example(s) • recommended follow-on actions • scoring, etc. | analytic processing, documentation, runbooks | `"event": { "processing": { "time_since_flow_start": "0:04:08.641718", "duration": 0.97 }, "reason": "SEEN_BUT_RARELY_OCCURRING", "consistency_score": 95 }` |
| Correlation | data/information from related events/alerts (discussed below in Aggregating the Signal) | SIEM/SOAR, custom correlation layer | risk-based alerting, correlation rules |
| Open-source | data/information commonly available via Internet search engines | Internet | vendor documentation stating what service names they use, what other people have seen regarding TCP/2323 |

Upon initial review, you have the event context, but you typically end up looking for environmental, entity, and/or historical context to ideally answer (1) which identities and software caused this activity, and (2) is it legitimate? That is, you are looking for information about the provenance, expectations, controls, assets, and history regarding the observed activity. Yet, that context may not be available, or may be too slow to acquire. What if you can’t tell from the event context? How else might you tell whether these events are benign? Is this just a bad detection opportunity? Not yet. It depends on your options for gathering additional context and the speed of those options.

Introduce Context

If there aren’t obvious patterns and/or the available context is insufficient, you can work to introduce patterns/context via automated enrichments and baselines. Enrichments may come from internal or external data sources and are usually automated lookups based on some entity in the event (e.g., identity, source/destination host, process, file, etc.). Even when enrichment opportunities are scarce, you can always introduce historical context by building baselines from the data you’re already collecting.

With the multitude of monitoring and detection recommendations using terms such as new, rare, unexpected, uncommon, unusual, abnormal, anomalous, never been seen before, unexpected patterns and metadata, does not commonly occur, etc., you’ll need to be building and maintaining baselines anyway. No one else can do this for you: baselines will always be specific to your environment, which is both a challenge and an advantage for defenders.

Kerberoasting

Unless you have programmatically accessible and up-to-date internal data sources to enrich the AccountName (identity), ServiceName/ServiceID (identity), and/or ClientAddress (source host; typically RFC1918), there’s not much enrichment to do except, perhaps, to translate TicketOptions, TicketEncryptionType, and FailureCode to friendly names/values. However, you can baseline these events. For example, you might track the following over a rolling 90-day period:

- % days seen per ServiceName per AccountName → identify new/rare/common user-service relationships

- mean and mode of unique ServiceNames per AccountName per time period → identify an unusual number of services for which a user makes service ticket requests

You could expand the search (only to develop a baseline metric) to all relevant TicketEncryptionTypes and additionally track:

- % days seen per TicketEncryptionType per ServiceName → identify new/rare/common service-encryption type relationships

- % days seen per TicketOptions per AccountName → identify new/rare/common user-ticket options relationships

- % days seen per TicketOptions per ServiceName → identify new/rare/common service-ticket options relationships
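The baseline metrics above could be computed along the following lines. This is a sketch under stated assumptions: each event carries a `day` (a `datetime.date`) alongside the raw Windows fields, the helper names are hypothetical, and the account, service, and encryption-type values are made up. In practice this would more likely run as a scheduled job in your SIEM or data platform than in memory.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean, mode

WINDOW_DAYS = 90  # rolling baseline period

def pct_days_seen(events, key_fields, as_of, window_days=WINDOW_DAYS):
    """Percent of days in the window on which each key_fields combination was seen."""
    start = as_of - timedelta(days=window_days - 1)
    days_seen = defaultdict(set)
    for e in events:
        if start <= e["day"] <= as_of:
            days_seen[tuple(e[f] for f in key_fields)].add(e["day"])
    return {k: 100.0 * len(d) / window_days for k, d in days_seen.items()}

def distinct_per_day_stats(events, group_field, count_field):
    """Mean and mode of distinct count_field values per group_field per day.

    Only days on which the group appears are counted (no zero days).
    """
    per_day = defaultdict(set)
    for e in events:
        per_day[(e[group_field], e["day"])].add(e[count_field])
    counts = defaultdict(list)
    for (group, _day), values in per_day.items():
        counts[group].append(len(values))
    return {g: (mean(c), mode(c)) for g, c in counts.items()}

# Hypothetical events: a service account hits one legacy service daily,
# while alice requests two services on a single day.
as_of = date(2024, 3, 31)
events = [
    {"day": as_of - timedelta(days=n), "AccountName": "svc_backup",
     "ServiceName": "legacy-nas", "TicketEncryptionType": "0x17"}
    for n in range(90)
]
events += [
    {"day": as_of, "AccountName": "alice", "ServiceName": "legacy-nas", "TicketEncryptionType": "0x12"},
    {"day": as_of, "AccountName": "alice", "ServiceName": "sql-prod", "TicketEncryptionType": "0x12"},
]

user_service = pct_days_seen(events, ("ServiceName", "AccountName"), as_of)
service_etype = pct_days_seen(events, ("TicketEncryptionType", "ServiceName"), as_of)
services_per_user = distinct_per_day_stats(events, "AccountName", "ServiceName")
print(user_service[("legacy-nas", "svc_backup")])  # 100.0
```

The same `pct_days_seen` call covers each "% days seen per X per Y" relationship in the lists above; only the `key_fields` tuple changes.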

Non-Standard Port

Enrichment of the destination IP addresses (all public) is a good starting point, because there are many free and commercial data sources (already codified and programmatically accessible via APIs) regarding Internet-accessible assets. You enrich analytic results with geolocation, ASN, passive DNS, hosted ports, protocols, and services, certificate information, major cloud provider information, etc. You now find that all of the connections are going to a few different netblocks owned by a single ASN, and they all correspond to a single cloud provider’s public IP ranges for a compute service in two different regions. Moreover, passive DNS indicates numerous development-related subdomains, all on a familiar parent domain. Certificate information is consistent over time (which suggests something about testing) and has familiar organizational identifiers.
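As an illustration of the major-cloud-provider enrichment, the sketch below tags a destination IP with provider, service, and region by matching it against published ranges. AWS, for example, publishes its ranges as a JSON file with a `prefixes` list whose entries carry `ip_prefix`, `region`, and `service`; the inline data here only mimics that shape and uses RFC 5737 documentation prefixes, not real AWS ranges. The `dip` field name and the helper functions are hypothetical.

```python
import ipaddress

# Stand-in shaped like the "prefixes" list of a provider's published ranges.
# These are RFC 5737 documentation prefixes, NOT real AWS ranges; load the
# provider's current file in practice. Real files can list one prefix under
# several services, so a real lookup needs a rule for choosing among matches.
PUBLISHED_RANGES = {
    "prefixes": [
        {"ip_prefix": "198.51.100.0/24", "region": "us-west-1", "service": "EC2"},
        {"ip_prefix": "203.0.113.0/24", "region": "us-west-2", "service": "EC2"},
    ]
}

def build_index(doc, provider="aws"):
    """Parse published prefixes into (network, provider, service, region) tuples."""
    return [
        (ipaddress.ip_network(p["ip_prefix"]), provider, p["service"], p["region"])
        for p in doc["prefixes"]
    ]

def enrich_dip(event, index):
    """Return a copy of the event with cloud fields for its destination IP (dip)."""
    out = dict(event, major_csp=False)
    dip = ipaddress.ip_address(event["dip"])
    for net, provider, service, region in index:
        if dip in net:
            out.update(major_csp=True, cloud_provider=provider,
                       cloud_service=service, cloud_region=region)
            break
    return out

index = build_index(PUBLISHED_RANGES)
enriched = enrich_dip({"sip": "10.2.16.5", "dip": "203.0.113.40"}, index)
print(enriched["major_csp"], enriched["cloud_region"])  # True us-west-2
```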

Newness is easily derived: the relationship is either historically there or it isn’t. However, you’ll need to determine and set a threshold in order to say what is considered rare and what is considered common. Having some codified and programmatically accessible internal data sources available would not only add potentially valuable context but also expand the options for baseline relationships and metrics. The art and science of baselining involves determining thresholds and which baseline relationships/metrics will provide meaningful signal.
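Once a % days seen baseline exists, turning it into new/rare/common labels is a small step. In the sketch below, the 5% rare cutoff and the baseline values are placeholders, not recommendations; the right threshold depends on your environment and data.

```python
RARE_PCT = 5.0  # placeholder cutoff; derive yours from your own baseline data

def classify_relationship(key, baseline_pct, rare_pct=RARE_PCT):
    """Label a relationship new, rare, or common from its baseline % days seen."""
    pct = baseline_pct.get(key)
    if pct is None:
        return "new"     # never observed during the baseline window
    if pct < rare_pct:
        return "rare"
    return "common"

# Hypothetical % days seen per (ServiceName, AccountName) over 90 days.
baseline = {("legacy-nas", "svc_backup"): 100.0, ("sql-prod", "alice"): 1.1}

for key in [("legacy-nas", "svc_backup"), ("sql-prod", "alice"), ("sql-prod", "mallory")]:
    print(key, classify_relationship(key, baseline))
```

Newness needs no threshold at all (the key is simply absent), which is why it is the easiest of the three labels to derive.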

Overall, with some extra engineering and analysis work, you’re in a much better position to distill patterns, discern which events are (probably) benign, and make some filtering decisions. Moreover, whether you build automated enrichments and/or baseline checks into the analytic pipeline, or build runbooks to gather this context at the point of triage, this work feeds directly into supporting detection documentation and improves the overall speed and quality of triage.

Generate Filter Rule

You want to apply filters wisely without having to manage too many rules, but you want to do so without creating rules that are too broad (which risks filtering out malicious events, too). With filter/allow list rules, rather than be overly broad, it’s better to lean toward a more precise description of the benign activity, even if that means creating and managing a few more rules.

Kerberoasting

The baseline information helps you understand that these few ServiceNames do in fact have a common and consistent history of occurring with the other relevant entities/properties of the events shown in the results. You determine these are OK to filter out, and you do so with a single, simple filter against those ServiceNames.

Non-Standard Port

Enrichments have provided valuable context to help discern benign activity and, importantly, have also enabled the abstraction of the destination IP, a high-cardinality, high-velocity field, from many different, changing values to a few broader, more static values described by ASN, cloud, and certificate information. Given this context, you determine these connections are probably benign and move to filter them out. See Table 2 below for example filter rules, where app=443 indicates SSL/TLS and major_csp=true indicates the destination IP of the event is in one of the published public IP ranges of a major cloud service provider:

| Type | Filter Rule | Reason |
|---|---|---|
| Too broad | `sip=10.2.16.0/22; app=443; asn=16509; major_csp=true` | You don’t want to allow all non-standard port encrypted connections from the subnet to all cloud provider public IP ranges in the entire ASN. |
| Still too broad | `sip=10.2.16.0/22; app=443; asn=16509; major_csp=true; cloud_provider=aws; cloud_service=EC2; cloud_region=us-west-1,us-west-2` | You don’t know the nature of the internal subnet. You don’t want to allow all non-standard port encrypted traffic to be able to hit just any EC2 IPs across two entire regions. Cloud IP usage changes as different customers spin resources up and down. |
| Best option | `sip=10.2.16.0/22; app=443; asn=16509; major_csp=true; cloud_provider=aws; cloud_service=EC2; cloud_region=us-west-1,us-west-2; cert_subject_dn='L=Earth\|O=Your Org\|OU=DevTest\|CN=dev.your.org'` | It’s specific to the observed testing activity for your org, but broad enough that it shouldn’t change much. You’ll still know about any other non-standard port traffic that doesn’t match all of these characteristics. |

An important corollary here is that the filtering mechanism/allow list needs to be applied in the right place and be flexible enough to handle the context that sufficiently describes the benign activity. A simple filter on ServiceNames relies only on data in the raw events and can be implemented as an extra condition in the analytic itself. In contrast, the Non-Standard Port filter rule relies on data from the raw events as well as enrichments, in which case those enrichments need to have been performed and be available in the data before the filtering mechanism is applied. It’s not always sufficient to filter out benign positives using only fields available in the raw events. There are various ways you could account for these filtering scenarios. The capabilities of your detection and response pipeline, and the way it’s engineered, will affect your ability to tune effectively at scale.
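The sketch below shows one way to evaluate an allow-list rule like the best option in Table 2, assuming enrichment has already added the cloud and certificate fields to each event. The dictionary rule encoding, field names, and helper functions are hypothetical. Note that an event missing any field the rule needs is kept rather than filtered, which is why enrichment must happen before filtering; the same matcher also handles a raw-field rule such as the Kerberoasting ServiceName filter.

```python
import ipaddress

# Hypothetical encoding of Table 2's "Best option" rule. All conditions must
# match (logical AND); list values mean "any of these".
BEST_OPTION_RULE = {
    "sip": "10.2.16.0/22",  # CIDR match on the source IP
    "app": [443],
    "asn": [16509],
    "major_csp": [True],
    "cloud_provider": ["aws"],
    "cloud_service": ["EC2"],
    "cloud_region": ["us-west-1", "us-west-2"],
    "cert_subject_dn": ["L=Earth|O=Your Org|OU=DevTest|CN=dev.your.org"],
}

def matches(rule, event):
    """True only if every field the rule names is present and allowed."""
    for field, allowed in rule.items():
        if field not in event:
            return False  # enrichment missing: the rule cannot match, so keep the event
        if field == "sip":
            if ipaddress.ip_address(event["sip"]) not in ipaddress.ip_network(allowed):
                return False
        elif event[field] not in allowed:
            return False
    return True

def apply_filters(events, rules):
    """Drop events that match any allow-list rule; keep everything else."""
    return [e for e in events if not any(matches(r, e) for r in rules)]

dev = {"sip": "10.2.17.4", "app": 443, "asn": 16509, "major_csp": True,
       "cloud_provider": "aws", "cloud_service": "EC2", "cloud_region": "us-west-2",
       "cert_subject_dn": "L=Earth|O=Your Org|OU=DevTest|CN=dev.your.org"}
unknown_cert = dict(dev, cert_subject_dn="CN=unfamiliar.example")
not_enriched = {"sip": "10.2.17.4", "app": 443}

# The DevTest connection is filtered; the other two still reach triage.
kept = apply_filters([dev, unknown_cert, not_enriched], [BEST_OPTION_RULE])

# A raw-field rule (hypothetical ServiceNames) uses the same matcher.
kerb_rule = {"ServiceName": ["legacy-svc-a", "legacy-svc-b"]}
```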

Aggregating the Signal

So far, I’ve talked about a process for tuning a single analytic. Now, let’s briefly discuss a correlation layer, which operates across all analytic outputs. Sometimes an identified behavior just isn’t a strong enough signal in isolation; it may only become a strong signal in relation to other behaviors identified by other analytics. Correlating the outputs from multiple analytics can tip the signal enough to meaningfully populate the alert queue as well as provide valuable additional context.

Correlation is often entity-based, such as aggregating analytic outputs based on a shared entity like an identity, host, or process. These correlated alerts are typically prioritized via scoring, where you assign a risk score to each analytic output. In turn, correlated alerts will have an aggregate score that is usually the sum, or some normalized value, of the scores of the associated analytic outputs. You’d sort correlated alerts by aggregate score, where higher scores indicate entities with the most, or most severe, analytic findings.
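A minimal sketch of entity-based correlation follows, assuming each analytic output already carries an entity field and a risk score. The analytic names, scores, `host` field, and surfacing threshold of 100 are all hypothetical. It sums scores per host, surfaces only hosts whose aggregate reaches the threshold, and sorts them by aggregate score.

```python
from collections import defaultdict

def correlate(outputs, entity_field="host", threshold=100):
    """Group analytic outputs by a shared entity, sum their risk scores, and
    return entities at or above threshold, highest aggregate score first."""
    by_entity = defaultdict(list)
    for o in outputs:
        by_entity[o[entity_field]].append(o)
    alerts = []
    for entity, items in by_entity.items():
        total = sum(o["risk_score"] for o in items)  # simplest aggregation: a plain sum
        if total >= threshold:
            alerts.append({
                "entity": entity,
                "aggregate_score": total,
                "analytics": sorted({o["analytic"] for o in items}),
            })
    return sorted(alerts, key=lambda a: a["aggregate_score"], reverse=True)

# Hypothetical analytic outputs with pre-assigned risk scores.
outputs = [
    {"host": "ws-12", "analytic": "non_standard_port", "risk_score": 20},
    {"host": "ws-12", "analytic": "kerberoasting", "risk_score": 40},
    {"host": "ws-12", "analytic": "new_admin_tool", "risk_score": 50},
    {"host": "ws-30", "analytic": "non_standard_port", "risk_score": 20},
]

for alert in correlate(outputs):
    print(alert["entity"], alert["aggregate_score"], alert["analytics"])
```

The lone non-standard-port output on ws-30 never reaches the queue by itself, yet the same analytic still contributes to ws-12’s aggregate. Weighting or normalizing scores would replace the plain sum.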

The outputs from your analytic don’t necessarily have to go directly to the main alert queue. Not every analytic output needs to be triaged. Perhaps the value of the analytic lies primarily in providing additional signal/context in relation to other analytic outputs. Because correlated alerts bubble up to analysts only when there’s a strong enough signal across multiple associated analytic outputs, correlation serves as an alternative and complementary means to make the volume of outputs from a noisy analytic less of a nuisance and overall outputs more manageable.

Improving Availability and Speed of Relevant Context

It all turns on context and the need to quickly gather sufficient context. Speed matters. Prior to operational deployment, the more quickly and confidently you can disposition analytic outputs, the more outputs you can deal with, the faster and better the tuning, the higher the potential signal of future analytic outputs, and the sooner you’ll have a viable analytic in place working for you. After deployment, the more quickly and confidently you can disposition analytic outputs, the faster and better the triage and the sooner appropriate responses can be pursued. In other words, the speed of gathering sufficient context directly affects your mean time to detect and mean time to respond. Conversely, barriers to quickly gathering sufficient context are barriers to tuning/triage; are barriers to viable, effective, and scalable deployment of proactive/behavioral security analytics; and are barriers to early warning and risk reduction. Consequently, anything you can do to improve the availability and/or speed of gathering relevant context is a worthwhile effort for your detection program. These things include:

- building and maintaining relevant baselines

- building and maintaining a correlation layer

- investing in automation by getting more contextual information (especially internal entity and environmental context) codified, made programmatically accessible, and integrated

- building relationships and tightening up security reporting/feedback loops with relevant stakeholders (a holistic people, process, and technology effort); consider something akin to these automated security bot use cases

- building relationships with security engineering and admins so they’re more willing to assist in tweaking the signal

- supporting data engineering, infrastructure, and processing for automated enrichments, baseline checks, and maintenance

- tweaking configurations for detection, e.g., deception engineering, this example with ticket events, etc.

- tweaking business processes for detection, e.g., hooks into certain approved change requests, admins always doing a little extra, specific thing to let you know it’s really them, etc.

Summary

Analytics targeting adversary behaviors will often enough require tuning for your environment because they identify both benign and malicious instances of that behavior. Just because a behavior may be common in your environment doesn’t necessarily mean it’s a bad detection opportunity or not worth the analytic effort. One of the primary ways of dealing with such analytic outputs, without sacrificing coverage, is using context (often more than is contained in the raw events) and flexible filtering to tune out benign positives. I advocate for detection engineers to perform most of this work, essentially conducting a data study and some pre-operational triage of their own analytic results. This work generally involves a cycle of evaluating analytic results to distill patterns, discerning benign behavior, introducing context as necessary, and finally filtering out benign events. We used a couple of basic examples to show how that cycle might play out.

If the immediate context is insufficient to distill patterns and/or discern benign behavior, detection engineers can almost always supplement it with automated enrichments and/or baselines. Automated enrichments are more common for external, Internet-accessible assets and may be harder to come by for internal entities, but baselines can typically be built using the data you’re already collecting. Plus, historical/entity-based context is some of the most useful context to have.

In seeking to produce viable, quality analytics, detection engineers should exhaust, or at least try, these options before dismissing an analytic effort or sacrificing its coverage. It’s extra work, but doing this work not only improves pre-operational tuning but also pays dividends after operational deployment, as analysts triage alerts/leads using the extra context and well-documented research. Analysts are then in a better position not only to identify and escalate findings but also to provide tuning feedback. Besides, tuning is a continuous process and a two-pronged effort between detection engineers and analysts, if only because threats and environments are not static.

The other primary way of dealing with such analytic outputs, again without sacrificing coverage, is by incorporating a correlation layer into your detection pipeline. Correlation is also more work because it adds another layer of processing, and you have to score analytic outputs. Scoring can be tricky because there are many things to consider, such as how risky each analytic output is in the grand scheme of things, if/how you should weight and/or boost scores to account for various circumstances (e.g., asset criticality, time), how you should normalize scores, whether you should calculate scores across multiple entities and which one takes precedence, etc. However, the benefits of correlation make it a worthwhile effort and a great option to help prioritize across all analytic outputs. It also effectively diminishes the problem of noisier analytics, since not every analytic output is meant to be triaged.

If you need help with any of these things, or would like to discuss your detection engineering journey, please contact us.