Image by Freepik

Large Language Models (LLMs) like OpenAI’s GPT and Mistral’s Mixtral are increasingly playing an important role in the development of AI-powered applications. The ability of these models to generate human-like results makes them the perfect assistants for content creation, code debugging, and other time-intensive tasks.

However, one common challenge faced when working with LLMs is the possibility of encountering factually incorrect information, popularly known as hallucinations. The reason for these occurrences isn’t far-fetched. LLMs are trained to provide satisfactory answers to prompts; in cases where they can’t provide one, they conjure one up. Hallucinations can also be influenced by the type of inputs and biases employed in training these models.

In this article, we will explore three research-backed advanced prompting techniques that have emerged as promising approaches to reducing the occurrence of hallucinations while improving the efficiency and speed of results produced by LLMs.

To better comprehend the improvements these advanced techniques bring, it’s important that we talk about the basics of prompt writing. Prompts in the context of AI (and in this article, LLMs) refer to a group of characters, words, tokens, or a set of instructions that guide the AI model as to the intent of the human user.

Prompt engineering refers to the art of creating prompts with the goal of better directing the behavior and resulting output of the LLM in question. By using different techniques to convey human intent better, developers can enhance models’ results in terms of accuracy, relevance, and coherence.

Here are some essential tips you should follow when crafting a prompt:

- Be concise

- Provide structure by specifying the desired output format

- Give references or examples if possible.

All these will help the model better understand what you need and increase the chances of getting a satisfactory answer.

Below is a good example that queries an AI model with a prompt using all the tips mentioned above:

Prompt = “You are an expert AI prompt engineer. Please generate a 2 sentence summary of the latest advancements in prompt generation, focusing on the challenges of hallucinations and the potential of using advanced prompting techniques to address these challenges. The output should be in markdown format.”
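As a minimal sketch, the tips above can be captured in a small helper that assembles a prompt from a role, a task, an output format, and optional examples. The function name `build_prompt` and its parameters are hypothetical, chosen only for illustration; the code builds a string and does not call any LLM API.

```python
def build_prompt(role, task, output_format, examples=None):
    """Assemble a prompt from a role, a task, a format, and optional examples.

    Hypothetical helper for illustration; it only builds a string.
    """
    parts = [f"You are {role}.", task.strip()]
    if examples:
        # Reference examples give the model a concrete target to imitate.
        parts.append("Examples:")
        parts.extend(f"- {example}" for example in examples)
    # Stating the output format gives the response a predictable structure.
    parts.append(f"The output should be in {output_format} format.")
    return "\n".join(parts)


prompt = build_prompt(
    role="an expert AI prompt engineer",
    task=(
        "Please generate a 2 sentence summary of the latest advancements "
        "in prompt generation, focusing on the challenges of hallucinations."
    ),
    output_format="markdown",
)
print(prompt)
```

Keeping the role, task, and format as separate arguments makes it easy to vary one element at a time and compare the model’s responses.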

However, following the essential tips discussed above doesn’t always guarantee optimal results, especially when dealing with complex tasks.

Leading researchers from prominent AI institutions like Microsoft and Google have invested a lot of resources into LLM optimization, i.e., actively studying the common causes of hallucinations and finding effective ways to address them. The following prompting techniques have been found to provide better, context-aware instructions to the studied LLMs, thereby increasing the chances of getting more relevant results and reducing the likelihood of getting inaccurate or nonsensical information.

Here are some examples of research-driven advanced prompting techniques:

1. Emotional Persuasion Prompting

A 2023 study by Microsoft researchers found that using emotional language and persuasive prompts, called “EmotionPrompts,” can improve LLM performance by over 10%.

This style adds a personal, emotional element to the given prompt, transforming the request into one that’s highly important with significant consequences for the results. It’s almost like talking to a human; using an emotional angle helps communicate the importance of the task, stimulating deeper focus and commitment. This strategy can be useful for tasks requiring higher problem-solving and creativity skills.

Let’s take a look at a simple example where emotion is used to enhance the prompt:

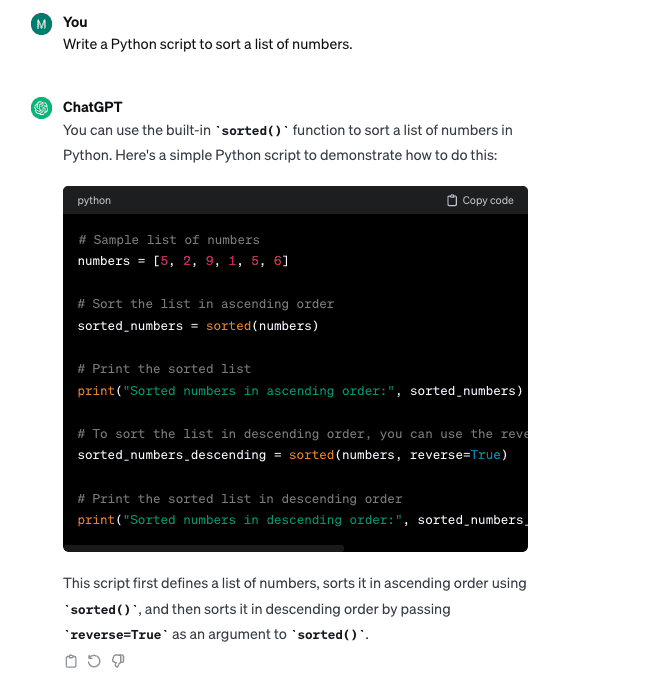

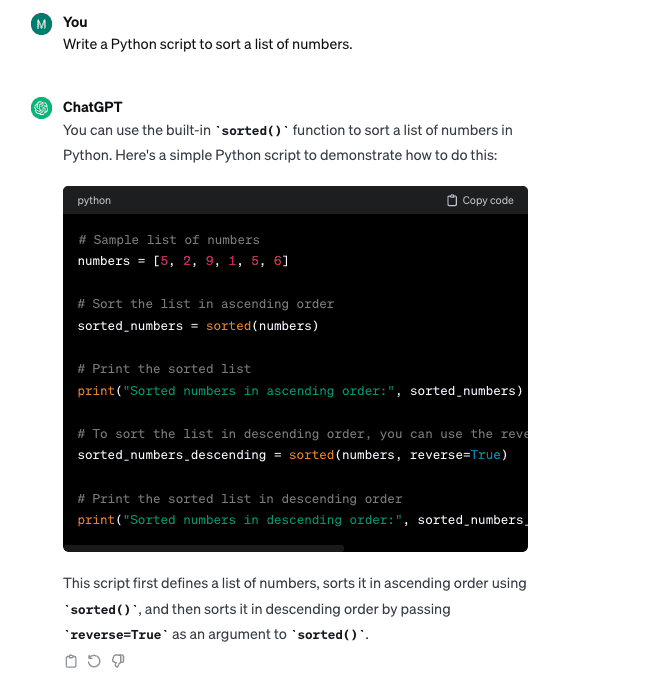

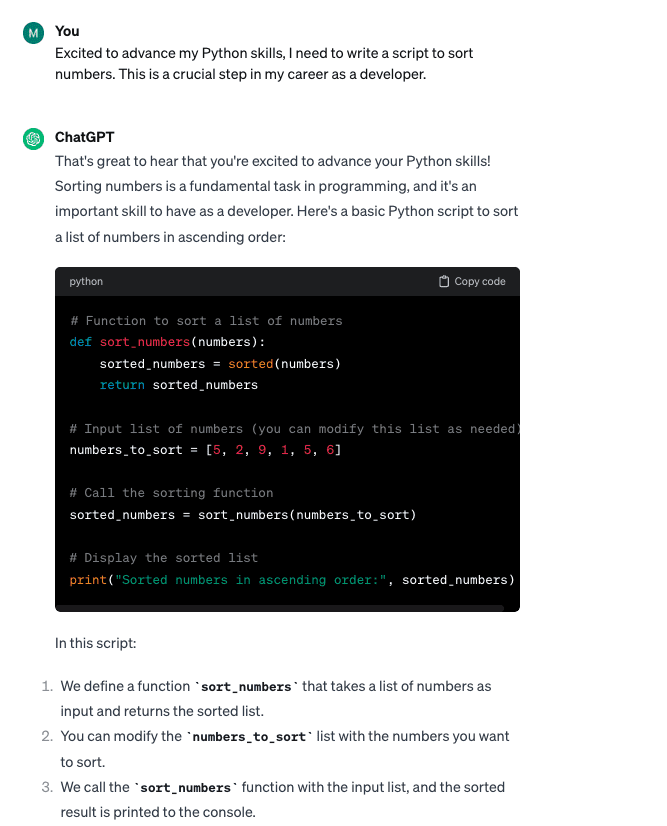

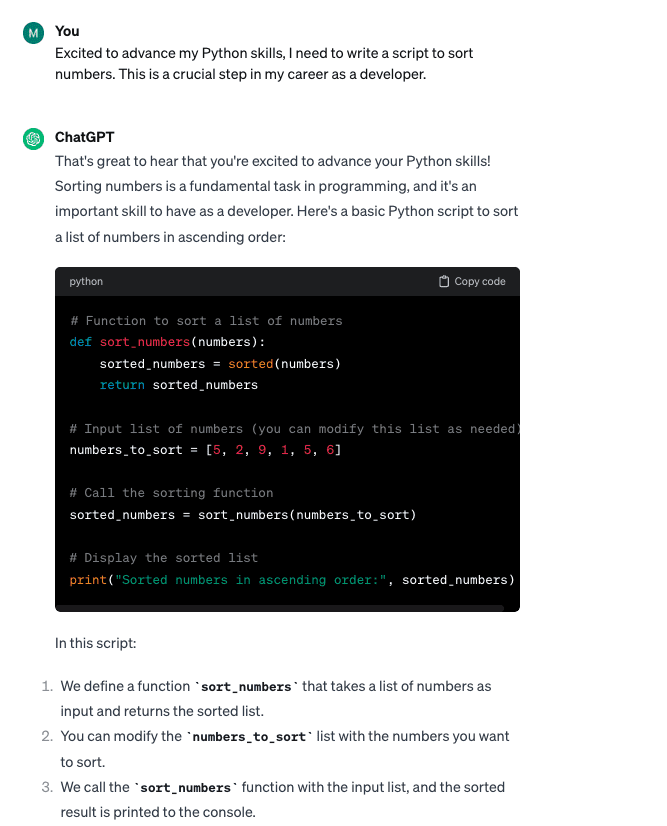

Basic Prompt: “Write a Python script to sort a list of numbers.”

Prompt with Emotional Persuasion: “Excited to advance my Python skills, I need to write a script to sort numbers. This is a crucial step in my career as a developer.”

While both prompt variations produced similar code results, the “EmotionPrompts” technique helped create cleaner code and provided additional explanations as part of the generated result.
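The idea of attaching an emotional cue to an otherwise plain request can be sketched as a small wrapper. The function `add_emotional_stimulus` is a hypothetical name, and the default stimulus simply reuses the sentence from the example above; neither is taken from the Microsoft study’s code.

```python
def add_emotional_stimulus(
    prompt,
    stimulus="This is a crucial step in my career as a developer.",
):
    """Append an emotional cue to a base prompt (illustrative helper only)."""
    base = prompt.strip()
    # Make sure the base prompt ends a sentence before the cue is appended.
    if not base.endswith((".", "!", "?")):
        base += "."
    return f"{base} {stimulus}"


basic_prompt = "Write a Python script to sort a list of numbers."
emotion_prompt = add_emotional_stimulus(basic_prompt)
print(emotion_prompt)
```

Because the cue is a separate argument, the same base prompt can be sent with and without it to compare the two responses side by side.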

Another interesting experiment by Finxter found that offering monetary tips to LLMs can also improve their performance, almost like appealing to a human’s financial incentive.

2. Chain-of-Thought Prompting

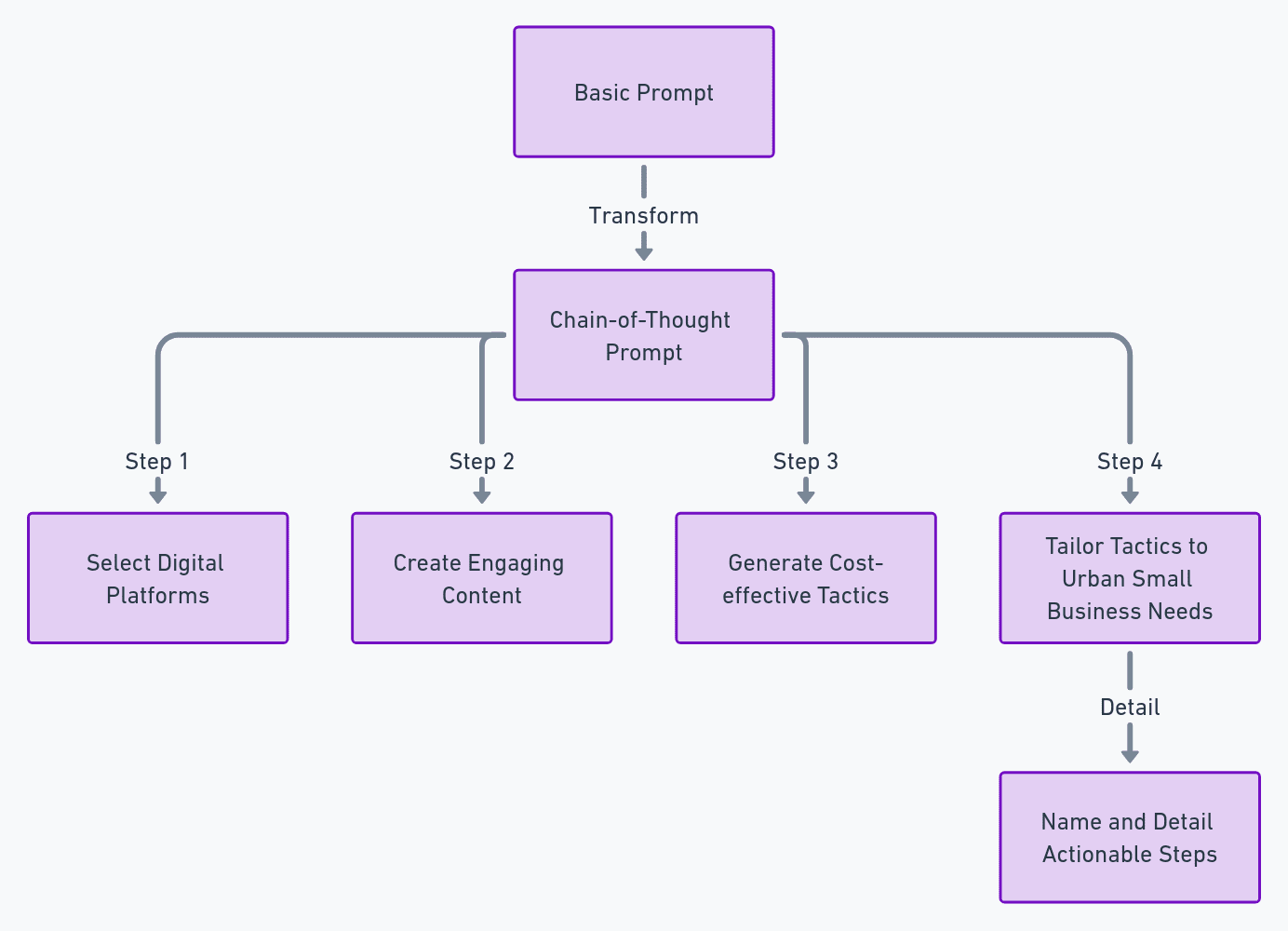

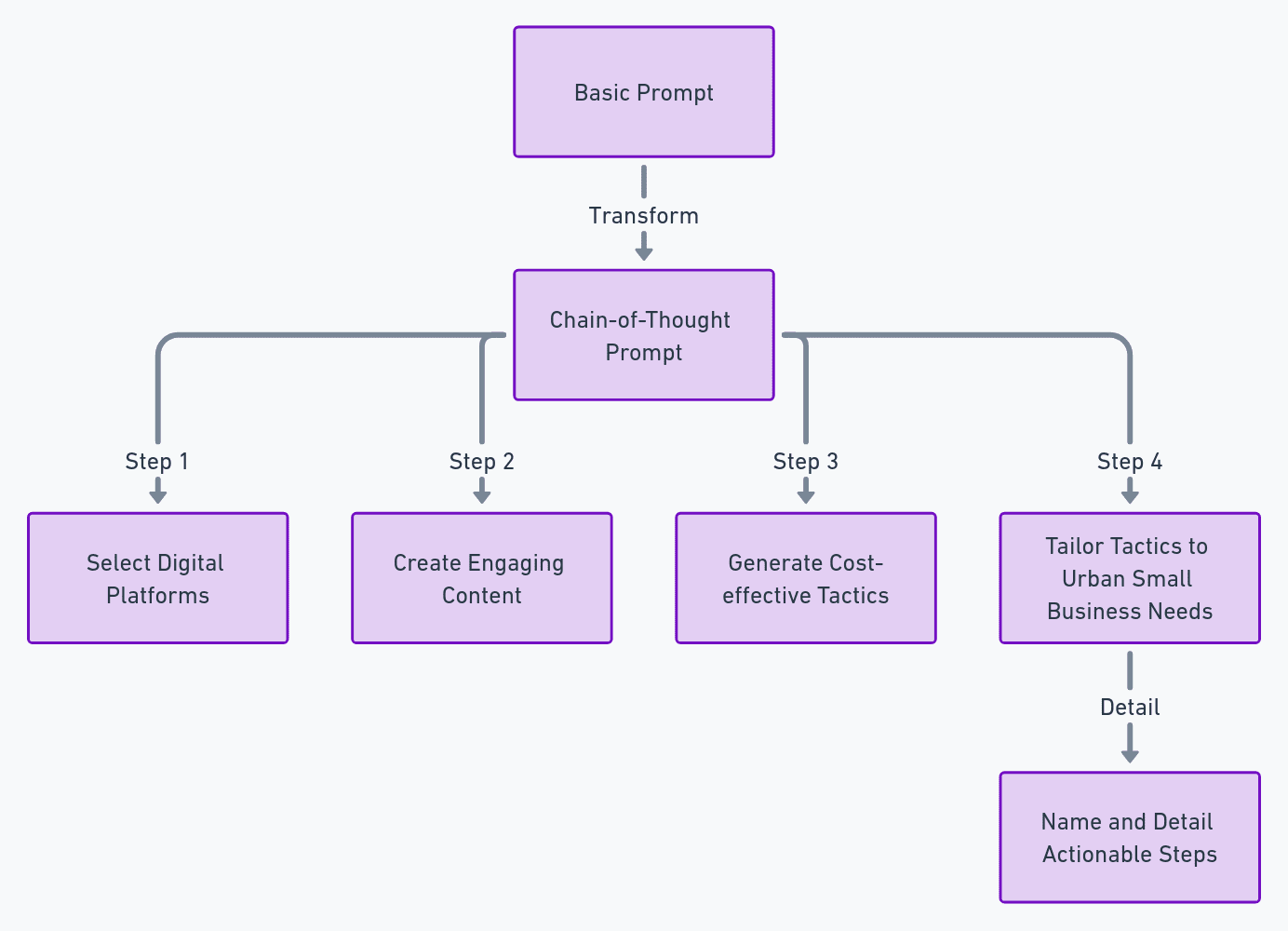

Another prompting technique found to be effective by a team of University of Pittsburgh researchers is the Chain-of-Thought style. This technique employs a step-by-step approach that walks the model through the desired output structure. This logical approach helps the model craft a more relevant and structured response to a complex task or question.

Here’s an example of how to create a Chain-of-Thought style prompt based on the given template (using OpenAI’s ChatGPT with GPT-4):

Basic Prompt: “Draft a digital marketing plan for a finance app aimed at small business owners in large cities.”

Chain-of-Thought Prompt:

“Outline a digital marketing strategy for a finance app for small business owners in large cities. Focus on:

- Selecting digital platforms that are popular among this business demographic.

- Creating engaging content like webinars or other relevant tools.

- Generating cost-effective tactics unique from traditional ads.

- Tailoring these tactics to urban small business needs in a way that increases customer conversion rates.

Name and detail each part of the plan with unique, actionable steps.”

At a cursory glance, the Chain-of-Thought prompt generated a more precise and actionable result.
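The step-by-step prompt above follows a simple pattern: a task sentence, a list of focus points, and a closing instruction. A minimal sketch of that pattern is shown here; `build_stepwise_prompt` and its parameter names are hypothetical and only assemble text in the same shape as the example.

```python
def build_stepwise_prompt(task, focus_points, closing_instruction):
    """Lay out a task as ordered focus points the model should work through.

    Hypothetical helper mirroring the structure of the example prompt.
    """
    lines = [f"{task} Focus on:", ""]
    # Each focus point becomes one bullet, in the order it should be handled.
    lines.extend(f"- {point}" for point in focus_points)
    lines.extend(["", closing_instruction])
    return "\n".join(lines)


marketing_prompt = build_stepwise_prompt(
    task=(
        "Outline a digital marketing strategy for a finance app "
        "for small business owners in large cities."
    ),
    focus_points=[
        "Selecting digital platforms that are popular among this business demographic.",
        "Creating engaging content like webinars or other relevant tools.",
        "Generating cost-effective tactics unique from traditional ads.",
        "Tailoring these tactics to urban small business needs.",
    ],
    closing_instruction="Name and detail each part of the plan with unique, actionable steps.",
)
print(marketing_prompt)
```

Listing the focus points as data rather than hard-coding them in a string makes it straightforward to reorder or swap steps when the output misses something.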

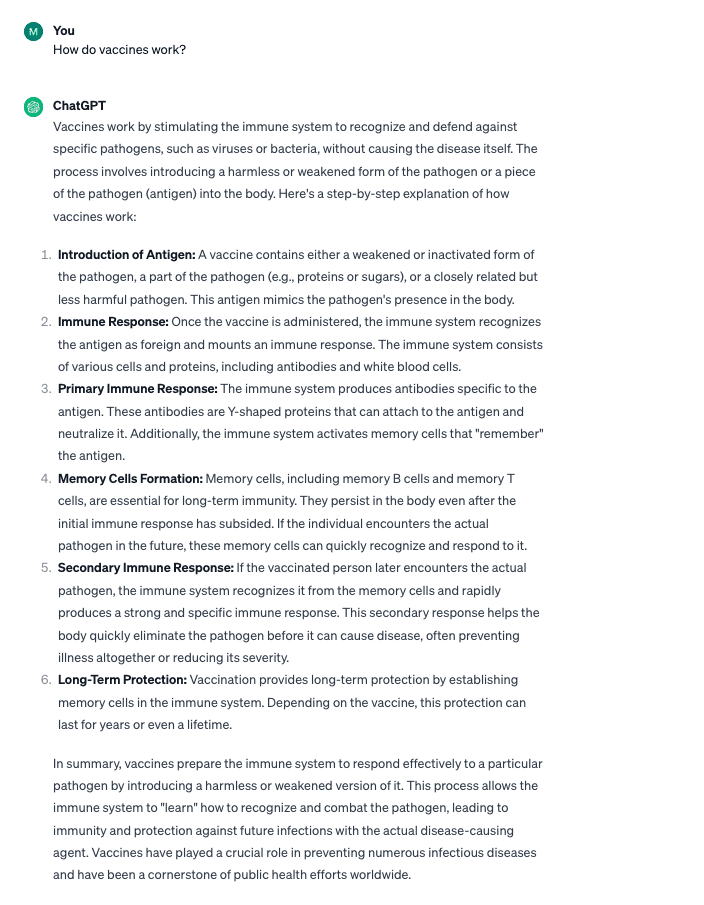

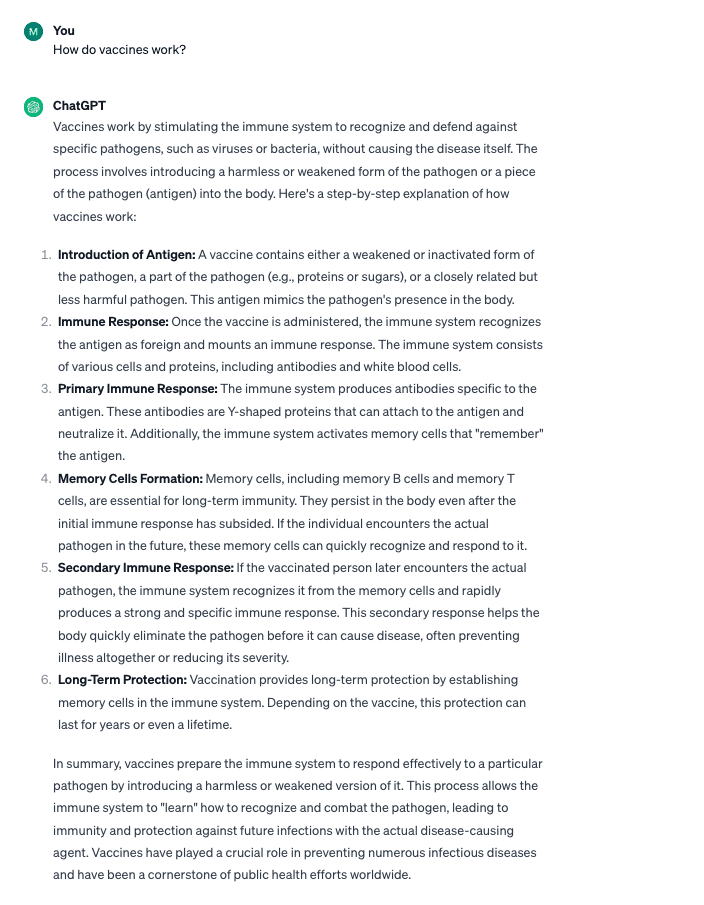

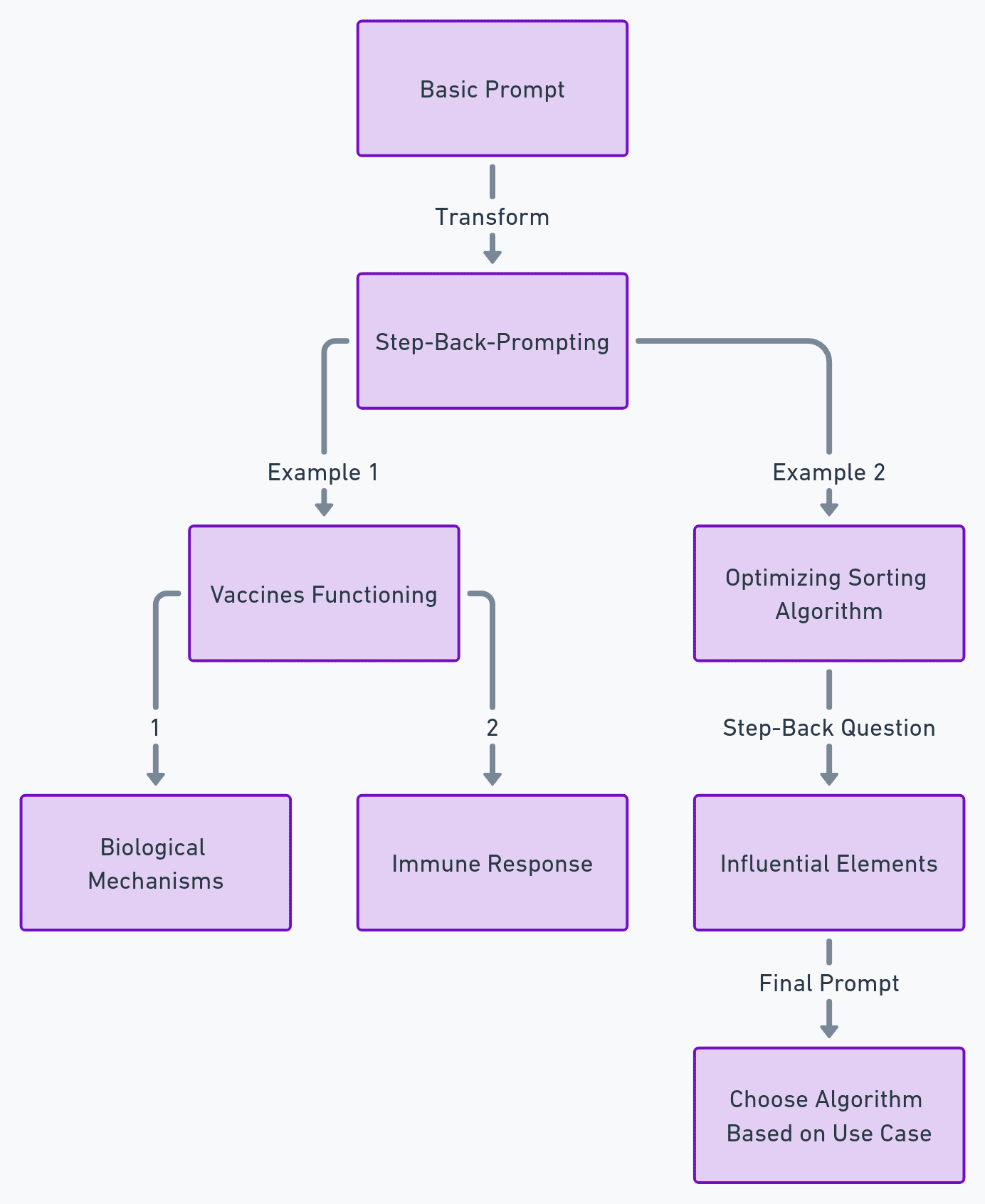

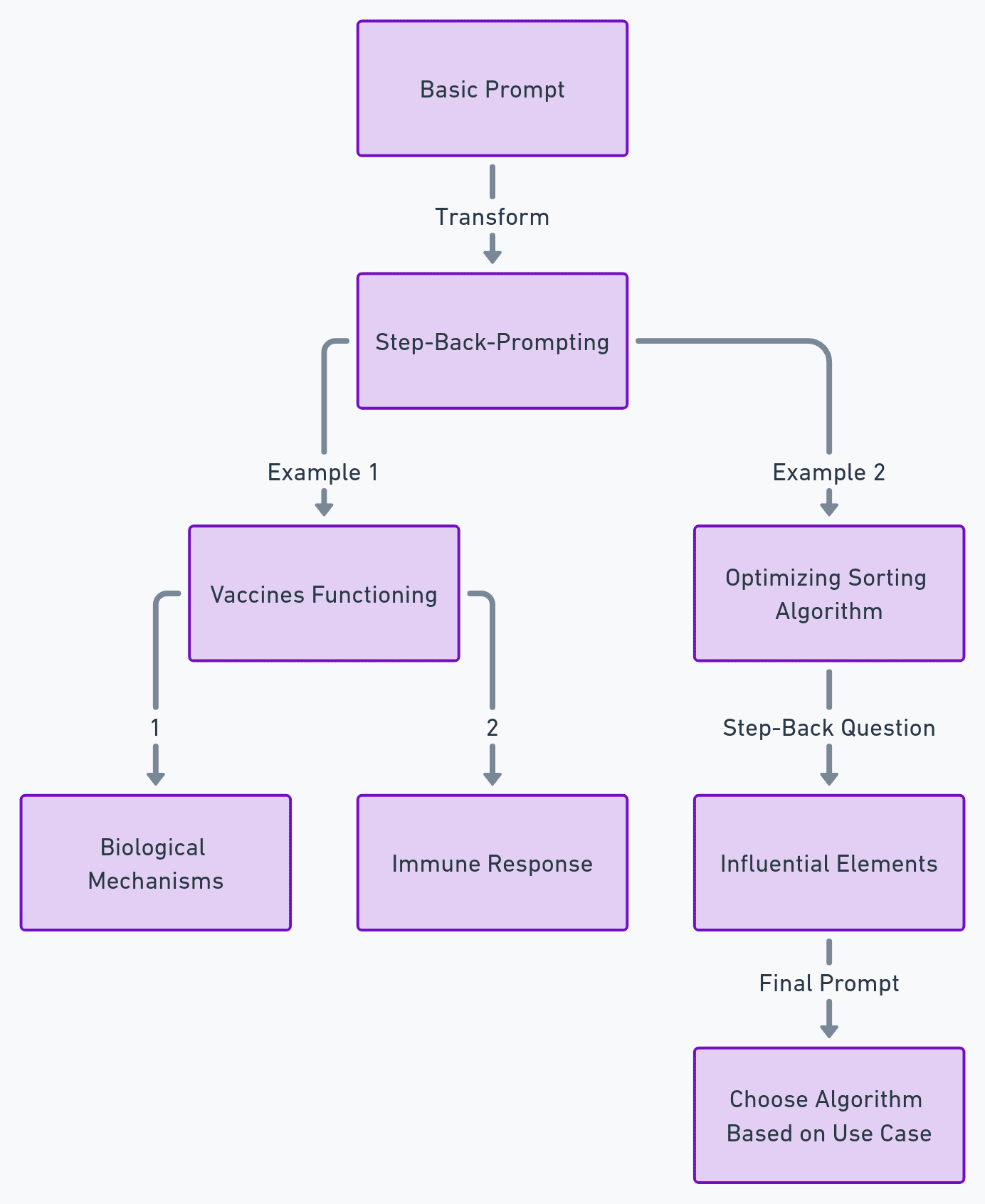

3. Step-Back Prompting

The Step-Back Prompting technique, presented by seven of Google’s DeepMind researchers, is designed to simulate reasoning when dealing with LLMs. This is similar to teaching a student the underlying principles of a concept before solving a complex problem.

To apply this technique, you need to point out the underlying principle behind a question before requesting the model to provide an answer. This ensures the model gets a robust context, which will help it give a technically correct and relevant answer.

Let’s examine two examples (using OpenAI’s ChatGPT with GPT-4):

Instance 1:

Basic Prompt: “How do vaccines work?”

Prompts using the Step-Back Technique:

- “What biological mechanisms allow vaccines to protect against diseases?”

- “Can you explain the body’s immune response triggered by vaccination?”

While the basic prompt provided a satisfactory answer, using the Step-Back Technique provided an in-depth, more technical answer. This could be especially useful for technical questions you might have.
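One way to read the technique is as a two-stage flow: first ask the broader, principle-level question, then answer the original question using that background. The sketch below assumes this reading. The function `step_back_answer` is hypothetical, and `fake_model` is a stand-in for a real LLM call so that the example runs offline; swap in your own client function to try it against an actual model.

```python
from typing import Callable


def step_back_answer(
    question: str,
    step_back_question: str,
    ask_model: Callable[[str], str],
) -> str:
    """Answer a question in two stages (illustrative sketch).

    `ask_model` is any function that takes a prompt and returns text.
    """
    # Stage 1: ask the broader question to surface the underlying principles.
    principles = ask_model(step_back_question)
    # Stage 2: answer the original question, grounded in those principles.
    final_prompt = (
        f"Background principles:\n{principles}\n\n"
        f"Using the principles above, answer this question: {question}"
    )
    return ask_model(final_prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; echoes the first line of the prompt."""
    return f"[model reply to: {prompt.splitlines()[0]}]"


answer = step_back_answer(
    question="How do vaccines work?",
    step_back_question=(
        "What biological mechanisms allow vaccines to protect against diseases?"
    ),
    ask_model=fake_model,
)
print(answer)
```

Passing the model call in as a function keeps the flow independent of any particular provider and makes it easy to test with a stub.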

As developers continue to build novel applications for existing AI models, there is an increasing need for advanced prompting techniques that can enhance the abilities of Large Language Models to understand not just our words but the intent and emotion behind them, and so generate more accurate and contextually relevant outputs.