Amazon was one of the tech giants that agreed to a set of White House recommendations regarding the use of generative AI last year. The privacy considerations addressed in those recommendations continue to roll out, with the latest included in the announcements at the AWS Summit in New York on July 9. In particular, contextual grounding for Guardrails for Amazon Bedrock provides customizable content filters for organizations deploying their own generative AI.

AWS Responsible AI Lead Diya Wynn spoke with TechRepublic in a virtual prebriefing about the new announcements and how companies balance generative AI’s wide-ranging knowledge with privacy and inclusion.

AWS NY Summit announcements: Changes to Guardrails for Amazon Bedrock

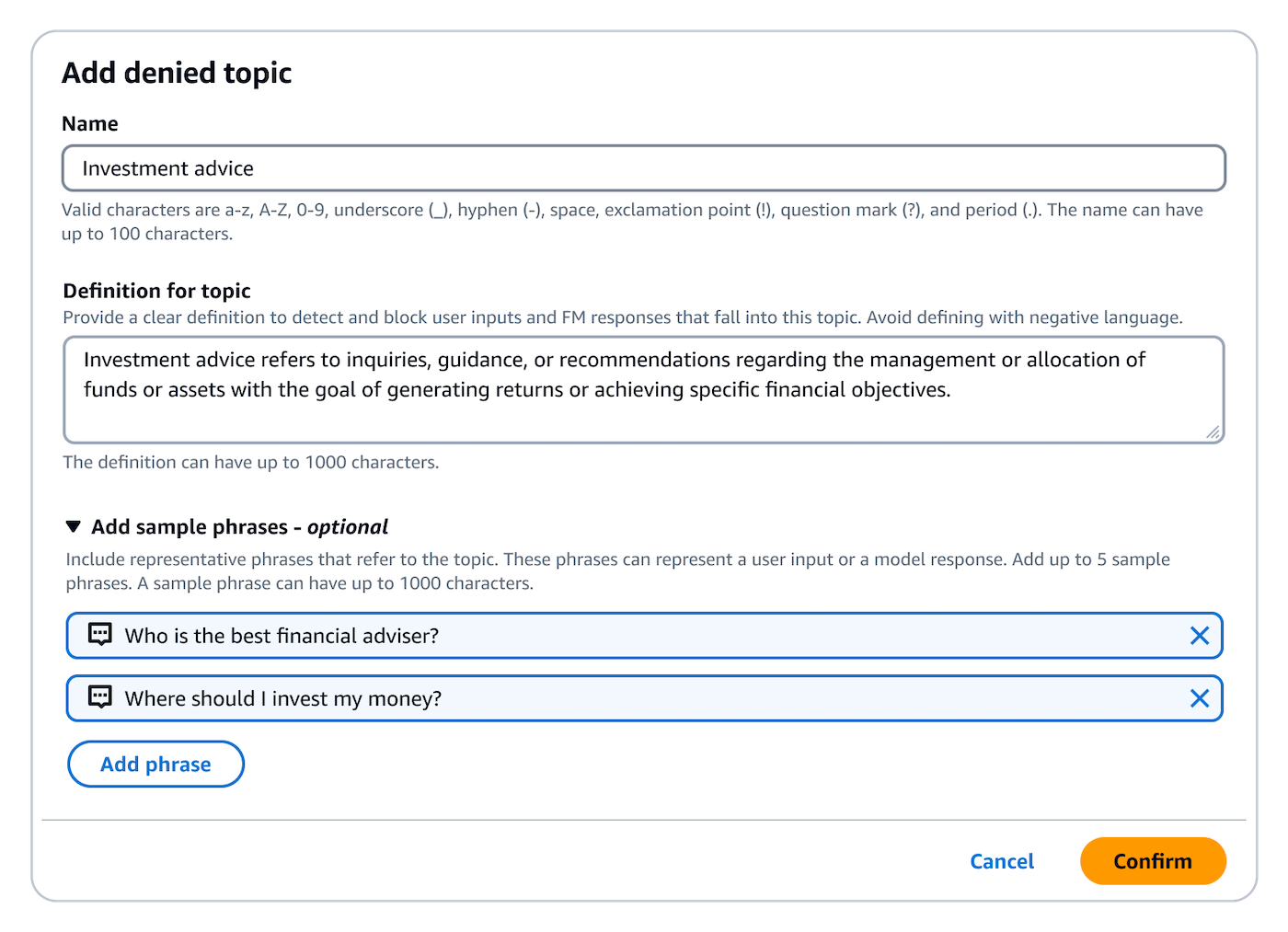

Guardrails for Amazon Bedrock, the safety filter for generative AI applications hosted on AWS, has new enhancements:

- Customers using Anthropic’s Claude 3 Haiku in preview can fine-tune the model with Bedrock starting July 10.

- Guardrails for Amazon Bedrock now includes contextual grounding checks, which detect hallucinations in model responses for retrieval-augmented generation (RAG) and summarization applications.
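For readers who want to try the check, here is a minimal sketch of what enabling it might look like with the AWS SDK for Python (boto3). It assumes the `bedrock` control-plane client’s `create_guardrail` operation and its `contextualGroundingPolicyConfig` parameter; the guardrail name, blocked-message text and 0.75 thresholds are illustrative placeholders, not AWS recommendations.

```python
from typing import Any


def grounding_guardrail_kwargs(
    name: str, grounding: float = 0.75, relevance: float = 0.75
) -> dict[str, Any]:
    """Build keyword arguments for bedrock.create_guardrail with contextual grounding.

    The name, messages and default thresholds are illustrative placeholders.
    """
    return {
        "name": name,
        # Text returned to the user when the guardrail blocks a prompt or a response.
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I couldn't find a well-grounded answer.",
        "contextualGroundingPolicyConfig": {
            "filtersConfig": [
                # GROUNDING: is the answer supported by the retrieved source text?
                {"type": "GROUNDING", "threshold": grounding},
                # RELEVANCE: does the answer actually address the user's query?
                {"type": "RELEVANCE", "threshold": relevance},
            ]
        },
    }


def create_grounding_guardrail(name: str) -> dict[str, Any]:
    """Create the guardrail; requires boto3 and AWS credentials with Bedrock access."""
    import boto3  # imported here so the payload builder above runs without the SDK

    client = boto3.client("bedrock")
    return client.create_guardrail(**grounding_guardrail_kwargs(name))
```

As we read AWS’s documentation, a response whose grounding or relevance score falls below the configured threshold is treated as a likely hallucination and blocked, so raising the thresholds makes the filter stricter.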

In addition, Guardrails is expanding into the standalone ApplyGuardrail API, with which Amazon businesses and AWS customers can apply safeguards to generative AI applications even if those models are hosted outside of AWS infrastructure. That means app creators can use toxicity filters and content filters, and can flag sensitive information that they want to exclude from the application. Wynn said custom Guardrails can reduce harmful content by up to 85%.
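Because ApplyGuardrail evaluates text rather than invoking a model, the answer being checked can come from a model hosted anywhere. The sketch below assumes boto3’s `bedrock-runtime` client and its `apply_guardrail` operation; the guardrail ID, version and sample strings are hypothetical. The `grounding_source` and `query` qualifiers supply the reference material and the user’s question that a contextual grounding check compares the answer against.

```python
from typing import Any


def apply_guardrail_request(
    guardrail_id: str,
    version: str,
    answer: str,
    sources: list[str],
    query: str,
) -> dict[str, Any]:
    """Build keyword arguments for bedrock-runtime.apply_guardrail on a model answer."""
    content: list[dict[str, Any]] = [
        # Reference passages the answer should be grounded in (e.g. RAG results).
        {"text": {"text": doc, "qualifiers": ["grounding_source"]}}
        for doc in sources
    ]
    # The user's question, used for the relevance check.
    content.append({"text": {"text": query, "qualifiers": ["query"]}})
    # The model output being evaluated; it can come from any provider.
    content.append({"text": {"text": answer, "qualifiers": ["guard_content"]}})
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": version,
        "source": "OUTPUT",  # evaluate a model response rather than a user prompt
        "content": content,
    }


def answer_passes(**request_args: Any) -> bool:
    """Call ApplyGuardrail; requires boto3 and AWS credentials with Bedrock access."""
    import boto3  # imported here so the request builder runs without the SDK

    client = boto3.client("bedrock-runtime")
    response = client.apply_guardrail(**apply_guardrail_request(**request_args))
    # "GUARDRAIL_INTERVENED" means at least one configured policy was triggered.
    return response["action"] != "GUARDRAIL_INTERVENED"
```

For example, `apply_guardrail_request("gr-example", "1", "Paris.", ["Paris is the capital of France."], "What is the capital of France?")` builds a three-part request: one grounding source, the query, and the answer to check.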

Contextual grounding and the ApplyGuardrail API will be available July 10 in select AWS regions.

Contextual grounding for Guardrails for Amazon Bedrock is part of the broader AWS responsible AI strategy

Contextual grounding connects to the overall AWS responsible AI strategy through AWS’s continued effort in “advancing the science as well as continuing to innovate and provide our customers with services that they can leverage in developing their businesses, developing AI products,” Wynn said.

“One of the areas that we hear often as a concern or consideration for customers is around hallucinations,” she said.

Contextual grounding, and Guardrails in general, can help mitigate that problem. Guardrails with contextual grounding can reduce up to 75% of the hallucinations previously seen in generative AI, Wynn said.

The way customers look at generative AI has changed as generative AI has become more mainstream over the last year.

“When we started some of our customer-facing work, customers weren’t necessarily coming to us, right?” said Wynn. “We were, you know, [looking at] specific use cases and helping to support, like, development, but the shift in the last year plus has ultimately been that there’s a greater awareness [of generative AI], and so companies are asking for and wanting to understand more about the ways in which we’re building and the things that they can do to ensure that their systems are safe.”

That means “addressing questions of bias” as well as reducing security issues or AI hallucinations, she said.

Additions to the Amazon Q enterprise assistant and other announcements from AWS NY Summit

AWS announced several new capabilities and product updates at the AWS NY Summit. Highlights include:

- A developer customization capability in the Amazon Q enterprise AI assistant that provides secure access to an organization’s code base.

- The addition of Amazon Q to SageMaker Studio.

- The general availability of Amazon Q Apps, a tool for deploying generative AI-powered apps based on company data.

- Access to Scale AI on Amazon Bedrock for customizing, configuring and fine-tuning AI models.

- Vector Search for Amazon MemoryDB, which speeds up vector search in vector databases on AWS.

SEE: Amazon recently announced Graviton4-powered cloud instances, which can support AWS’s Trainium and Inferentia AI chips.

AWS hits cloud computing training goal ahead of schedule

At its Summit NY, AWS announced it has followed through on its initiative to train 29 million people worldwide in cloud computing skills by 2025, and has already exceeded that number. Across 200 countries and territories, 31 million people have taken cloud-related AWS training courses.

AI training and roles

AWS training offerings are numerous, so we won’t list all of them here, but free cloud computing training took place around the world, both in person and online. That includes training on generative AI through the AI Ready initiative. Wynn highlighted two roles that people can train for in the new careers of the AI age: prompt engineer and AI engineer.

“You may not have data scientists necessarily engaged,” Wynn said. “They’re not training foundation models. You’ll have something like an AI engineer, perhaps.” The AI engineer fine-tunes the foundation model and integrates it into an application.

“I think the AI engineer role is something that we’re seeing an increase in visibility or popularity,” Wynn said. “I think the other is where you now have people that are responsible for prompt engineering. That’s a new role or area of skill that’s necessary because it’s not as simple as people might think, right, to give your input or prompt the right kind of context and detail to get some of the specifics that you might want out of a large language model.”

TechRepublic covered the AWS NY Summit remotely.