Introduction

LlamaParse is a doc parsing library developed by Llama Index to effectively and successfully parse paperwork resembling PDFs, PPTs, and many others.

Creating RAG purposes on prime of PDF paperwork presents a big problem many people face, particularly with the complicated job of parsing embedded objects resembling tables, figures, and many others. The character of those objects usually implies that standard parsing strategies wrestle to interpret and extract the knowledge encoded inside them precisely.

The software program improvement neighborhood has launched varied libraries and frameworks in response to this widespread problem. Examples of those options embrace LLMSherpa and unstructured.io. These instruments present sturdy and versatile options to a number of the most persistent points when parsing complicated PDFs.

The most recent addition to this checklist of invaluable instruments is LlamaParse. LlamaParse was developed by Llama Index, one of the well-regarded LLM frameworks at the moment obtainable. Due to this, LlamaParse will be straight built-in with the Llama Index. This seamless integration represents a big benefit, because it simplifies the implementation course of and ensures a better degree of compatibility between the 2 instruments. In conclusion, LlamaParse is a promising new instrument that makes parsing complicated PDFs much less daunting and extra environment friendly.

Studying Goals

- Acknowledge Doc Parsing Challenges: Perceive the difficulties in parsing complicated PDFs with embedded objects.

- Introduction to LlamaParse: Be taught what LlamaParse is and its seamless integration with Llama Index.

- Setup and Initialization: Create a LlamaCloud account, receive an API key, and set up the mandatory libraries.

- Implementing LlamaParse: Comply with the steps to initialize the LLM, load, and parse paperwork.

- Making a Vector Index and Querying Knowledge: Be taught to create a vector retailer index, arrange a question engine, and extract particular info from parsed paperwork.

This text was printed as part of the Knowledge Science Blogathon.

Steps to create a RAG software on prime of PDF utilizing LlamaParse

Step 1: Get the API key

LlamaParse is part of LlamaCloud platform, therefore you might want to have a LlamaCloud account to get an api key.

First, you have to create an account on LlamaCloud and log in to create an API key.

Step 2: Set up the required libraries

Now open your Jupyter Pocket book/Colab and set up the required libraries. Right here, we solely want to put in two libraries: llama-index and llama-parse. We shall be utilizing OpenAI’s mannequin for querying and embedding.

!pip set up llama-index

!pip set up llama-parseStep 3: Set the surroundings variables

import os

os.environ['OPENAI_API_KEY'] = 'sk-proj-****'

os.environ["LLAMA_CLOUD_API_KEY"] = 'llx-****'Step 4: Initialize the LLM and embedding mannequin

Right here, I’m utilizing gpt-3.5-turbo-0125 because the LLM and OpenAI’s text-embedding-3-small because the embedding mannequin. We are going to use the Settings module to switch the default LLM and the embedding mannequin.

from llama_index.llms.openai import OpenAI

from llama_index.embeddings.openai import OpenAIEmbedding

from llama_index.core import Settings

embed_model = OpenAIEmbedding(mannequin="text-embedding-3-small")

llm = OpenAI(mannequin="gpt-3.5-turbo-0125")

Settings.llm = llm

Settings.embed_model = embed_modelStep 5: Parse the Doc

Now, we’ll load our doc and convert it to the markdown sort. It’s then parsed utilizing MarkdownElementNodeParser.

The desk I used is taken from ncrb.gov.in and will be discovered right here: https://ncrb.gov.in/accidental-deaths-suicides-in-india-adsi. It has information embedded at completely different ranges.

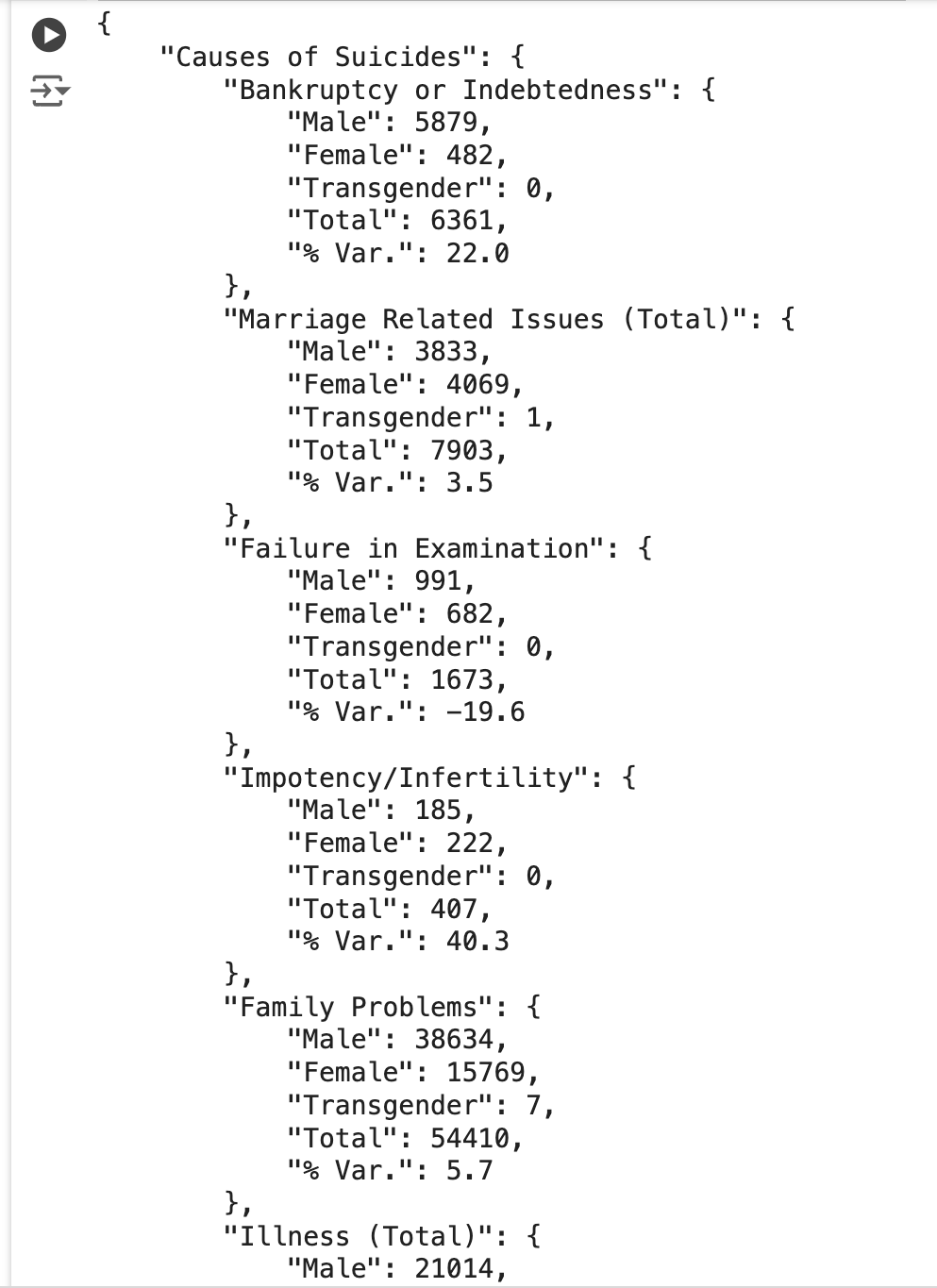

Under is the snapshot of the desk that i’m making an attempt to parse.

from llama_parse import LlamaParse

from llama_index.core.node_parser import MarkdownElementNodeParser

paperwork = LlamaParse(result_type="markdown").load_data("./Table_2021.pdf")

node_parser = MarkdownElementNodeParser(

llm=llm, num_workers=8

)

nodes = node_parser.get_nodes_from_documents(paperwork)

base_nodes, objects = node_parser.get_nodes_and_objects(nodes)Step 6: Create the vector index and question engine

Now, we’ll create a vector retailer index utilizing the llama index’s built-in implementation to create a question engine on prime of it. We are able to additionally use vector shops resembling chromadb, pinecone for this.

from llama_index.core import VectorStoreIndex

recursive_index = VectorStoreIndex(nodes=base_nodes + objects)

recursive_query_engine = recursive_index.as_query_engine(

similarity_top_k=5

)Step 7: Querying the Index

question = 'Extract the desk as a dict and exclude any details about 2020. Additionally embrace % var'

response = recursive_query_engine.question(question)

print(response)The above person question will question the underlying vector index and return the embedded contents within the PDF doc in JSON format, as proven within the picture beneath.

As you’ll be able to see within the screenshot, the desk was extracted in a clear JSON format.

Step 8: Placing all of it collectively

from llama_index.llms.openai import OpenAI

from llama_index.embeddings.openai import OpenAIEmbedding

from llama_index.core import Settings

from llama_parse import LlamaParse

from llama_index.core.node_parser import MarkdownElementNodeParser

from llama_index.core import VectorStoreIndex

embed_model = OpenAIEmbedding(mannequin="text-embedding-3-small")

llm = OpenAI(mannequin="gpt-3.5-turbo-0125")

Settings.llm = llm

Settings.embed_model = embed_model

paperwork = LlamaParse(result_type="markdown").load_data("./Table_2021.pdf")

node_parser = MarkdownElementNodeParser(

llm=llm, num_workers=8

)

nodes = node_parser.get_nodes_from_documents(paperwork)

base_nodes, objects = node_parser.get_nodes_and_objects(nodes)

recursive_index = VectorStoreIndex(nodes=base_nodes + objects)

recursive_query_engine = recursive_index.as_query_engine(

similarity_top_k=5

)

question = 'Extract the desk as a dict and exclude any details about 2020. Additionally embrace % var'

response = recursive_query_engine.question(question)

print(response)Conclusion

LlamaParse is an environment friendly instrument for extracting complicated objects from varied doc sorts, resembling PDF recordsdata with few strains of code. Nevertheless, it is very important notice {that a} sure degree of experience in working with LLM frameworks, such because the llama index, is required to make the most of this instrument absolutely.

LlamaParse proves priceless in dealing with duties of various complexity. Nevertheless, like every other instrument within the tech subject, it’s not solely resistant to errors. Due to this fact, performing a radical software analysis is very really useful independently or leveraging obtainable analysis instruments. Analysis libraries, resembling Ragas, Truera, and many others., present metrics to evaluate the accuracy and reliability of your outcomes. This step ensures potential points are recognized and resolved earlier than the appliance is pushed to a manufacturing surroundings.

Key Takeaways

- LlamaParse is a instrument created by the Llama Index group. It extracts complicated embedded objects from paperwork like PDFs with only a few strains of code.

- LlamaParse presents each free and paid plans. The free plan permits you to parse as much as 1000 pages per day.

- LlamaParse at the moment helps 10+ file sorts (.pdf, .pptx, .docx, .html, .xml, and extra).

- LlamaParse is a part of the LlamaCloud platform, so that you want a LlamaCloud account to get an API key.

- With LlamaParse, you’ll be able to present directions in pure language to format the output. It even helps picture extraction.

The media proven on this article will not be owned by Analytics Vidhya and is used on the Creator’s discretion.

Continuously requested questions(FAQ)

A. LlamaIndex is the main LLM framework, together with LangChain, for constructing LLM purposes. It helps join customized information sources to giant language fashions (LLMs) and is a broadly used instrument for constructing RAG purposes.

A. LlamaParse is an providing from Llama Index that may extract complicated tables and figures from paperwork like PDF, PPT, and many others. Due to this, LlamaParse will be straight built-in with the Llama Index, permitting us to make use of it together with the big variety of brokers and instruments that the Llama Index presents.

A. Llama Index is an LLM framework for constructing customized LLM purposes and offers varied instruments and brokers. LlamaParse is specifically targeted on extracting complicated embedded objects from paperwork like PDF, PPT, and many others.

A. The significance of LlamaParse lies in its capability to transform complicated unstructured information into tables, pictures, and many others., right into a structured format, which is essential within the fashionable world the place most respected info is on the market in unstructured type. This transformation is important for analytics functions. As an example, finding out an organization’s financials from its SEC filings, which might span round 100-200 pages, could be difficult with out such a instrument. LlamaParse offers an environment friendly approach to deal with and construction this huge quantity of unstructured information, making it extra accessible and helpful for evaluation.

A. Sure, LLMSherpa and unstructured.io are the options obtainable to LlamaParse.