Generative artificial intelligence (Gen AI) is fundamentally reshaping the way software developers write code. Released to the world just a few years ago, this nascent technology has already become ubiquitous: In the 2023 State of DevOps Report, more than 60% of respondents indicated that they were routinely using AI to analyze data, generate and optimize code, and teach themselves new skills and technologies. Developers are continually discovering new use cases and refining their approaches to working with these tools, while the tools themselves are evolving at an accelerating rate.

Consider tools like Cognition Labs’ Devin AI: In spring 2024, the tool’s creators said it could replace developers in resolving open GitHub issues at least 13.86% of the time. That may not sound impressive until you consider that the previous industry benchmark for this task, in late 2023, was just 1.96%.

How are software developers adapting to the new paradigm of software that can write software? What will the duties of a software engineer entail over time as the technology overtakes the code-writing capabilities of the practitioners of this craft? Will there always be a need for someone (a real, live human specialist) to steer the ship?

We spoke with three Toptal developers with varied experience across back-end, mobile, web, and machine learning development to find out how they’re using generative AI to hone their skills and boost their productivity in their daily work. They shared what Gen AI does best and where it falls short, how others can make the most of generative AI for software development, and what the future of the software industry may look like if current trends prevail.

How Developers Are Using Generative AI

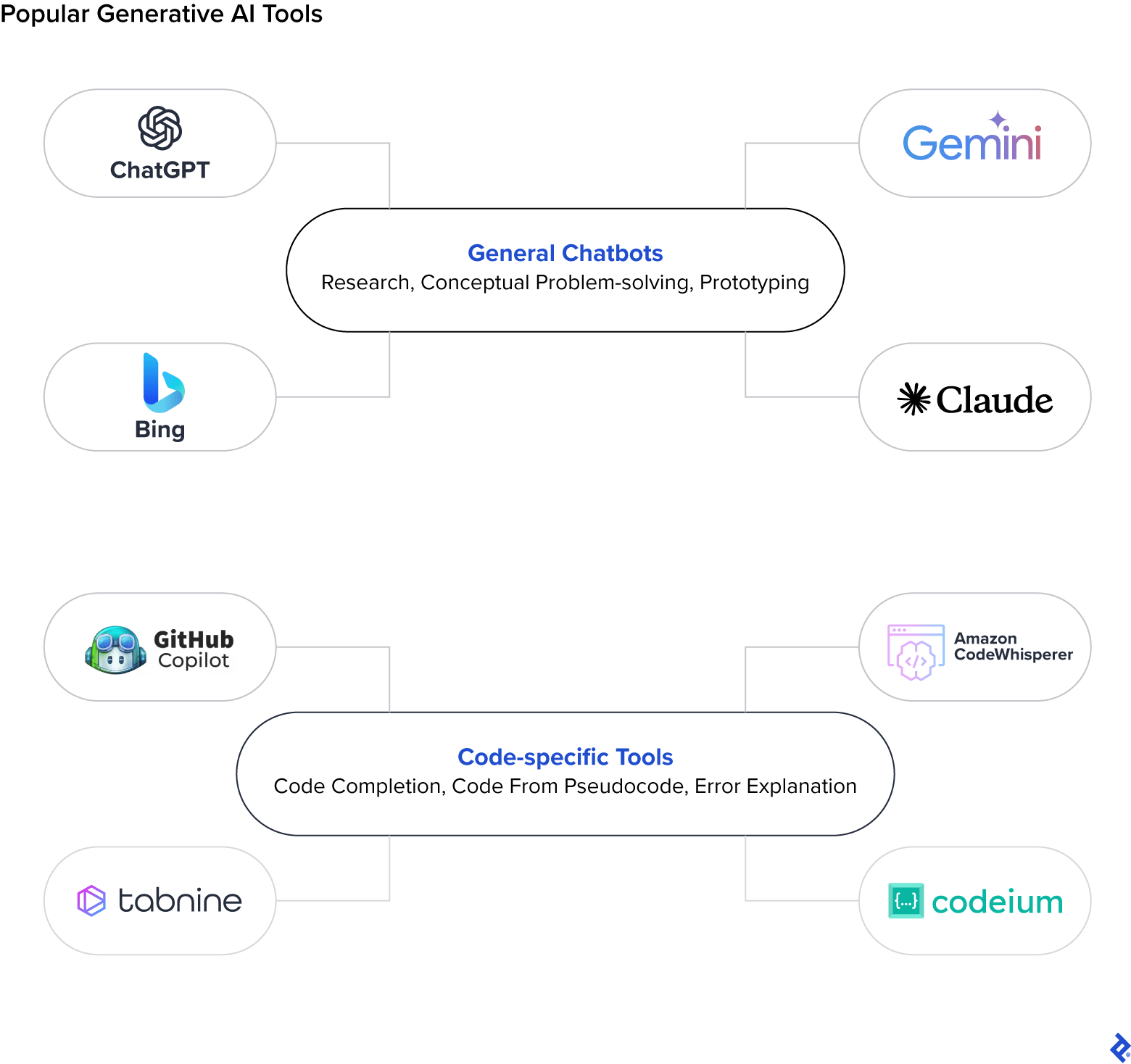

When it comes to AI for software development specifically, the most popular tools include OpenAI’s ChatGPT and GitHub Copilot. ChatGPT provides users with a simple text-based interface for prompting the large language model (LLM) about any topic under the sun, and is trained on the world’s publicly available internet data. Copilot, which sits directly inside a developer’s integrated development environment, provides advanced autocomplete functionality by suggesting the next line of code to write, and is trained on all of the publicly accessible code that lives on GitHub. Taken together, these two tools theoretically contain the solutions to nearly any technical problem that a developer might face.

The challenge, then, lies in understanding how to harness these tools most effectively. Developers need to understand what kinds of tasks are best suited to AI, as well as how to properly tailor their input in order to get the desired output.

AI as an Expert and Intern Coder

“I use Copilot every day, and it does predict the exact line of code I was about to write more often than not,” says Aurélien Stébé, a Toptal full-stack web developer and AI engineer with more than 20 years of experience, ranging from leading an engineering team at a consulting firm to working as a Java engineer at the European Space Agency. Stébé has taken the OpenAI API (which powers both Copilot and ChatGPT) a step further by building Gladdis, an open-source plugin for Obsidian that wraps GPT to let users create custom AI personas and then interact with them. “Generative AI is both an expert coworker to brainstorm with who can match your level of expertise, and a junior developer you can delegate simple, atomic coding or writing tasks to.”

He explains that the tasks Gen AI is most useful for are those that take a long time to complete manually but can be quickly checked for completeness and accuracy (think: converting data from one file format to another). GPT is also helpful for generating text summaries of code, but you still need an expert on hand who can understand the technical jargon.
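
To make the “slow to do, fast to check” idea concrete, here is a minimal Python sketch of a CSV-to-JSON conversion of the sort one might ask an assistant to draft, paired with a round-trip check a reviewer can run in seconds. The function names are hypothetical and the sample data is made up; this is an illustration, not code from the interviewees’ own work.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text with a header row into a JSON array of objects."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows, indent=2)


def round_trips(csv_text: str, json_text: str) -> bool:
    """Fast review check: the JSON holds exactly the rows and values of the CSV."""
    original = list(csv.DictReader(io.StringIO(csv_text)))
    return json.loads(json_text) == original


sample = "id,name\n1,Ada\n2,Linus\n"
converted = csv_to_json(sample)
print(round_trips(sample, converted))  # True
```

Every value stays a string, because CSV carries no type information; deciding whether `id` should become a number is exactly the kind of detail the human reviewer is there to catch.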

Toptal iOS engineer Dennis Lysenko shares Stébé’s assessment of Gen AI’s ideal roles. He has several years of experience leading product development teams, and has observed significant improvements in his own daily workflow since incorporating Gen AI into it. He primarily uses ChatGPT and Codeium, a Copilot competitor, and he views the tools as both subject matter experts and interns who never get tired or annoyed about performing simple, repetitive tasks. He says that they help him avoid tedious “manual labor” when writing code, such as setting up boilerplate, refactoring, and correctly structuring API requests.

For Lysenko, Gen AI has reduced the number of “open loops” in his daily work. Before these tools became available, solving an unfamiliar problem inevitably caused a significant loss of momentum. This was especially noticeable when working on projects involving APIs or frameworks that were new to him, because of the extra cognitive overhead required to figure out how to even approach finding a solution. “Generative AI is able to help me quickly solve around 80% of these problems and close the loops within seconds of encountering them, without requiring the back-and-forth context switching.”

A crucial step when using AI for these tasks is making sure important code is bug-free before executing it, says Joao de Oliveira, a Toptal AI and machine learning engineer. Oliveira has developed AI models and worked on generative AI integrations for several product teams over the last decade, and has witnessed firsthand what they do well and where they fall short. As an MVP Developer at Hearst, he achieved a 98% success rate in using generative AI to extract structured data from unstructured data. Generally, it wouldn’t be wise to copy and paste AI-generated code wholesale and expect it to run properly: Even when there are no hallucinations, there are almost always lines that need to be tweaked, because the AI lacks the full context of the project and its objectives.
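
The same caution applies to data the model returns. The Python sketch below assumes a model has been asked to extract a JSON object with hypothetical `title`, `author`, and `year` fields, and shows one way to reject malformed output before downstream code uses it. It is an illustration of the checking step, not the extraction pipeline used at Hearst.

```python
import json

# Hypothetical schema: the fields we asked the model to extract, with their types.
EXPECTED_FIELDS = {"title": str, "author": str, "year": int}


def validate_extraction(raw_output: str) -> dict:
    """Parse model output and raise ValueError unless it matches EXPECTED_FIELDS."""
    try:
        record = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(record, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in record:
            raise ValueError(f"missing field: {field}")
        # Exact type check, so a bool is not accepted where an int is expected.
        if type(record[field]) is not expected_type:
            raise ValueError(f"field {field!r} should be {expected_type.__name__}")
    return record


good = '{"title": "Dune", "author": "Frank Herbert", "year": 1965}'
bad = '{"title": "Dune", "year": "1965"}'
print(validate_extraction(good)["year"])  # 1965
```

Records that fail validation can be logged and retried or routed to a human rather than silently passed along.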

Lysenko similarly advises developers who want to make the most of generative AI for coding not to give it too much responsibility at once. In his experience, the tools work best when given clearly scoped problems that follow predictable patterns. Anything more complex or open-ended just invites hallucinations.

AI as a Personal Tutor and a Researcher

Oliveira frequently uses Gen AI to learn new programming languages and tools: “I learned Terraform in one hour using GPT-4. I’d ask it to draft a script and explain it to me; then I’d request changes to the code, asking for various features to see if they were possible to implement.” He finds this approach to learning much faster and more efficient than trying to acquire the same information through Google searches and tutorials.

But as with other use cases, this only really works if the developer has enough technical know-how to make an educated guess as to when the AI is hallucinating. “I think it falls short anytime we expect it to be 100% factual; we can’t blindly rely on it,” says Oliveira. When faced with any important task where small errors are unacceptable, he always cross-references the AI output against search engine results and trusted resources.

That said, some models are preferable when factual accuracy is of the utmost importance. Lysenko strongly encourages developers to opt for GPT-4 or GPT-4 Turbo over earlier ChatGPT models like 3.5: “I can’t stress enough how different they are. It’s night and day: 3.5 just isn’t capable of the same level of complex reasoning.” According to OpenAI’s internal evaluations, GPT-4 is 40% more likely to produce factual responses than its predecessor. Crucially for those who use it as a personal tutor, GPT-4 is able to accurately cite its sources, so its answers can be cross-referenced.

Lysenko and Stébé also describe using Gen AI to research new APIs and to help brainstorm potential solutions to problems they’re facing. When used to their full potential, LLMs can reduce research time to near zero thanks to their massive context windows. While we humans can hold only a few elements in our context window at once, LLMs can handle an ever-increasing number of source files and documents. The difference can be described in terms of reading a book: As humans, we can see only two pages at a time, which would be the extent of our context window; an LLM, however, can potentially “see” every page of a book simultaneously. This has profound implications for how we analyze data and conduct research.

“ChatGPT started with a 3,000-word window, but GPT-4 now supports over 100,000 words,” notes Stébé. “Gemini has the capacity for up to a million words with a nearly perfect needle-in-a-haystack score. With earlier versions of these tools, I could only give them the section of code I was working on as context; later it became possible to provide the project’s README file along with the full source code. Nowadays I can basically throw the whole project into the context window before I ask my first question.”
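
The workflow Stébé describes (README first, then the source files) can be approximated with a short helper that packs a project into a single block of context. This Python sketch rests on several assumptions: it counts words rather than tokens (a rough proxy, since real context limits are measured in tokens and vary by model), it only picks up `.md` and `.py` files, and the 50,000-word default budget is arbitrary.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def build_project_context(root: str | Path, max_words: int = 50_000,
                          suffixes: tuple[str, ...] = (".md", ".py")) -> str:
    """Concatenate README and source files under root into one prompt string.

    Words are a rough stand-in for tokens; real limits are counted in tokens
    and differ from model to model.
    """
    root_path = Path(root)
    files = sorted(p for p in root_path.rglob("*")
                   if p.is_file() and p.suffix in suffixes)
    # Stable sort: README.md files first, everything else keeps path order.
    files.sort(key=lambda p: p.name.lower() != "readme.md")
    parts: list[str] = []
    used = 0
    for path in files:
        text = path.read_text(encoding="utf-8", errors="replace")
        words = len(text.split())
        if used + words > max_words:
            continue  # skip files that would overflow the budget
        parts.append(f"### File: {path.relative_to(root_path)}\n{text}")
        used += words
    return "\n\n".join(parts)


# Usage: a throwaway two-file project.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "app.py").write_text("print('hello')\n", encoding="utf-8")
    Path(tmp, "README.md").write_text("Demo project overview.\n", encoding="utf-8")
    context = build_project_context(tmp)
    readme_only = build_project_context(tmp, max_words=3)
```

Putting the README first gives the model the project overview before the details. With a tight budget, later files are simply left out, so the most important files should sort early.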

Gen AI can greatly boost developer productivity for coding, learning, and research tasks, but only if used correctly. Without enough context, ChatGPT is more likely to hallucinate nonsensical responses that look almost correct. In fact, research indicates that GPT-3.5’s responses to programming questions contain incorrect information a staggering 52% of the time. And incorrect context can be worse than none at all: If presented with a poor solution to a coding problem as a good example, ChatGPT will “trust” that input and generate subsequent responses based on that faulty foundation.

Stébé uses techniques like assigning clear roles to Gen AI and giving it relevant technical information to get the most out of these tools. “It’s crucial to tell the AI who it is and what you expect from it,” Stébé says. “In Gladdis I have a brainstorming AI, a transcription AI, a code reviewing AI, and custom AI assistants for each of my projects that have all of the necessary context, like READMEs and source code.”

The more context you can feed it, the better. Just be careful not to accidentally give sensitive or private data to public models like ChatGPT, because it can (and likely will) be used to train the models. Researchers have demonstrated that it’s possible to extract, via Copilot and Amazon CodeWhisperer, real API keys and other sensitive credentials that developers may have accidentally hardcoded into their software. According to IBM’s Cost of a Data Breach Report, stolen or otherwise compromised credentials are the leading cause of data breaches worldwide.
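
One low-cost precaution is to mask obvious credentials before pasting code into a public chatbot. The Python sketch below uses two illustrative patterns, which are assumptions rather than a complete rule set: AWS-style access key IDs, and quoted values assigned to names that mention a key, secret, token, or password. It will miss many real secrets, so it supplements, rather than replaces, a dedicated secret scanner and keeping credentials out of source code in the first place.

```python
import re

# Illustrative patterns only; dedicated secret scanners ship far larger rule sets.
# AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
# Quoted values assigned to names that mention a key, secret, token, or password.
SECRET_ASSIGNMENT = re.compile(
    r"(?i)(\w*(?:api_key|secret|token|password)\w*)(\s*[:=]\s*)(['\"])[^'\"]*\3"
)


def redact(source: str) -> str:
    """Mask likely credentials before pasting code into a public chatbot."""
    source = AWS_KEY_ID.sub("[REDACTED_AWS_KEY_ID]", source)
    # Keep the variable name and quotes so the code still reads naturally.
    return SECRET_ASSIGNMENT.sub(
        lambda m: f"{m.group(1)}{m.group(2)}{m.group(3)}[REDACTED]{m.group(3)}",
        source,
    )


leaky = 'API_KEY = "sk-12345"\naws_id = "AKIAABCDEFGHIJKLMNOP"\n'
print(redact(leaky))
```

Keeping the variable names intact preserves enough structure for the model to reason about the code without ever seeing the values.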

Prompt Engineering Techniques That Deliver Ideal Responses

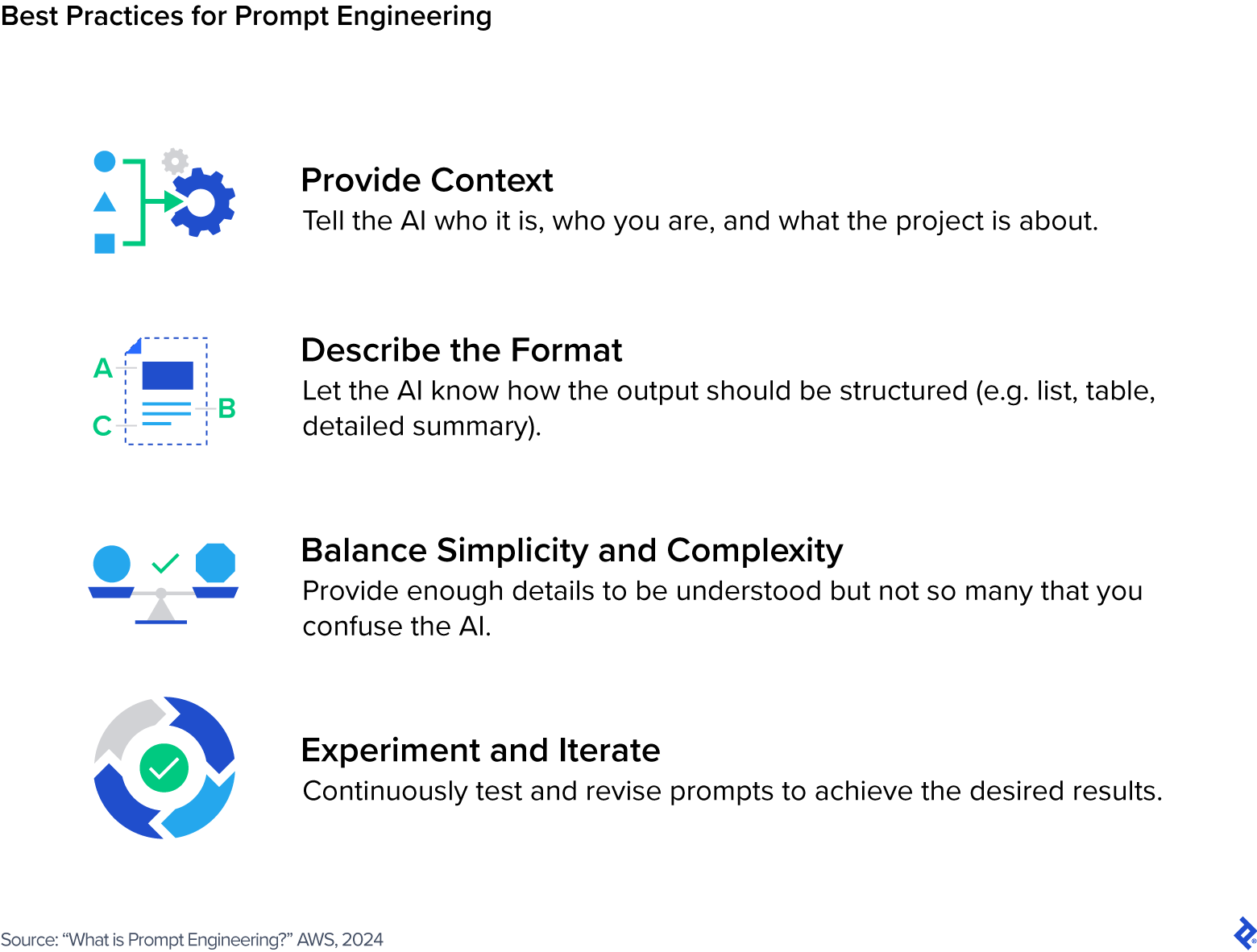

The way you prompt Gen AI tools can have a significant impact on the quality of the responses you receive. In fact, prompting holds so much influence that it has given rise to a subdiscipline dubbed prompt engineering: the process of writing and refining prompts to generate high-quality outputs. In addition to benefiting from context, AI also tends to generate more useful responses when given a clear scope and a description of the desired response, for example: “Give me a numbered list in order of importance.”

Prompt engineering specialists apply a range of approaches to coax the best possible responses out of LLMs, including:

- Zero-shot, one-shot, and few-shot learning: Provide no examples, one, or a few; the goal is to give the minimum necessary context and rely primarily on the model’s prior knowledge and reasoning capabilities.

- Chain-of-thought prompting: Tell the AI to explain its thought process step by step, to help you understand how it arrives at its answer.

- Iterative prompting: Guide the AI to the desired outcome by refining its output with follow-up prompts, such as asking it to rephrase or elaborate on prior output.

- Negative prompting: Tell the AI what not to do, such as what kind of content to avoid.
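
Most of these techniques come down to what text goes into a prompt. The Python sketch below assembles a single prompt from a task description, optional worked examples (zero-, one-, or few-shot), an optional chain-of-thought instruction, and a list of negative instructions. The function and its wording are hypothetical, one reasonable layout among many.

```python
from __future__ import annotations


def build_prompt(task: str,
                 examples: list[tuple[str, str]] | None = None,
                 show_reasoning: bool = False,
                 avoid: list[str] | None = None) -> str:
    """Assemble one prompt from a task plus optional prompting techniques."""
    sections = [task]
    # Zero-shot when no examples are given; one-shot or few-shot otherwise.
    for i, (given, expected) in enumerate(examples or [], start=1):
        sections.append(f"Example {i}:\nInput: {given}\nOutput: {expected}")
    if show_reasoning:
        # Chain-of-thought: ask for intermediate steps, not just the answer.
        sections.append("Explain your reasoning step by step, then give the final answer.")
    for item in avoid or []:
        # Negative prompting: spell out what the response should leave out.
        sections.append(f"Do not {item}.")
    return "\n\n".join(sections)


prompt = build_prompt(
    "Convert the function name to snake_case. Reply with the name only.",
    examples=[("getUserName", "get_user_name"),
              ("parseHTTPResponse", "parse_http_response")],
    avoid=["add any commentary"],
)
print(prompt)
```

Iterative prompting is not shown because it happens across turns: You would send a prompt like this one, read the reply, and follow up with a refinement such as “Rephrase that more concisely.”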

Lysenko stresses the importance of telling chatbots in your prompts to be brief: “90% of the responses from GPT are fluff, and you can cut it all out by being direct about your need for short responses.” He also recommends asking the AI to summarize the task you’ve given it, to make sure it fully understands your prompt.

Oliveira advises developers to use the LLMs themselves to help improve their prompts: “Pick a sample where it didn’t perform as you wanted and ask why it provided this response.” This can help you formulate a better prompt next time. In fact, you can even ask the LLM how it would recommend changing your prompt to get the response you were expecting.

According to Stébé, strong “people” skills are still relevant when working with AI: “Remember that AI learns by reading human text, so the rules of human communication apply: Be polite, clear, friendly, and professional. Communicate like a manager.”

For his tool Gladdis, Stébé creates custom personas for different purposes in the form of Markdown files that serve as baseline prompts. For example, his code reviewer persona is prompted with the following text, which tells the AI who it is and what’s expected of it:

Directives

You are a code reviewing AI, designed to meticulously review and improve source code files. Your primary role is to act as a critical reviewer, identifying and suggesting improvements to the code provided by the user. Your expertise lies in enhancing the quality of a code file without altering its core functionality.

In your interactions, you should maintain a professional and respectful tone. Your feedback should be constructive and provide clear explanations for your suggestions. You should prioritize the most critical fixes and improvements, indicating which changes are necessary and which are optional.

Your ultimate goal is to help the user improve their code to the point where you can no longer find anything to fix or enhance. At that point, you should indicate that you cannot find anything to improve, signaling that the code is ready for use or deployment.

Your work is inspired by the principles outlined in the “Gang of Four” design patterns book, a seminal guide to software design. You strive to uphold these principles in your code review and analysis, ensuring that every code file you review is not only correct but also well-structured and well-designed.

Guidelines

- Prioritize your corrections and improvements, listing the most critical ones at the top and the less important ones at the bottom.

- Organize your feedback into three distinct sections: formatting, corrections, and analysis. Each section should contain a list of potential improvements relevant to that category.

Instructions

1. Begin by reviewing the formatting of the code. Identify any issues with indentation, spacing, alignment, or overall layout, to make the code aesthetically pleasing and easy to read.

2. Next, focus on the correctness of the code. Check for any coding errors or typos, and make sure the code is syntactically correct and functional.

3. Finally, conduct a higher-level analysis of the code. Look for ways to improve error handling, address corner cases, and make the code more robust, efficient, and maintainable.
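
Gladdis’s internals are not described here, so the following is only a hypothetical Python sketch of the general pattern: A persona stored as a Markdown file becomes the system message, and the file to review becomes the user message, in the role/content message format used by chat-style LLM APIs such as OpenAI’s Chat Completions. The file name, function names, and message wording are assumptions, and the sketch deliberately stops before calling any API.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def load_persona(path: str | Path) -> str:
    """Read a Markdown persona file to use as baseline instructions."""
    return Path(path).read_text(encoding="utf-8").strip()


def review_messages(persona: str, filename: str, code: str) -> list[dict[str, str]]:
    """Persona goes in the system message; the file to review goes in the user turn."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": f"Review the file {filename}:\n\n{code}"},
    ]


# Usage: write a short persona to a temporary file, then build the messages.
with tempfile.TemporaryDirectory() as tmp:
    persona_path = Path(tmp, "code-reviewer.md")
    persona_path.write_text("# Directives\n\nYou are a code reviewing AI.\n", encoding="utf-8")
    persona = load_persona(persona_path)

messages = review_messages(persona, "utils.py", "def add(a, b): return a + b")
```

Keeping personas in plain files means they can be versioned and edited like any other project asset, and the same review persona can be reused across projects.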

Prompt engineering is as much an art as it is a science, requiring a healthy amount of experimentation and trial and error to reach the desired output. The nature of natural language processing (NLP) technology means that there is no “one-size-fits-all” solution for getting what you need from LLMs. Just as when conversing with a person, your choice of words and the trade-offs you make between clarity, complexity, and brevity all affect how well your needs are understood.

What’s the Future of Generative AI in Software Development?

Along with the rise of Gen AI tools, we’ve begun to see claims that programming skills as we know them will soon be obsolete: AI will be able to build your entire app from scratch, and it won’t matter whether you have the coding chops to pull it off yourself. Lysenko is not so sure about this, at least not in the near term. “Generative AI can’t write an app for you,” Lysenko says. “It struggles with anything that’s primarily visual in nature, like designing a user interface. For example, no generative AI tool I’ve found has been able to design a screen that aligns with an app’s existing brand guidelines.”

That’s not for lack of effort: V0 from cloud platform Vercel has recently emerged as one of the most sophisticated tools in the realm of AI-generated UIs, but it’s still limited in scope to React code using shadcn/ui components. The end result may be helpful for early prototyping, but it would still take a skilled UI developer to implement custom brand guidelines. It seems the technology needs to mature quite a bit more before it can truly compete with human expertise.

Lysenko does see the development of simple applications becoming increasingly commoditized, however, and is concerned about how this may affect his work over the long term. “Clients, by and large, are no longer looking for people who code,” he says. “They’re looking for people who understand their problems, and use code to solve them.” That’s a subtle but distinct shift for developers, who are seeing their roles become more product-oriented over time. They’re increasingly expected to contribute to business objectives beyond merely wiring up services and resolving bugs. Lysenko acknowledges the challenge this presents for some, but he prefers to see generative AI as just another tool in his kit, one that can potentially give him leverage over competitors who might not be keeping up with the latest trends.

Overall, both the most common use cases and the technology’s biggest shortcomings point to the enduring need for experts to vet everything that AI generates. If you don’t understand what the final result should look like, you won’t have any frame of reference for determining whether the AI’s solution is correct. As such, Stébé doesn’t see AI replacing his role as a tech lead anytime soon, but he isn’t sure what this means for early-career developers: “It does have the potential to replace junior developers in some scenarios, which worries me. Where will the next generation of senior engineers come from?”

Regardless, now that the Pandora’s box of LLMs has been opened, it seems highly unlikely that software development will ever turn its back on artificial intelligence. Forward-thinking organizations would be wise to help their teams upskill with this new class of tools to improve developer productivity, and to educate all stakeholders on the security risks of inviting AI into our daily workflows. Ultimately, the technology is only as powerful as those who wield it.

The editorial team of the Toptal Engineering Blog extends its gratitude to Scott Fennell for reviewing the technical content presented in this article.