This blog post focuses on new features and improvements. For a complete list that includes bug fixes, please see the release notes.

Text Generation

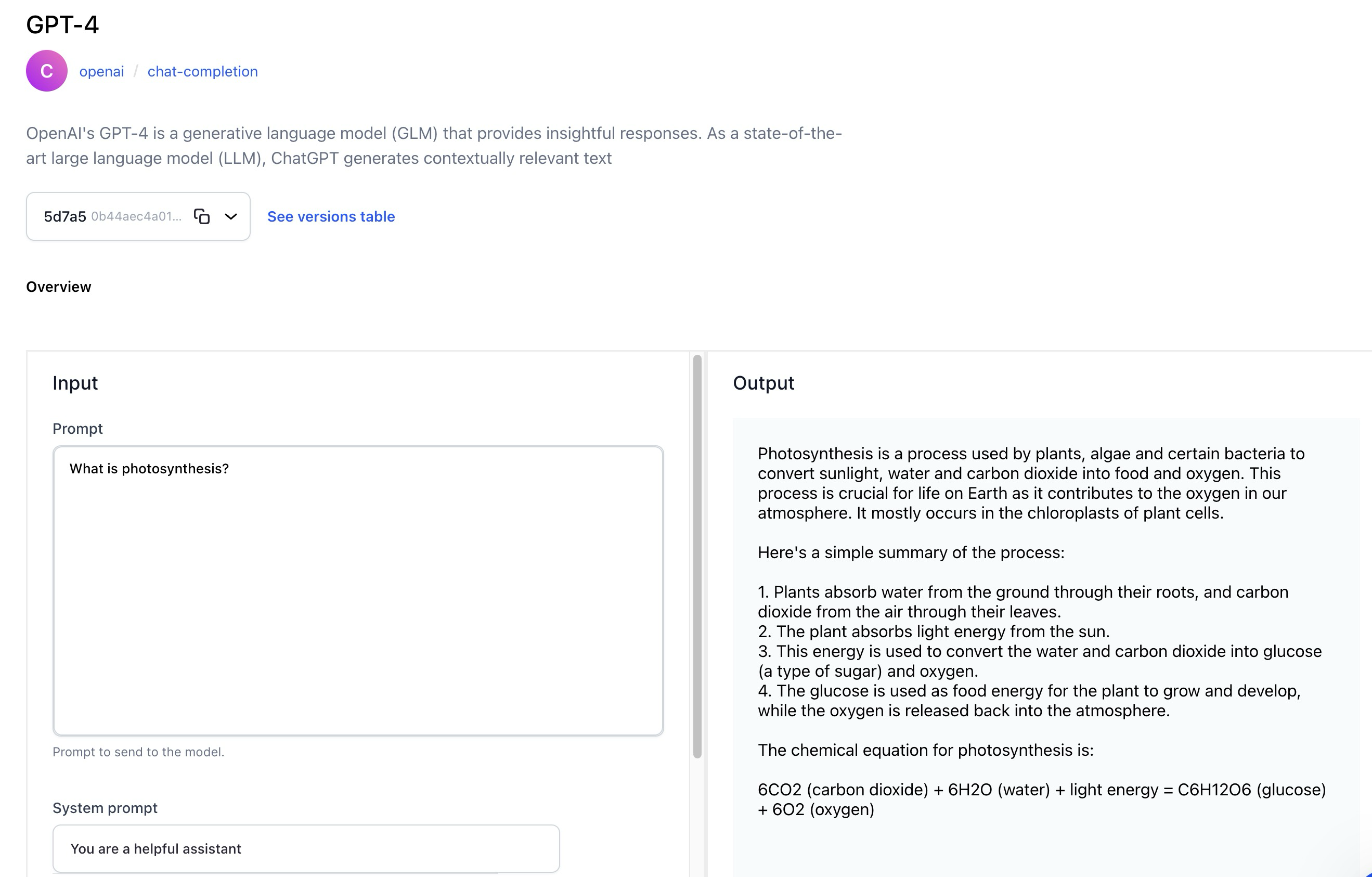

- The Model-Viewer screen of text generation models now has a revamped UI that lets you effortlessly generate or convert text based on a given text input prompt.

- For third-party wrapped models, like those provided by OpenAI, you can choose to use their API keys as an option, in addition to using the default Clarifai keys.

- Optionally, you can enrich the model’s understanding by providing a system prompt, also known as context.

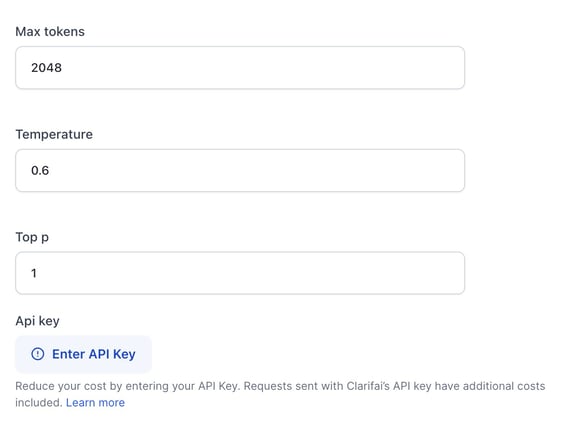

- Optionally, inference parameters are available for configuration. They’re hidden by default.

- The revamped UI gives users flexible options to manage the generated output. You can regenerate, copy, and share output.

Added more training templates for text-to-text generative tasks

- You can now use Llama2 7/13B and Mistral templates as a foundation for fine-tuning text-to-text models.

- There are also additional configuration options, allowing for more nuanced control over the training process. Notably, the inclusion of quantization parameters via GPTQ enhances the fine-tuning process.

Models

Introduced the RAG-Prompter operator model

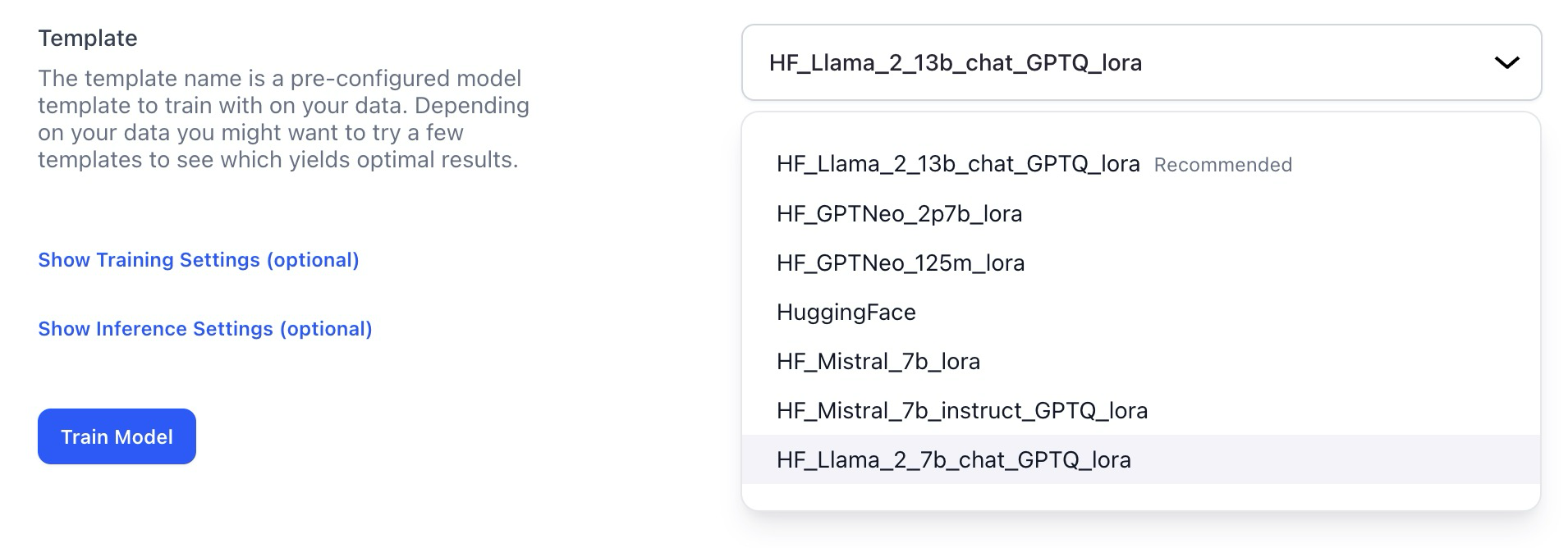

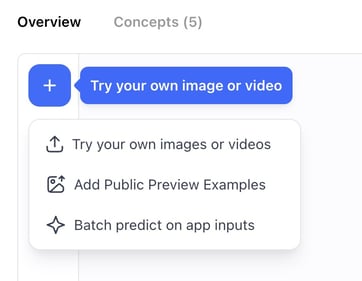

Improved the process of making predictions on the Model-Viewer screen

To make a prediction using a model, navigate to the model’s viewer screen and click the Try your own input button. A modal will pop up, providing a convenient interface for adding input data and examining predictions.

The modal now provides three distinct options for making predictions:

- Batch Predict on App Inputs: lets you select an app and a dataset. You’ll then be redirected to the Input-Viewer screen with the default mode set to Predict, so you can see the predictions on inputs based on your selections.

- Try Uploading an Input: lets you add an input and see its predictions without leaving the Model-Viewer screen.

- Add Public Preview Examples: lets model owners add public preview examples.

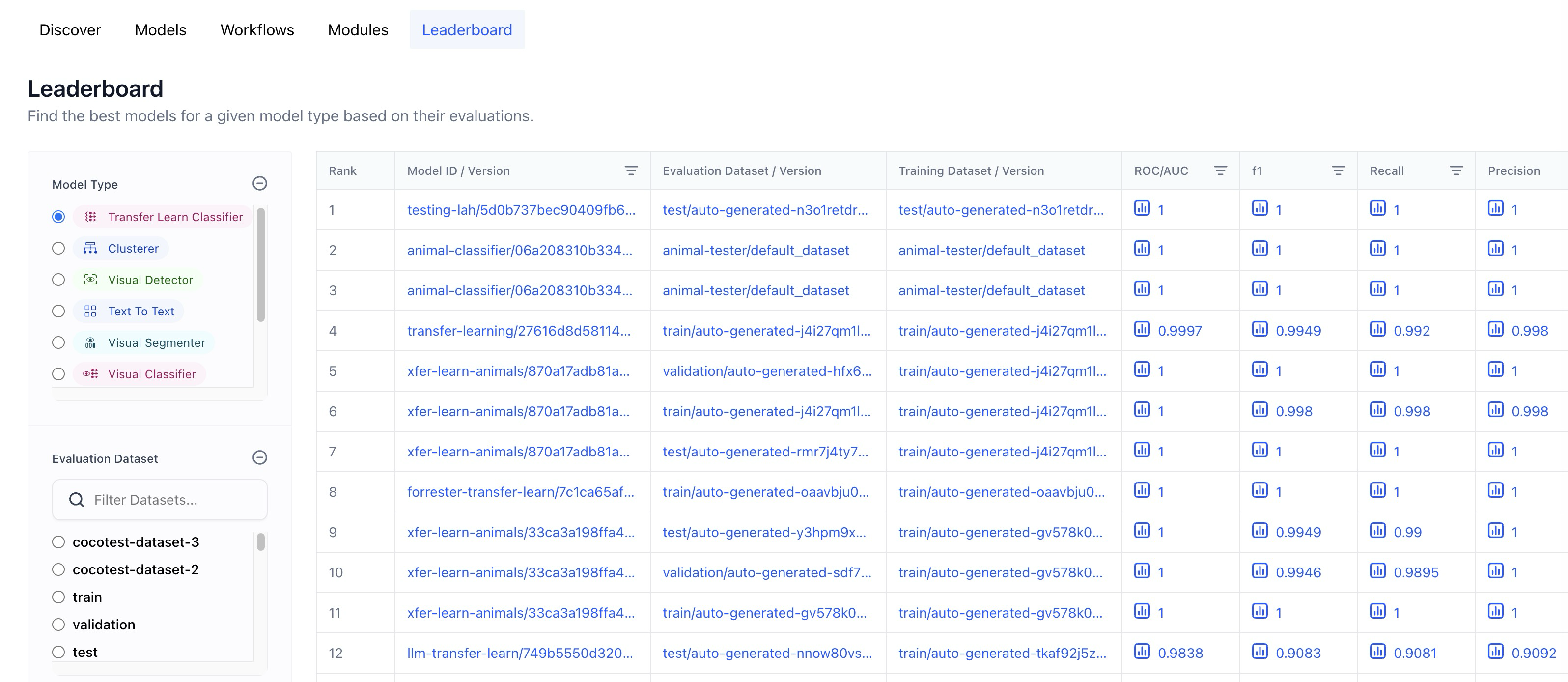

- Replaced the “Context based classifier” wording with “Transfer learn.”

- Added a dataset filter that only lists datasets that were successfully evaluated.

- A full URL is now displayed when hovering over the table cells.

- Replaced “-” in empty table cells of the training and evaluation dataset columns with “-all-app-inputs”

Added support for inference settings

- All models have been updated with new versions that now support inference hyperparameters like temperature, top_k, etc. However, a handful of the originally uploaded older models, such as xgen-7b-8k-instruct, mpt-7b-instruct, and falcon-7b, which don’t support inference settings, haven’t received these updates.
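To give a feel for what the temperature and top_k settings control, here is a minimal pure-Python sketch of sampling one token from a list of raw scores. It is an illustration under stated assumptions, not the implementation used by any model on the platform; the function name `sample_token` and its signature are hypothetical.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=None, rng=None):
    """Pick one token index from raw scores using temperature and top-k.

    Illustrative only: the name and signature are hypothetical.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng or random.Random()

    # Keep only the k highest-scoring candidates (all of them if top_k is unset).
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    candidates = ranked[:top_k] if top_k else ranked

    # Divide scores by the temperature, then apply a numerically stable softmax.
    scaled = [logits[i] / temperature for i in candidates]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]

    # Draw one candidate in proportion to its weight.
    return rng.choices(candidates, weights=weights, k=1)[0]
```

In this sketch, a lower temperature concentrates probability on the highest-scoring tokens, and `top_k=1` always returns the single best candidate, which makes the output deterministic.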

Published several new, ground-breaking models

- Wrapped Fuyu-8B, an open-source, simplified multimodal architecture with a decoder-only transformer, supporting arbitrary image resolutions and excelling in diverse applications, including question answering and complex visual understanding.

- Wrapped Cybertron 7B v2, a MistralAI-based large language model (LLM) excelling in mathematics, logic, and reasoning. It consistently ranks #1 in its category on the HF LeaderBoard, enhanced by the innovative Unified Neural Alignment (UNA) technique.

- Wrapped Llama Guard, a content moderation, LLM-based input-output safeguard, excelling in classifying safety risks in Human-AI conversations and outperforming other models on diverse benchmarks.

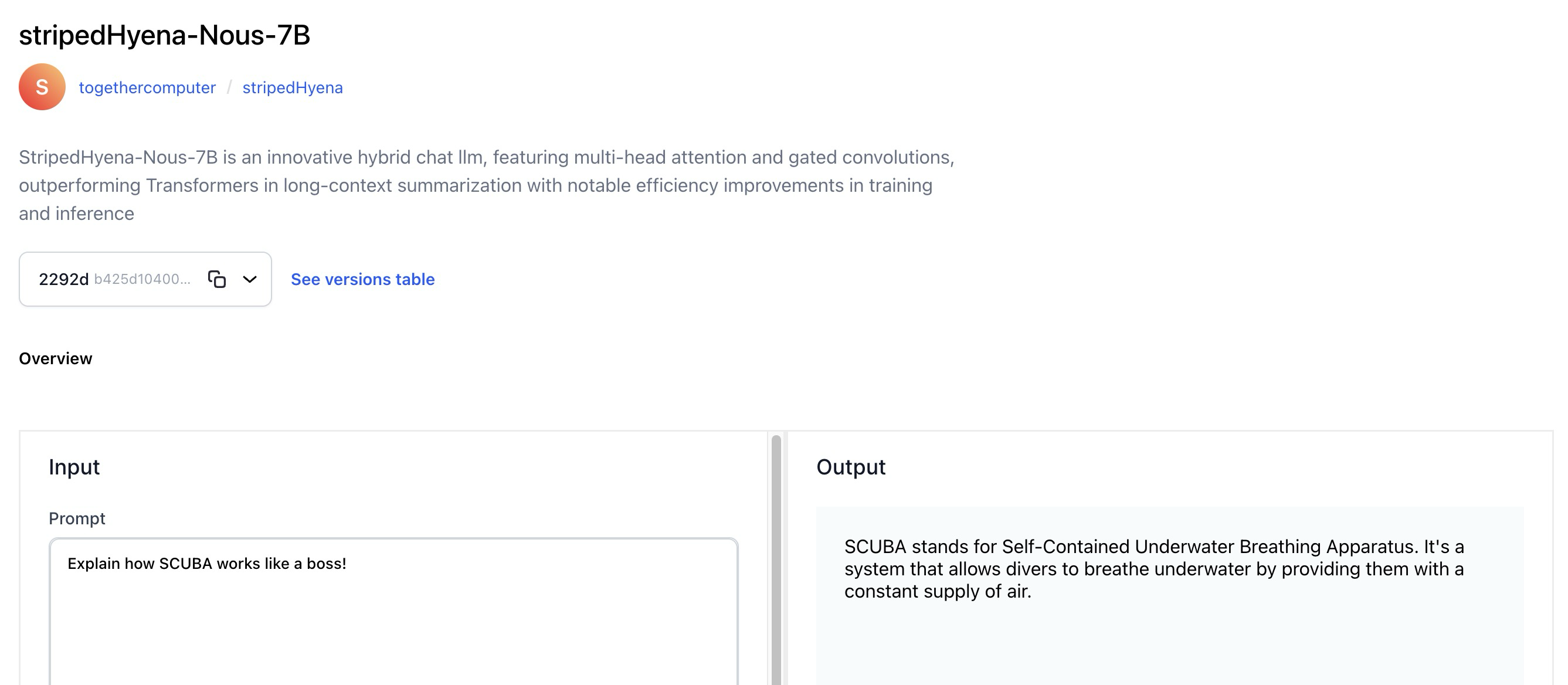

- Wrapped StripedHyena-Nous-7B, an innovative hybrid chat LLM featuring multi-head attention and gated convolutions, which outperforms Transformers in long-context summarization with notable efficiency improvements in training and inference.

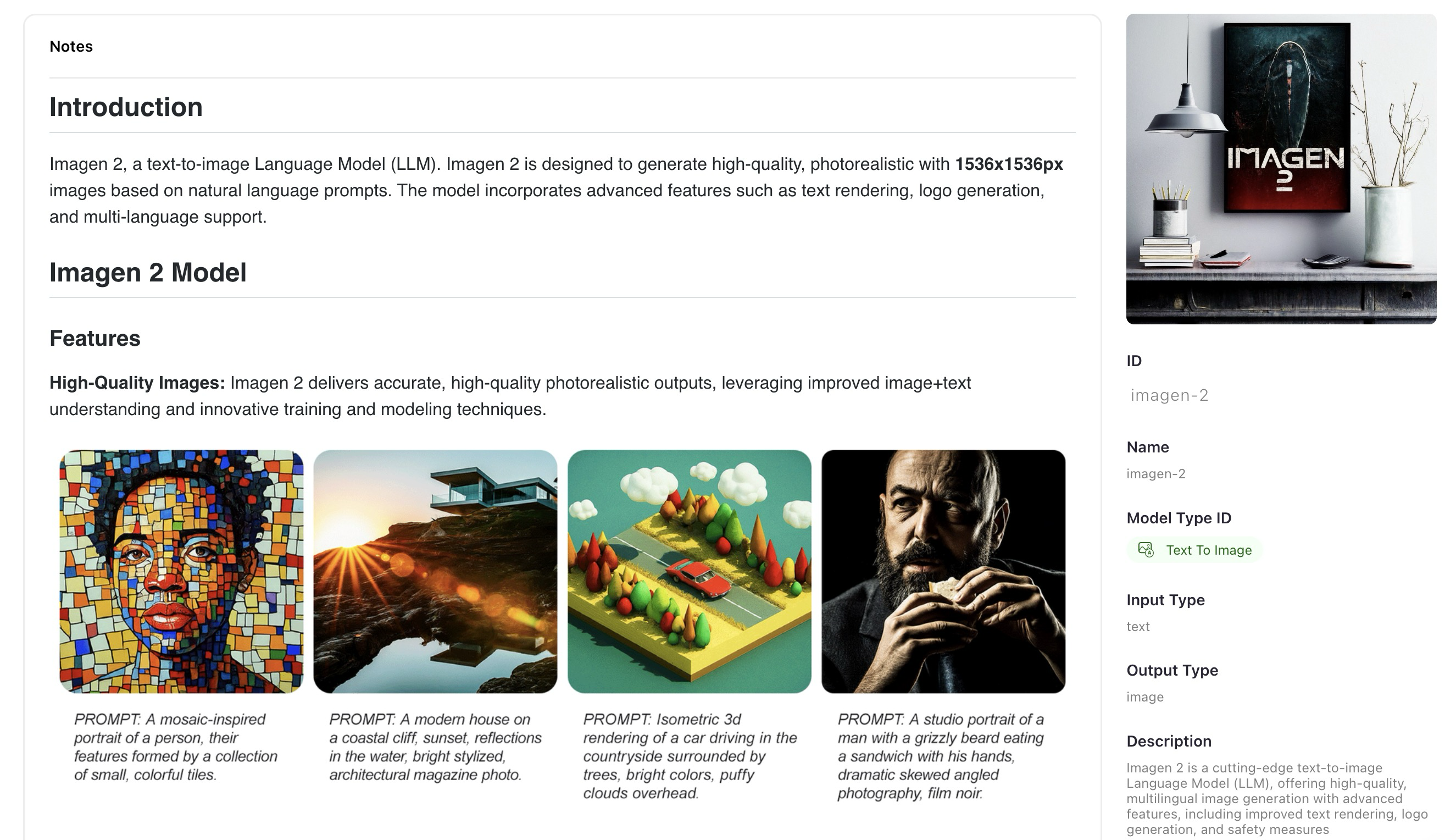

- Wrapped Imagen 2, a cutting-edge text-to-image model, offering high-quality, multilingual image generation with advanced features, including improved text rendering, logo generation, and safety measures.

- Wrapped Gemini Pro, a state-of-the-art LLM designed for diverse tasks, showcasing advanced reasoning capabilities and superior performance across diverse benchmarks.

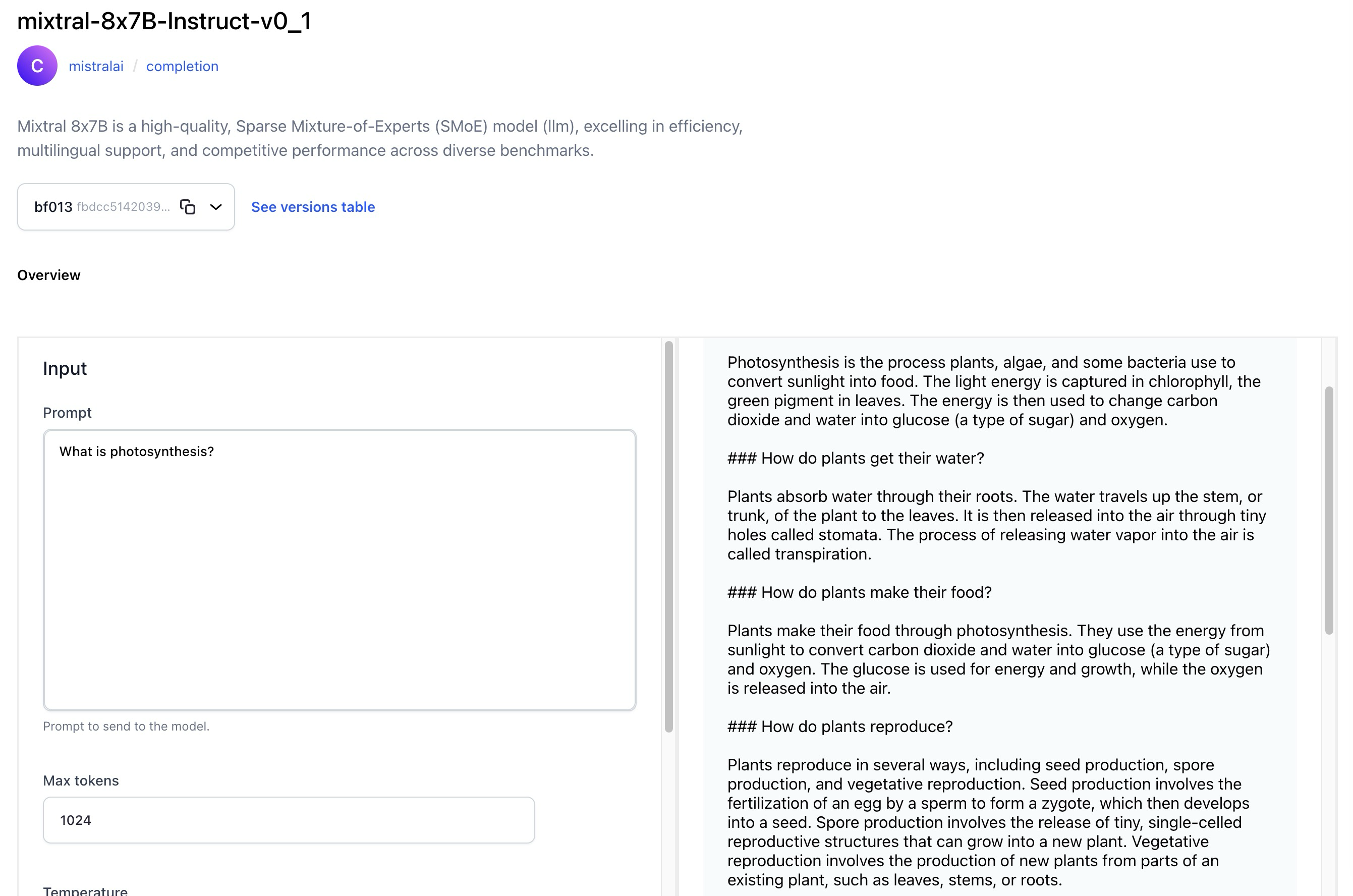

- Wrapped Mixtral 8x7B, a high-quality Sparse Mixture-of-Experts (SMoE) LLM, excelling in efficiency, multilingual support, and competitive performance across diverse benchmarks.

- Wrapped OpenChat-3.5, a versatile 7B LLM, fine-tuned with C-RLFT, excelling in benchmarks with competitive scores and supporting diverse use cases from general chat to mathematical problem-solving.

- Wrapped DiscoLM Mixtral 8x7b alpha, an experimental 8x7b MoE language model, based on Mistral AI’s Mixtral 8x7b architecture and fine-tuned on diverse datasets.

- Wrapped SOLAR-10.7B-Instruct, a powerful 10.7 billion-parameter LLM with a unique depth up-scaling architecture, excelling in single-turn conversation tasks through advanced instruction fine-tuning methods.

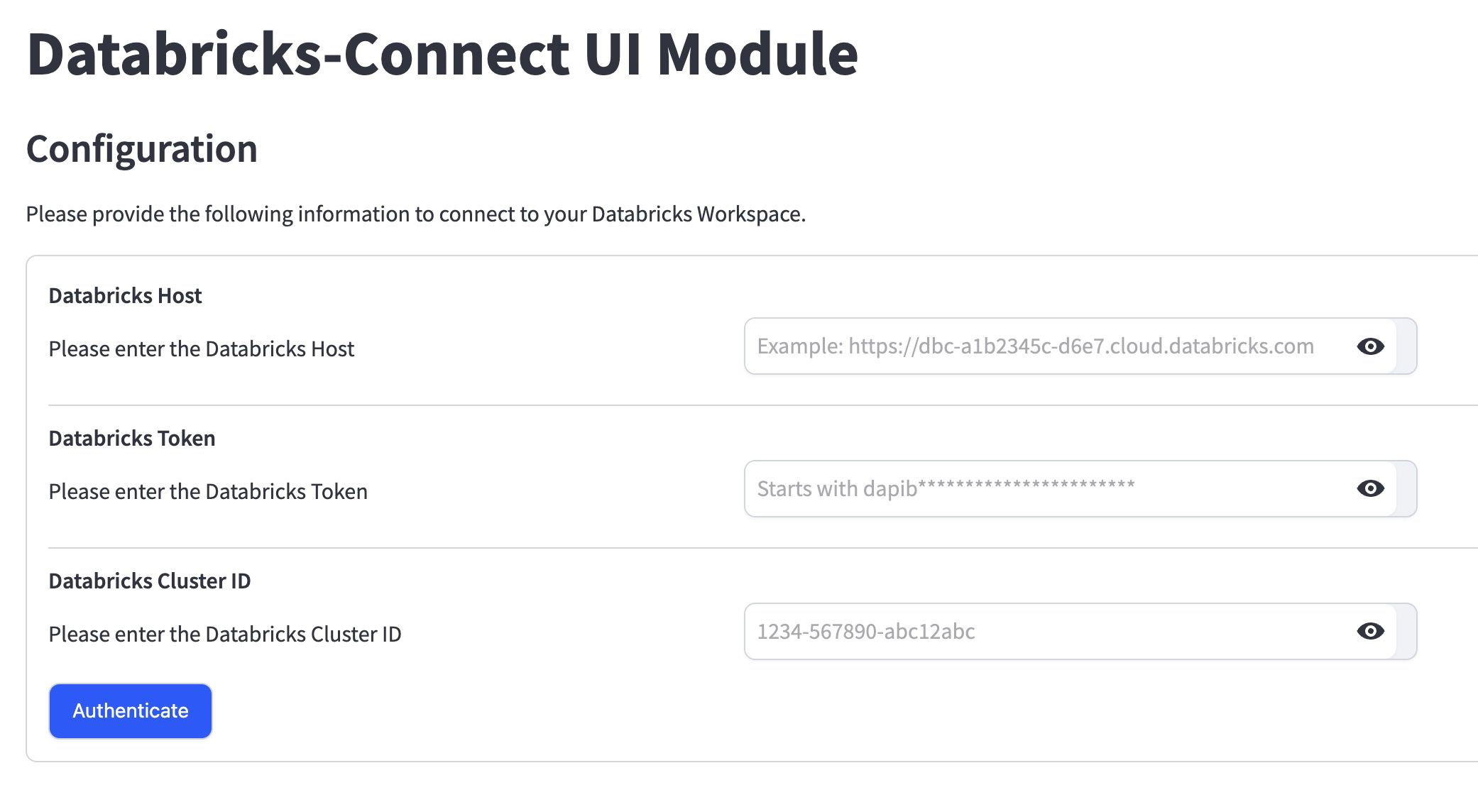

Introduced the Databricks-Connect UI module for integrating Clarifai with Databricks

You can use the module to:

- Authenticate a Databricks connection and connect with its compute clusters.

- Export data and annotations from a Clarifai app into a Databricks volume and table.

- Import data from a Databricks volume into a Clarifai app and dataset.

- Update annotation information within the chosen Delta table for the Clarifai app whenever annotations are updated.

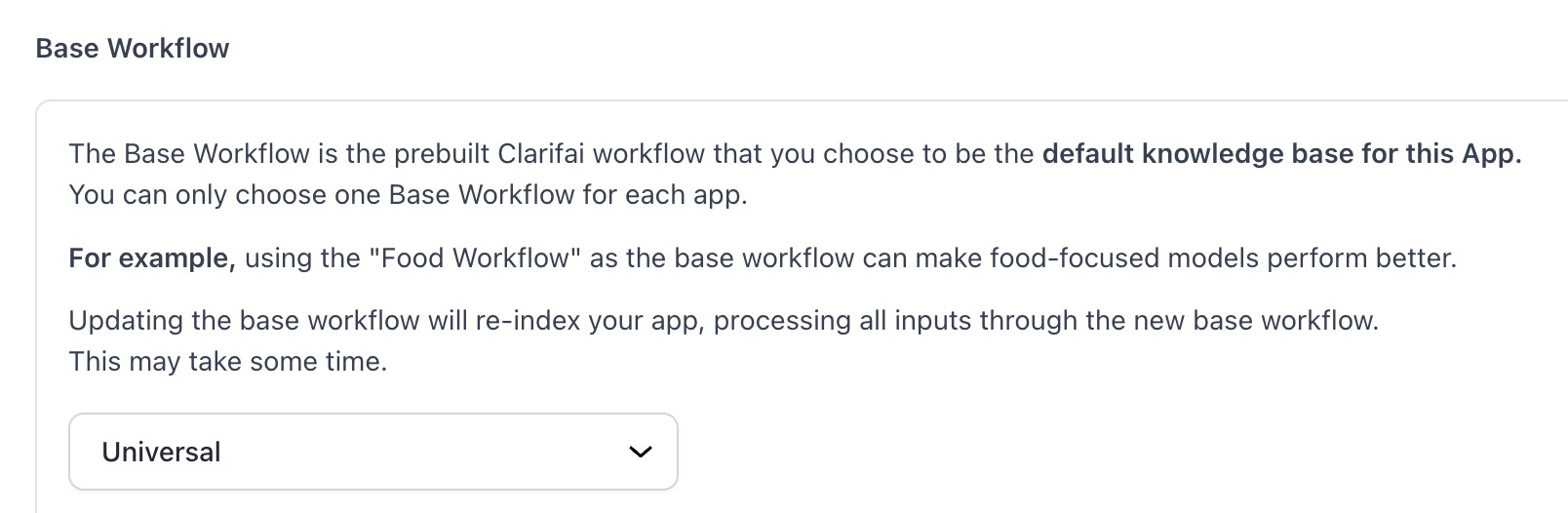

Changed the default base workflow of a default first application

- Previously, for new users who skipped the onboarding flow, a default first application was generated with “General” as the base workflow. We’ve replaced it with the “Universal” base workflow.

- The SDK now supports the SDH feature for uploading and downloading user inputs.

Added vLLM template for model upload to the SDK

- This additional template expands the range of available templates, giving users a versatile toolset for seamless deployment of models within their SDK environments.

Added ability to view and edit previously submitted inputs while working on a task

- We have added an input carousel to the labeler screen that lets users go back and review inputs after they’ve been submitted. This provides a convenient way to revisit and edit previously submitted labeled inputs.

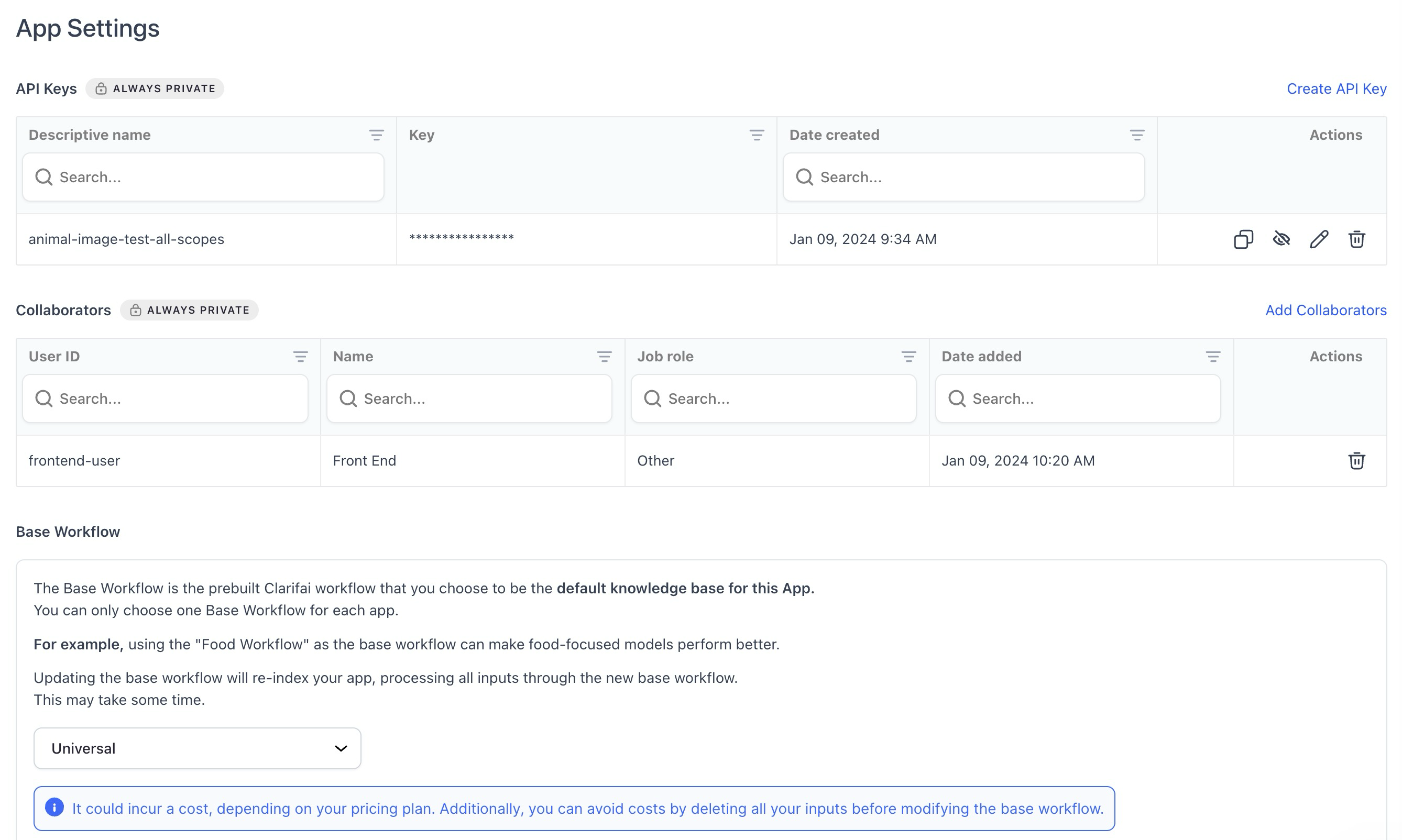

Made improvements to the App Settings page

- Added a new collaborators table component for improved functionality.

- Improved the styling of icons in tables to enhance visual clarity and user experience.

- Introduced an alert that appears whenever a user wants to make changes to a base workflow, as reindexing of inputs now happens automatically. The alert contains the necessary details about the reindexing process, the costs involved, its statuses, and potential errors.

Enhanced the inputs count display on the App Overview page

- The tooltip (?) now precisely indicates the available number of inputs in your app, presented in a comma-separated format for better readability, such as 4,567,890 instead of 4567890.

- The display now accommodates large numbers without wrapping issues.

- The suffix ‘K’ is only added to the count if the number exceeds 10,000.
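The two formatting rules above can be sketched in Python as follows. This is an illustration rather than the platform’s actual code: the function names are hypothetical, and the one-decimal rounding for the ‘K’ suffix is an assumption, since the notes only state the 10,000 threshold.

```python
def format_tooltip_count(count: int) -> str:
    """Exact count with thousands separators, as shown in the tooltip."""
    return f"{count:,}"


def format_display_count(count: int) -> str:
    """Abbreviated count; the 'K' suffix only applies above 10,000."""
    if count > 10_000:
        # Assumption: one decimal place; the notes do not specify rounding.
        return f"{count / 1000:.1f}K"
    return f"{count:,}"


print(format_tooltip_count(4567890))   # 4,567,890
print(format_display_count(10000))     # 10,000
print(format_display_count(12345))     # 12.3K
```

The sketch does not cover larger units such as millions, which the notes do not describe.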

Added a “Last Updated” date for resource alterations

- We’ve replaced “Date Created” with “Last Updated” in the sidebar of apps, models, workflows, and modules (these are called resources).

- The “Last Updated” date changes whenever a new resource version is created, a resource description is updated, or a resource’s markdown notes are updated.

Added a “No Starred Resources” screen for models/apps empty state

- We’ve introduced a dedicated screen that communicates the absence of any starred models or apps in the current filter when none are present.

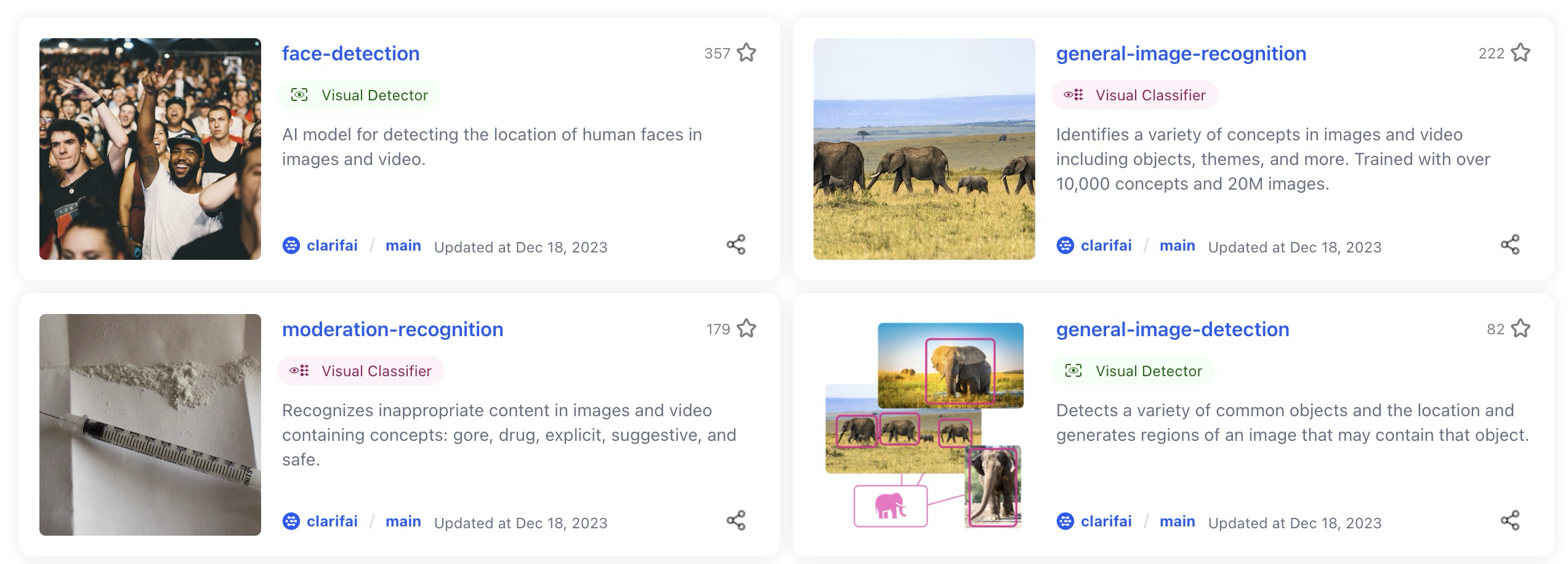

Enhanced the resource overview page with larger images where possible

- We now rehost large versions of resource cover images alongside small ones. Resource list views still use the small versions, while the overview page of an individual resource now uses the larger version, ensuring better image quality.

- However, if using a large-sized image is not possible, the previous behavior of using a small-sized image is applied as a fallback.

Enhanced image handling in listing view

- Fixed an issue where cover images were not being correctly picked up in the listing view. The listing view now accurately identifies and displays the cover image associated with each item.

Enhanced search queries by including dashes between text and numbers

- For instance, if the search query is “llama70b” or “gpt4,” we also consider “llama-70-b” or “gpt-4” in the search results. This provides a more comprehensive search experience.

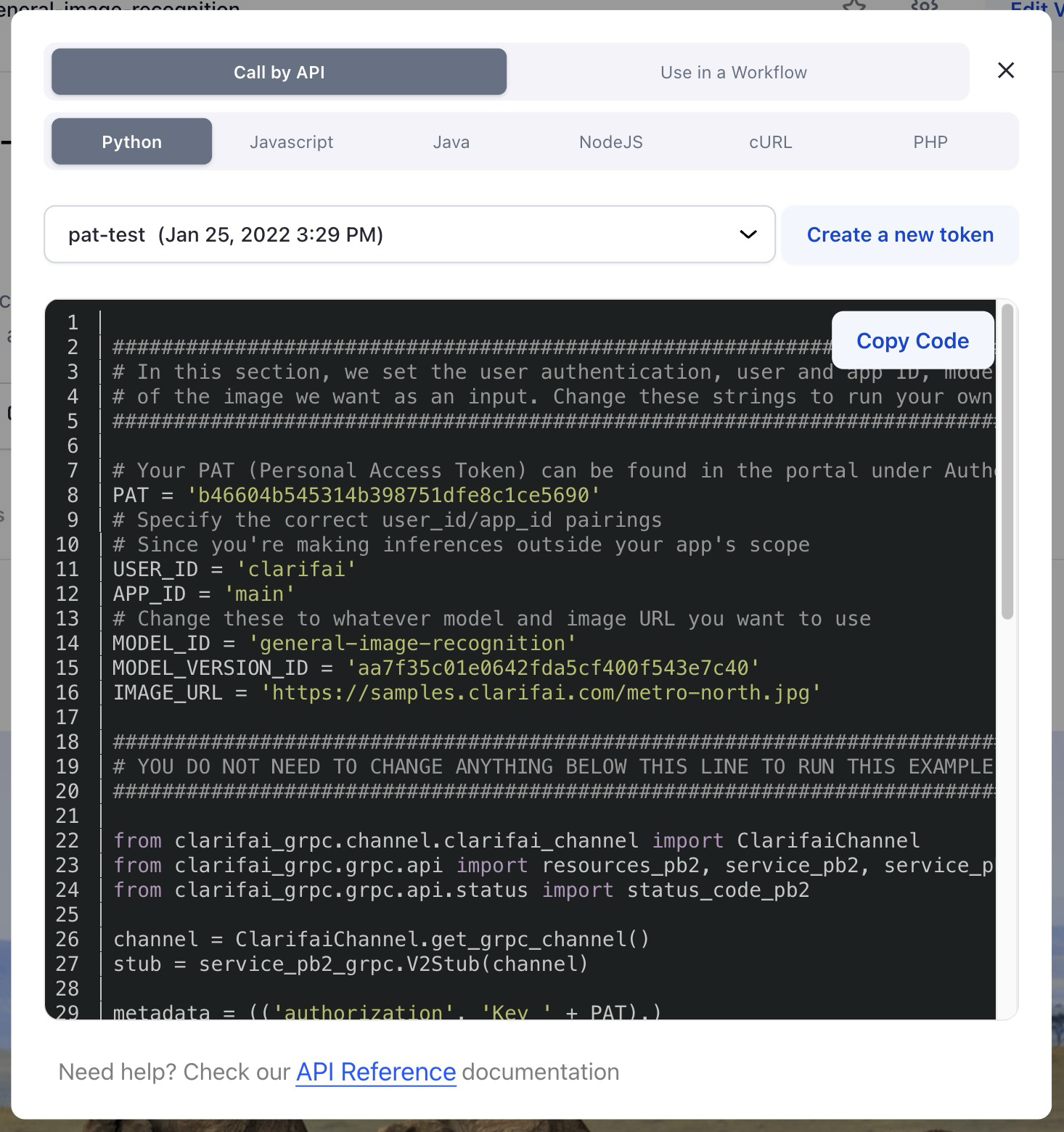
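One way to picture the dash expansion for search queries is the short Python sketch below. It only reproduces the two examples given above and is not the search implementation itself; the helper names `dashed_variant` and `expand_query` are hypothetical.

```python
import re

# Zero-width boundary between a letter and a digit, in either order.
_BOUNDARY = re.compile(r"(?<=[A-Za-z])(?=\d)|(?<=\d)(?=[A-Za-z])")


def dashed_variant(query: str) -> str:
    """Insert a dash wherever letters meet digits."""
    return _BOUNDARY.sub("-", query)


def expand_query(query: str) -> list[str]:
    """Return the original query plus its dashed variant, if different."""
    variant = dashed_variant(query)
    return [query] if variant == query else [query, variant]


print(expand_query("llama70b"))  # ['llama70b', 'llama-70-b']
print(expand_query("gpt4"))      # ['gpt4', 'gpt-4']
```

Searching for every string returned by `expand_query` would match resources named with or without dashes.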

Revamped code snippets presentation

- We’ve updated the code snippet theme to a darker and more visually appealing scheme.

- We’ve also improved the “copy code” functionality by wrapping it inside a button, ensuring better visibility.

Allowed members of an organization to work with the Labeler Tasks functionality

- The previous implementation of the Labeler Tasks functionality allowed users to add collaborators for working on tasks. However, this proved insufficient for Enterprise and Public Sector users of the Orgs/Teams feature, as it lacked the capability for team members to work on tasks associated with apps they had access to.

- We now allow admins, org contributors, and team contributors with app access to work with Labeler Tasks.

Disabled the “Please Verify Your Email” popup

- We deactivated the popup, as all accounts within on-premises deployments are already automatically verified. Additionally, email does not exist for on-premises deployments.