Overview

In this guide, we’ll:

- Understand the blueprint of any modern recommendation system

- Dive into a detailed analysis of each stage within the blueprint

- Discuss infrastructure challenges associated with each stage

- Cover special cases within the stages of the recommendation system blueprint

- Get introduced to some storage considerations for recommendation systems

- And finally, end with what the future holds for recommendation systems

Introduction

In a recent insightful talk at the Index conference, Nikhil, an expert in the field with a decade-long journey in machine learning and infrastructure, shared his valuable experiences and insights into recommendation systems. From his early days at Quora to leading projects at Facebook and his current venture at Fennel (a real-time feature store for ML), Nikhil has traversed the evolving landscape of machine learning engineering and machine learning infrastructure, specifically in the context of recommendation systems. This blog post distills his decade of experience into a comprehensive read, offering a detailed overview of the complexities and innovations at every stage of building a real-world recommender system.

Recommendation Systems at a high level

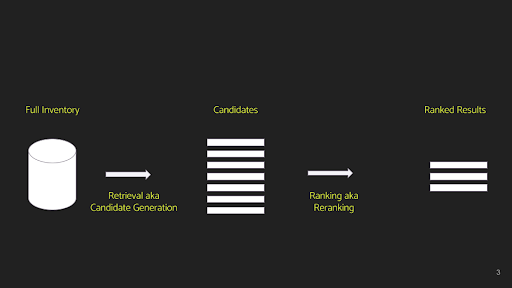

At an extremely high level, a typical recommender system starts simple and can be compartmentalized as follows:

Note: All slide content and related materials are credited to Nikhil Garg from Fennel.

Stage 1: Retrieval or candidate generation – The idea of this stage is that we typically go from millions or even trillions (at big-tech scale) of items to hundreds or a couple of thousand candidates.

Stage 2: Ranking – We rank these candidates using some heuristic to pick the top 10 to 50 items.

Note: The need for a candidate generation step before ranking arises because it’s impractical to run a scoring function, even a non-machine-learning one, on millions of items.

Recommendation System – A general blueprint

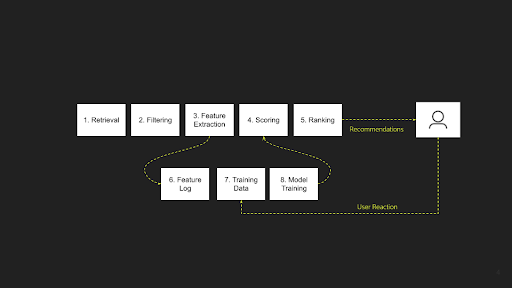

Drawing from his extensive experience working with a variety of recommendation systems in numerous contexts, Nikhil posits that all of them can be broadly categorized into the two main stages above. He further breaks a recommender system down into an 8-step process, as follows:

The retrieval or candidate generation stage is expanded into two steps: Retrieval and Filtering. The process of ranking the candidates is further developed into three distinct steps: Feature Extraction, Scoring, and Ranking. Additionally, there’s an offline component that underpins these stages, encompassing Feature Logging, Training Data Generation, and Model Training.

Let’s now delve into each stage, discussing them one by one to understand their functions and the typical challenges associated with each:

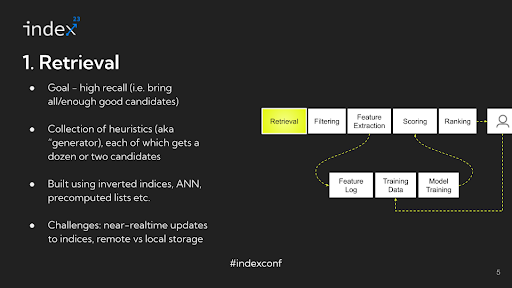

Step 1: Retrieval

Overview: The primary objective of this stage is to introduce quality inventory into the mix. The focus is on recall: ensuring that the pool includes a broad range of potentially relevant items. While some non-relevant or ‘junk’ content may also be included, the key goal is to avoid excluding any relevant candidates.

Detailed Analysis: The key challenge in this stage lies in narrowing down a vast inventory, potentially comprising a million items, to just a few thousand, all while ensuring that recall is preserved. This task might seem daunting at first, but it’s surprisingly manageable, especially in its basic form. For instance, consider a simple approach where you examine the content a user has interacted with, identify the authors of that content, and then select the top five pieces from each author. This method is an example of a heuristic designed to generate a set of potentially relevant candidates. Typically, a recommender system will employ dozens of such generators, ranging from simple heuristics to more sophisticated ones that involve machine learning models. Each generator typically yields a small group of candidates, about a dozen or so, and rarely more than a couple dozen. The final candidate pool is the union of these outputs, with each generator contributing a distinct type of inventory or content flavor. Combining a variety of these generators captures a diverse range of content types in the inventory, which is how recall is preserved.
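As a rough illustration of the generator-and-union idea, here is a minimal sketch. The `CONTENT` table, the two generator functions, and the popularity scores are all hypothetical stand-ins, not anything from the talk.

```python
from collections import defaultdict

# Hypothetical toy catalog: content_id -> (author_id, popularity score)
CONTENT = {
    "c1": ("alice", 9.0), "c2": ("alice", 7.5), "c3": ("alice", 1.0),
    "c4": ("bob", 8.0), "c5": ("bob", 2.0), "c6": ("carol", 6.0),
}

def top_by_interacted_authors(interacted_ids, per_author=5):
    """Generator 1: top pieces from authors the user has interacted with."""
    authors = {CONTENT[cid][0] for cid in interacted_ids}
    by_author = defaultdict(list)
    for cid, (author, score) in CONTENT.items():
        if author in authors:
            by_author[author].append((score, cid))
    picked = set()
    for items in by_author.values():
        picked.update(cid for _, cid in sorted(items, reverse=True)[:per_author])
    return picked

def globally_popular(k=2):
    """Generator 2: the k most popular items overall."""
    ranked = sorted(CONTENT, key=lambda cid: CONTENT[cid][1], reverse=True)
    return set(ranked[:k])

def retrieve(interacted_ids):
    """Union the output of every generator into one candidate pool."""
    return top_by_interacted_authors(interacted_ids, per_author=2) | globally_popular()

candidates = retrieve(["c3"])  # the user interacted with one of alice's posts
```

Each generator stays small and independent, so adding a new content flavor means adding one more function to the union.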

Infrastructure Challenges: The backbone of these systems frequently involves inverted indices. For example, you might associate a specific author ID with all the content they’ve created. At query time, this translates into fetching content for particular author IDs. Modern systems often extend this approach with nearest-neighbor lookups on embeddings. Additionally, some systems use pre-computed lists, such as those generated by data pipelines that identify the top 100 most popular content pieces globally, as another form of candidate generator.
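To make the inverted index concrete, here is a small in-memory sketch. The `AuthorIndex` class and its method names are illustrative assumptions; a production index would be sharded and persisted.

```python
from collections import defaultdict

class AuthorIndex:
    """Inverted index: author_id -> list of content_ids, newest last."""

    def __init__(self):
        self._postings = defaultdict(list)

    def add(self, author_id, content_id):
        # Write path: called as soon as new content is published,
        # so the item becomes retrievable right away.
        self._postings[author_id].append(content_id)

    def lookup(self, author_ids, limit_per_author=5):
        # Query path: fetch the most recent items for each author.
        out = []
        for author_id in author_ids:
            out.extend(self._postings.get(author_id, [])[-limit_per_author:])
        return out

index = AuthorIndex()
index.add("alice", "c1")
index.add("bob", "c2")
index.add("alice", "c3")
result = index.lookup(["alice"])
```

Because `add` mutates the same structure that `lookup` reads, new content is visible to the very next query, which is the property that is hard to keep at scale.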

For machine learning engineers and data scientists, the process entails devising and implementing various strategies to extract pertinent inventory using diverse heuristics or machine learning models. These strategies are then integrated into the infrastructure layer, forming the core of the retrieval process.

A primary challenge here is ensuring near real-time updates to these indices. Take Facebook as an example: when an author releases new content, the new content ID must promptly appear in the relevant user lists, and the viewer-author mapping needs to be updated at the same time. Although complex, achieving these real-time updates is essential for the system’s accuracy and timeliness.

Major Infrastructure Evolution: The industry has seen significant infrastructural changes over the past decade. About ten years ago, Facebook pioneered the use of local storage for content indexing in Newsfeed, a practice later adopted by Quora, LinkedIn, Pinterest, and others. In this model, the content was indexed on the machines responsible for ranking, and queries were sharded accordingly.

However, with the advancement of network technologies, there’s been a shift back to remote storage. Content indexing and data storage are increasingly handled by remote machines, with orchestrator machines issuing calls to these storage systems. This shift, which has occurred over recent years, marks a significant evolution in data storage and indexing approaches. Despite these advancements, the industry continues to face challenges, particularly around real-time indexing.

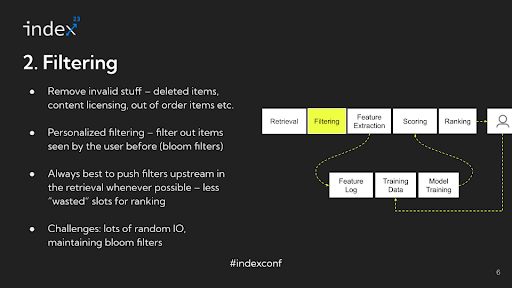

Step 2: Filtering

Overview: The filtering stage in recommendation systems aims to sift out invalid inventory from the pool of potential candidates. This process is not focused on personalization but rather on excluding items that are inherently unsuitable for consideration.

Detailed Analysis: To better understand the filtering process, consider specific examples across different platforms. In e-commerce, an out-of-stock item should not be displayed. On social media platforms, any content that has been deleted since it was last indexed must be removed from the pool. For media streaming services, videos lacking licensing rights in certain regions should be excluded. Typically, this stage might involve applying around 13 different filtering rules to each of the 3,000 candidates, a process that requires significant I/O, often random disk I/O, which makes it hard to run efficiently.

A key aspect of this process is personalized filtering, often using Bloom filters. For example, on platforms like TikTok, users are not shown videos they’ve already seen. This involves continuously updating Bloom filters with user interactions to filter out previously viewed content. As user interactions increase, so does the complexity of managing these filters.
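For readers who haven’t worked with one, a Bloom filter can be sketched in a few lines. This is a minimal illustration, assuming salted SHA-256 hashes and a fixed bit array; the sizes and the `video_*` IDs are made up, and real deployments would tune the bit count and hash count to the expected number of items.

```python
import hashlib

class BloomFilter:
    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, item):
        # Derive k bit positions by salting a SHA-256 hash with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item):
        # May return a false positive, never a false negative.
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))

seen = BloomFilter()
seen.add("video_1")
seen.add("video_2")
candidates = ["video_1", "video_2", "video_3"]
unseen = [v for v in candidates if v not in seen]
```

The trade-off is that a false positive occasionally hides a video the user has not actually seen, which is usually acceptable for a feed.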

Infrastructure Challenges: The primary infrastructure challenge lies in managing the size and efficiency of Bloom filters. They must be kept in memory for speed but can grow large over time, posing risks of data loss and management difficulties. Despite these challenges, the filtering stage is typically seen as one of the more manageable parts of a recommendation system.

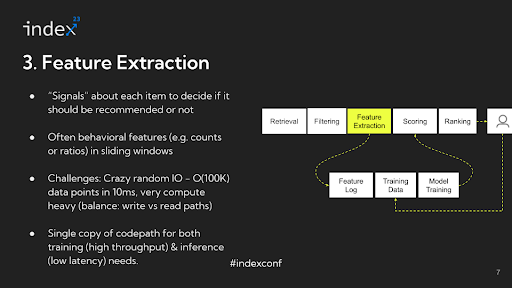

Step 3: Feature extraction

After identifying suitable candidates and filtering out invalid inventory, the next critical stage in a recommendation system is feature extraction. This phase involves gathering all the features and signals that will be used for ranking. These features and signals determine how content is prioritized and presented to the user within the recommendation feed, so this stage is crucial in ensuring that the most pertinent content rises in the ranking.

Detailed analysis: In the feature extraction stage, the extracted features are typically behavioral, reflecting user interactions and preferences. A common example is the number of times a user has viewed, clicked on, or purchased something, broken down by specific attributes such as the content’s author, topic, or category within a certain timeframe.

For instance, a typical feature might be the number of times a user clicked on videos created by female publishers aged 18 to 24 over the past 14 days. This feature captures not only the content’s attributes, like the age and gender of the publisher, but also the user’s interactions within a defined period. Sophisticated recommendation systems might employ hundreds or even thousands of such features, each contributing to a more nuanced and personalized user experience.
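The feature described above can be sketched as a windowed count over a click log. The log layout, the `VIDEO_ATTRS` table, and the `click_count` function are hypothetical; a real system would read pre-aggregated counters rather than scan raw events.

```python
from datetime import datetime, timedelta

# Hypothetical click log: (user_id, video_id, timestamp)
CLICKS = [
    ("u1", "v1", datetime(2024, 1, 1)),
    ("u1", "v2", datetime(2024, 1, 10)),
    ("u1", "v3", datetime(2024, 1, 12)),
    ("u2", "v1", datetime(2024, 1, 12)),
]

# Hypothetical publisher attributes per video
VIDEO_ATTRS = {
    "v1": {"gender": "female", "age_bucket": "18-24"},
    "v2": {"gender": "female", "age_bucket": "18-24"},
    "v3": {"gender": "male", "age_bucket": "25-34"},
}

def click_count(user_id, gender, age_bucket, now, window_days=14):
    """Clicks by `user_id` on videos from matching publishers in the window."""
    cutoff = now - timedelta(days=window_days)
    return sum(
        1
        for uid, vid, ts in CLICKS
        if uid == user_id
        and cutoff <= ts <= now
        and VIDEO_ATTRS[vid]["gender"] == gender
        and VIDEO_ATTRS[vid]["age_bucket"] == age_bucket
    )

feature = click_count("u1", "female", "18-24", now=datetime(2024, 1, 14))
```

Note that the value depends on `now`: the same user and attribute combination yields a different number a week later, which is why the extracted value has to be logged at inference time.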

Infrastructure challenges: Feature extraction is considered the most challenging stage of a recommendation system from an infrastructure perspective. The primary reason is the extensive data I/O (input/output) involved. For instance, suppose you have thousands of candidates after filtering and thousands of features in the system. This results in a matrix with potentially millions of data points. Each of these data points involves looking up pre-computed quantities, such as how many times a specific event has occurred for a particular combination. These lookups are mostly random access, and the data points need to be continually updated to reflect the latest events.

For example, if a user watches a video, the system needs to update several counters associated with that interaction. This requirement leads to a storage system that must support very high write throughput and even higher read throughput. Moreover, the system is latency-bound, often needing to process these millions of data points within tens of milliseconds.

Additionally, this stage requires significant computational power. Some of this computation occurs on the data ingestion (write) path, and some on the data retrieval (read) path. In most recommendation systems, the bulk of the compute is split between feature extraction and model serving, with model inference being the other major consumer. This combination of high data throughput and heavy computation makes the feature extraction stage particularly intensive.

There are even deeper challenges associated with feature extraction, particularly around balancing latency and throughput requirements. While low latency is paramount during live serving of recommendations, the same feature extraction code path must also handle batch processing to generate training data with millions of examples. In that scenario, the problem becomes throughput-bound and less sensitive to latency, in contrast with the real-time serving requirements.

To address this dichotomy, the typical approach involves adapting the same code for different purposes. The code is compiled or configured one way for batch processing, optimizing for throughput, and another way for real-time serving, optimizing for low latency. Achieving this dual optimization can be very challenging because the two modes of operation have such different requirements.

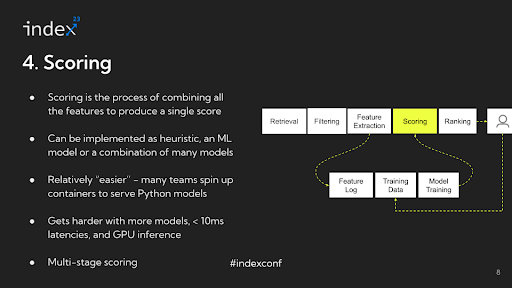

Step 4: Scoring

Once you have identified all the signals for all the candidates, you somehow need to combine them and convert them into a single number per candidate. This is called scoring.

Detailed analysis: The scoring methodology can vary significantly depending on the application. For example, the score for the first item might be 0.7, for the second item 3.1, and for the third item -0.1. The way scoring is implemented can range from simple heuristics to complex machine learning models.

An illustrative example is the evolution of the feed at Quora. Initially, the Quora feed was chronologically sorted, meaning the score was simply the timestamp of content creation. In this case, no complex steps were needed, and items were sorted in descending order of creation time. Later, the Quora feed evolved to use a ratio of upvotes to downvotes, with some modifications, as its scoring function.

This example highlights that scoring does not always involve machine learning. However, in more mature or sophisticated settings, scoring often comes from machine learning models, sometimes a combination of several. It’s common to use a diverse set of machine learning models, possibly half a dozen to a dozen, each contributing to the final score in a different way. This diversity in scoring methods allows for a more nuanced and tailored approach to ranking content.
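The two non-ML scoring functions mentioned above might look roughly like this. The field names and the `prior` smoothing term are assumptions for illustration; the talk does not specify which modifications Quora actually applied to the ratio.

```python
def chronological_score(item):
    # The score is simply the creation time: newer items score higher.
    return item["created_at"]

def vote_ratio_score(item, prior=1.0):
    # Ratio of upvotes to downvotes. `prior` is an assumed smoothing term
    # that avoids division by zero and damps items with very few votes.
    return (item["upvotes"] + prior) / (item["downvotes"] + prior)

items = [
    {"id": "a", "created_at": 100, "upvotes": 3, "downvotes": 0},
    {"id": "b", "created_at": 200, "upvotes": 1, "downvotes": 1},
    {"id": "c", "created_at": 150, "upvotes": 9, "downvotes": 1},
]

by_time = [i["id"] for i in sorted(items, key=chronological_score, reverse=True)]
by_votes = [i["id"] for i in sorted(items, key=vote_ratio_score, reverse=True)]
```

Swapping the `key` function is all it takes to change the feed’s behavior, which is why scoring is a natural seam for later introducing a learned model.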

Infrastructure challenges: The infrastructure side of scoring has evolved significantly and is much easier than it was 5 to 6 years ago. Previously a major challenge, the scoring process has been simplified by advances in technology and methodology. Nowadays, a common approach is to take a Python-based model, such as XGBoost, run it inside a container, and host it as a service behind FastAPI. This method is straightforward and sufficiently effective for most applications.

However, the scenario becomes more complex when dealing with multiple models, tighter latency requirements, or deep learning workloads that require GPU inference. Another interesting aspect is the multi-staged nature of ranking in recommendation systems. Different stages often require different models. For instance, in the earlier stages of the process, where there are more candidates to consider, lighter models are typically used. As the process narrows down to a smaller set of candidates, say around 200, more computationally expensive models are employed. Managing these varying requirements and balancing the trade-offs between different types of models, especially in terms of compute cost and latency, becomes a crucial part of the recommendation system infrastructure.
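A multi-stage ranker can be sketched as two passes with different scoring functions. Both scoring functions below are placeholder stand-ins for real models, and the feature names, weights, and cut-offs are hypothetical.

```python
def cheap_score(candidate):
    # Stand-in for a lightweight model: a single feature, negligible compute.
    return candidate["ctr"]

def expensive_score(candidate):
    # Stand-in for a heavier model that looks at more features.
    return 0.7 * candidate["ctr"] + 0.3 * candidate["freshness"]

def multi_stage_rank(candidates, keep=200, final_k=10):
    # Stage 1: score everything with the cheap model and keep a shortlist.
    shortlist = sorted(candidates, key=cheap_score, reverse=True)[:keep]
    # Stage 2: spend the expensive model only on the shortlist.
    return sorted(shortlist, key=expensive_score, reverse=True)[:final_k]

candidates = [
    {"id": i, "ctr": i / 1000, "freshness": (i * 37 % 100) / 100}
    for i in range(1000)
]
top = multi_stage_rank(candidates)
```

The expensive function runs 200 times instead of 1,000 here; the cost is that an item the cheap model underrates never reaches the second stage.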

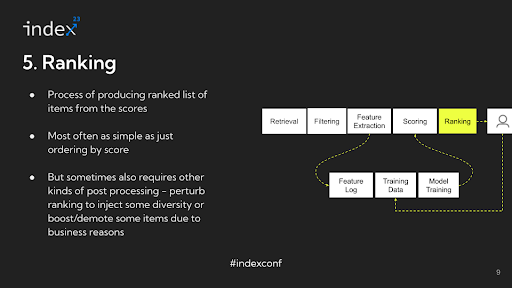

Step 5: Ranking

Following the computation of scores, the final step in the recommendation system is ordering or sorting the items. While often referred to as ‘ranking’, this stage may be more accurately termed ‘ordering’, as it primarily involves sorting the items based on their computed scores.

Detailed analysis: In its basic form, this sorting process is straightforward: the items are simply arranged in descending order of their scores, so that the sequence reflects their relevance or importance.

In sophisticated recommendation systems, however, there’s more to it than ordering items by score. For example, if a user on TikTok sees videos from the same creator one after another, it might lead to a less enjoyable experience, even if those videos are individually relevant. To address this, these systems often adjust or ‘perturb’ the scores to improve qualities like diversity in the user’s feed. This perturbation is part of a post-processing stage where the initial score-based ordering is modified to maintain other desirable qualities, like variety or freshness, in the recommendations. After this ordering and adjustment process, the results are presented to the user.
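One simple way to perturb scores for diversity is a greedy re-rank that damps an item’s score when its creator matches the item placed just before it. This is only one of many possible schemes, not necessarily what any particular platform does; the `penalty` value is arbitrary and scores are assumed to be positive.

```python
def diversify(ranked, penalty=0.5):
    """Greedy re-rank that discourages back-to-back items from one creator."""
    remaining = list(ranked)
    result = []
    while remaining:
        last_creator = result[-1]["creator"] if result else None

        def adjusted(item):
            # Damp the score only if this item would repeat the last creator.
            factor = penalty if item["creator"] == last_creator else 1.0
            return item["score"] * factor

        best = max(remaining, key=adjusted)
        remaining.remove(best)
        result.append(best)
    return result

ranked = [
    {"id": "v1", "creator": "a", "score": 0.9},
    {"id": "v2", "creator": "a", "score": 0.8},
    {"id": "v3", "creator": "b", "score": 0.7},
]
feed = [item["id"] for item in diversify(ranked)]
```

With `penalty=1.0` the function reduces to a plain sort by score, so the strength of the diversity adjustment is a single tunable knob.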

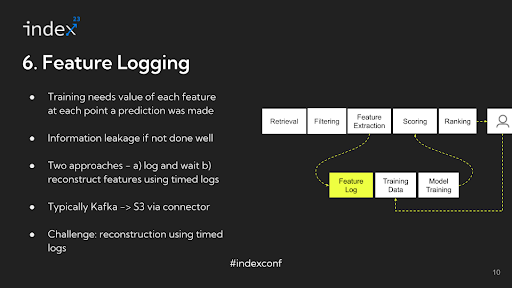

Step 6: Feature logging

Because the features extracted at serving time are later used to train the model, it’s crucial to log them accurately. The values produced during feature extraction are typically logged to systems like Apache Kafka. This logging step is vital for the model training process that occurs later.

For instance, if you plan to train your model 15 days after data collection, you need the data to reflect the state of user interactions at the time of inference, not at the time of training. In other words, if you’re looking at the number of impressions a user had on a particular video, you need to know this number as it was when the recommendation was made, not as it is 15 days later. This approach ensures that the training data accurately represents the user’s experience and interactions at the relevant moment.

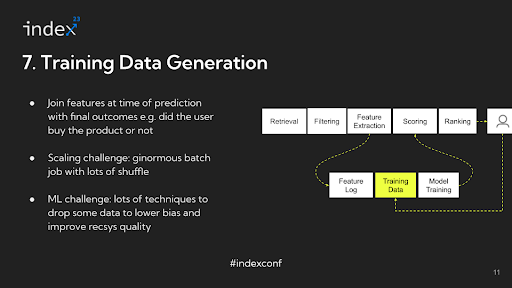

Step 7: Training Data

To facilitate this, a common practice is to log all the extracted data, freeze it in its current state, and then perform joins on this data at a later time when preparing it for model training. This method allows for an accurate reconstruction of the user’s interaction state at the time of each inference, providing a reliable basis for training the recommendation model.

The required history can be long. For instance, Airbnb might need to consider a year’s worth of data due to seasonality, unlike a platform like Facebook, which might look at a shorter window. This necessitates maintaining extensive logs, which can be challenging and can slow down feature development, since a newly added feature has no logged history. In such scenarios, features might instead be reconstructed by traversing a log of raw events at the time of training data generation.

The process of generating training data involves a massive join operation at scale, combining the logged features with actual user actions like clicks or views. This step can be data-intensive and requires efficient handling to manage the data shuffle involved.
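At toy scale, the join between logged features and later user actions looks like the sketch below. The `(request_id, item_id)` key and the field names are assumptions; at production scale the same join would run in a distributed engine and shuffle data by that key.

```python
# Features logged at inference time, keyed by (request_id, item_id).
logged_features = {
    ("r1", "v1"): {"impressions_so_far": 3},
    ("r1", "v2"): {"impressions_so_far": 0},
    ("r2", "v1"): {"impressions_so_far": 4},
}

# User actions that arrived later, keyed the same way.
clicks = {("r1", "v2"), ("r2", "v1")}

def build_training_rows(features, positives):
    """Attach a binary label to each logged feature row."""
    rows = []
    for key, feats in sorted(features.items()):
        rows.append({**feats, "label": 1 if key in positives else 0})
    return rows

training_rows = build_training_rows(logged_features, clicks)
```

The feature values come from the frozen log, not from current state, so the row for request `r1` still shows the impression count as it was when `r1` was served.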

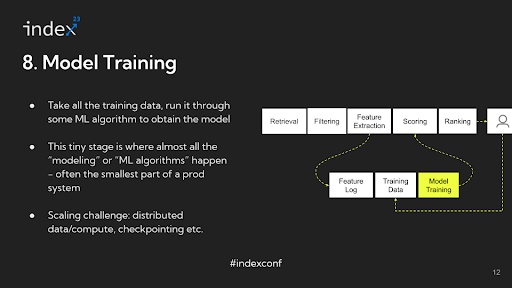

Step 8: Model Training

Finally, once the training data is prepared, the model is trained, and its output is then used for scoring in the recommendation system. Interestingly, across the entire pipeline of a recommendation system, actual model training might constitute only a small portion of an ML engineer’s time, with the majority spent on data and infrastructure-related tasks.

Infrastructure challenges: For larger-scale operations where there is a significant amount of data, distributed training becomes necessary. In some cases, the models are so large (literally terabytes in size) that they cannot fit into the RAM of a single machine. This necessitates a distributed approach, like using a parameter server to manage different segments of the model across multiple machines.

Another critical aspect in such scenarios is checkpointing. Given that training these large models can take a long time, sometimes 24 hours or more, the risk of job failures must be mitigated. If a job fails, it’s important to resume from the last checkpoint rather than starting over from scratch. Implementing effective checkpointing strategies is essential to manage these risks and ensure efficient use of computational resources.

However, these infrastructure and scaling challenges are more relevant for large-scale operations like those at Facebook, Pinterest, or Airbnb. In smaller-scale settings, where the data and model complexity are relatively modest, the entire system might fit on a single machine (‘single box’). In such cases, the infrastructure demands are significantly less daunting, and the complexities of distributed training and checkpointing may not apply.

Overall, this delineation highlights how the infrastructure requirements and challenges of building recommendation systems vary with the scale and complexity of the operation. The ‘blueprint’ for constructing these systems, therefore, needs to be adaptable to these differing scales and complexities.
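The resume-from-checkpoint pattern can be sketched with a toy training loop. Everything here is a simplified assumption: the "model" is a single number, the `fail_at` parameter exists only to simulate a crash, and real frameworks ship their own checkpoint utilities.

```python
import json
import os
import tempfile

def train(total_steps, ckpt_path, every=10, fail_at=None):
    # Resume from the last checkpoint if one exists.
    state = {"step": 0, "weight": 0.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated job failure")
        state["weight"] += 0.1  # stand-in for a real gradient update
        state["step"] += 1
        if state["step"] % every == 0:
            # Write to a temp file, then rename, so a crash mid-write
            # never leaves a half-written checkpoint behind.
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, ckpt_path)
    return state

ckpt = os.path.join(tempfile.mkdtemp(), "model.ckpt")
try:
    train(100, ckpt, fail_at=35)  # first attempt dies at step 35
except RuntimeError:
    pass
with open(ckpt) as f:
    resumed_from = json.load(f)["step"]
final = train(100, ckpt)  # second attempt picks up where the checkpoint left off
```

The checkpoint interval is the trade-off: a failed job loses at most `every` steps of work, at the price of more frequent writes.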

Special Cases of Recommendation System Blueprint

In the context of recommendation systems, various approaches can be taken, each fitting into the broader blueprint but with certain stages either omitted or simplified.

Let’s look at a few examples to illustrate this:

Chronological Sorting: In a very basic recommendation system, the content might be sorted chronologically. This approach involves minimal complexity, as there’s essentially no retrieval or feature extraction stage beyond using the time at which the content was created. The score in this case is simply the timestamp, and the sorting is based on this single feature.

Handcrafted Features with Weighted Averages: Another approach involves some retrieval and the use of a limited set of handcrafted features, maybe around 10. Instead of using a machine learning model for scoring, a weighted average calculated through a hand-tuned formula is used. This method represents an early stage in the evolution of ranking systems.

Sorting Based on Popularity: A more specific approach focuses on the most popular content. This could involve a single generator, likely an offline pipeline, that computes the most popular content based on metrics like the number of likes or upvotes. The sorting is then based on these popularity metrics.

Online Collaborative Filtering: Previously considered state-of-the-art, online collaborative filtering involves a single generator that performs an embedding lookup on a trained model. In this case, there’s no separate feature extraction or scoring stage; it’s all about retrieval based on model-generated embeddings.

Batch Collaborative Filtering: Similar to online collaborative filtering, batch collaborative filtering uses the same approach but in a batch processing context.

These examples illustrate that regardless of the specific architecture or approach of a recommendation system, they are all variations of a fundamental blueprint. In simpler systems, certain stages like feature extraction and scoring may be omitted or greatly simplified. As systems grow more sophisticated, they tend to incorporate more stages of the blueprint, eventually filling out the entire template of a complex recommendation system.
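To show how a special case collapses the blueprint, here is a sketch of the collaborative filtering case, where the whole pipeline is a single embedding lookup. The embeddings are made-up two-dimensional vectors standing in for a trained model’s output, and the brute-force search stands in for an approximate nearest-neighbor index.

```python
import math

# Hypothetical embeddings from a trained collaborative filtering model.
USER_EMB = {"u1": [1.0, 0.0]}
ITEM_EMB = {"i1": [0.9, 0.1], "i2": [0.0, 1.0], "i3": [0.7, 0.7]}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def recommend(user_id, k=2):
    # Retrieval, scoring, and ordering all happen in this one lookup:
    # the similarity is the score and the sort is the ranking.
    user_vec = USER_EMB[user_id]
    ranked = sorted(ITEM_EMB, key=lambda i: cosine(user_vec, ITEM_EMB[i]), reverse=True)
    return ranked[:k]

recs = recommend("u1")
```

Running `recommend` at request time corresponds to the online variant; calling it for every user in a nightly job and storing the results corresponds to the batch variant.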

Bonus Section: Storage considerations

Although we have completed our blueprint, along with its special cases, storage considerations still form an important part of any modern recommendation system, so it’s worthwhile to pay some attention to them.

In recommendation systems, Key-Value (KV) stores play a pivotal role, especially in feature serving. These stores must sustain extremely high write throughput. For instance, on platforms like Facebook, TikTok, or Quora, thousands of writes can occur in response to user interactions. Even more demanding is the read throughput. For a single user request, features for potentially thousands of candidates are extracted, even though only a fraction of these candidates will be shown to the user. This results in read throughput being orders of magnitude larger than write throughput, often 100 times more. Achieving single-digit millisecond latency (P99) under such conditions is a challenging task.

The writes in these systems are typically read-modify-writes, which are more complex than simple appends. At smaller scales, it’s feasible to keep everything in RAM using solutions like Redis or in-memory dictionaries, but this can be costly. As scale and cost increase, data needs to be stored on disk. Log-Structured Merge-tree (LSM) databases are commonly used for their ability to sustain high write throughput while providing low-latency lookups. RocksDB, for example, was initially used in Facebook’s feed and is a popular choice in such applications. Fennel uses RocksDB for the storage and serving of feature data. Rockset, a search and analytics database, also uses RocksDB as its underlying storage engine. Other LSM-based databases like ScyllaDB are also gaining popularity.
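The difference between a read-modify-write and an append shows up even in an in-memory toy. The `CounterStore` class below is a hypothetical stand-in for a KV store holding feature counters; the key layout is invented for illustration.

```python
import threading
from collections import defaultdict

class CounterStore:
    """In-memory stand-in for a KV store holding feature counters."""

    def __init__(self):
        self._data = defaultdict(int)
        self._lock = threading.Lock()

    def increment(self, key, delta=1):
        # Read-modify-write: the new value depends on the stored one,
        # so the read and the write must happen atomically.
        with self._lock:
            current = self._data[key]
            self._data[key] = current + delta

    def multi_get(self, keys):
        # Read path: one request fans out into many point lookups.
        with self._lock:
            return [self._data.get(k, 0) for k in keys]

store = CounterStore()
for _ in range(3):
    store.increment(("u1", "author:alice", "clicks"))
values = store.multi_get([
    ("u1", "author:alice", "clicks"),
    ("u1", "author:bob", "clicks"),
])
```

A single lock is fine for a sketch, but it serializes every operation; that contention is part of why real stores need more careful designs to hit both the write and read throughput described above.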

As the amount of data being produced continues to grow, even disk storage is becoming costly. This has led to the adoption of S3 tiering as a vital solution for managing data volumes in the petabytes or more. S3 tiering also makes it possible to separate write and read CPUs, ensuring that ingestion and compaction do not consume CPU resources needed for serving online queries. In addition, systems have to manage periodic backups and snapshots and ensure exactly-once semantics for stream processing, further complicating the storage requirements. Local state management, often using solutions like RocksDB, becomes increasingly challenging as the scale and complexity of these systems grow, presenting numerous intriguing storage problems for those delving deeper into this space.

What does the future hold for recommendation systems?

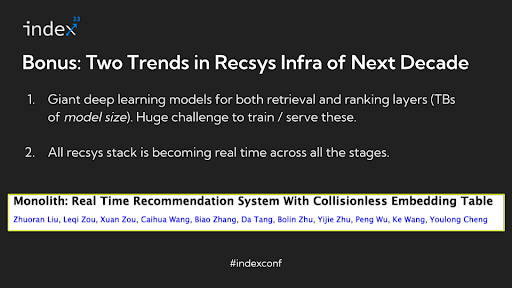

In discussing the future of recommendation systems, Nikhil highlights two significant emerging trends that are converging to create a transformative impact on the industry.

Extremely Large Deep Learning Models: There’s a trend towards using deep learning models that are incredibly large, with parameter spaces in the range of terabytes. These models are so large that they cannot fit in the RAM of a single machine and are impractical to store on disk. Training and serving such massive models present considerable challenges. Manual sharding of these models across GPU cards and other complex techniques are currently being explored to manage them. Although these approaches are still evolving and the field is largely uncharted, libraries like PyTorch are developing tools to assist with these challenges.

Real-Time Recommendation Systems: The industry is shifting away from batch-processed recommendation systems to real-time systems. This shift is driven by the realization that real-time processing leads to significant improvements in key production metrics such as user engagement and gross merchandise value (GMV) for e-commerce platforms. Real-time systems are not only more effective in enhancing user experience but are also easier to manage and debug than batch-processed systems. They also tend to be more cost-effective in the long run, as computations are performed on demand rather than by pre-computing recommendations for every user, many of whom may not even engage with the platform daily.

A notable example of the intersection of these trends is TikTok’s approach, where they have developed a system that combines very large embedding models with real-time processing. From the moment a user watches a video, the system updates the embeddings and serves recommendations in real time. This approach exemplifies the innovative directions in which recommendation systems are heading, leveraging both the power of large-scale deep learning models and the immediacy of real-time data processing.

These developments suggest a future where recommendation systems are not only more accurate and responsive to user behavior but also more complex in terms of the technological infrastructure required to support them. This intersection of large-model capabilities and real-time processing is poised to be a significant area of innovation and growth in the field.

Interested in exploring more?

- Explore Fennel’s real-time feature store for machine learning

For an in-depth understanding of how a real-time feature store can enhance machine learning capabilities, consider exploring Fennel. Fennel offers solutions tailored for modern recommendation systems. Visit Fennel or read the Fennel Docs.

- Find out more about the Rockset search and analytics database

Learn how Rockset serves many recommendation use cases through its performance, real-time update capability, and vector search functionality. Read more about Rockset or try Rockset for free.