In a recent engagement, we were tasked with designing how we could replace a Mainframe system with a cloud native application, building a roadmap and a business case to secure funding for the multi-year modernisation effort required. We were wary of the risks and potential pitfalls of a Big Design Up Front, so we advised our client to work on a ‘just enough, and just in time’ upfront design, with engineering during the first phase. Our client liked our approach and selected us as their partner.

The system was built for a UK-based client’s Data Platform and customer-facing products. This was a very complex and challenging task given the size of the Mainframe, which had been built over 40 years with a variety of technologies that have changed significantly since they were first released.

Our approach is based on incrementally moving capabilities from the mainframe to the cloud, allowing a gradual legacy displacement rather than a “Big Bang” cutover. To do this, we needed to identify places in the mainframe design where we could create seams: places where we can insert new behaviour with the smallest possible changes to the mainframe’s code. We can then use these seams to create duplicate capabilities on the cloud, dual run them with the mainframe to verify their behaviour, and then retire the mainframe capability.
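To make the seam lifecycle concrete, here is a minimal Python sketch, assuming a capability can be invoked through a single function call. The names `Stage` and `CapabilitySeam` are hypothetical; on the programme, seams sat at file, database and pipeline boundaries in the mainframe rather than in application code. The sketch moves a capability through three stages: mainframe only, dual run (the mainframe still answers while the cloud output is compared), and cloud only once the mainframe capability is retired.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Tuple


class Stage(Enum):
    LEGACY_ONLY = "legacy_only"  # mainframe serves all requests
    DUAL_RUN = "dual_run"        # both run; the mainframe result is still returned
    CLOUD_ONLY = "cloud_only"    # mainframe capability retired


@dataclass
class CapabilitySeam:
    """Hypothetical seam: the single place where new behaviour is inserted."""
    legacy: Callable[[dict], dict]
    cloud: Callable[[dict], dict]
    stage: Stage = Stage.LEGACY_ONLY
    mismatches: List[Tuple[dict, dict, dict]] = field(default_factory=list)

    def handle(self, request: dict) -> dict:
        if self.stage is Stage.CLOUD_ONLY:
            return self.cloud(request)
        legacy_result = self.legacy(request)
        if self.stage is Stage.DUAL_RUN:
            cloud_result = self.cloud(request)
            if cloud_result != legacy_result:
                # Record the discrepancy for investigation; callers are unaffected.
                self.mismatches.append((request, legacy_result, cloud_result))
        return legacy_result
```

Keeping the mainframe result authoritative during dual run means a faulty cloud implementation surfaces as recorded mismatches rather than as customer-visible errors.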

Thoughtworks was involved for the first year of the programme, after which we handed our work over to our client to take it forward. In that timeframe we did not put our work into production; however, we trialled multiple approaches that can help you get started more quickly and ease your own Mainframe modernisation journey. This article provides an overview of the context in which we worked, and outlines the approach we followed for incrementally moving capabilities off the Mainframe.

Contextual Background

The Mainframe hosted a diverse range of services crucial to the client’s business operations. Our programme focused specifically on the data platform designed for insights on Consumers in UK&I (United Kingdom & Ireland). This particular subsystem on the Mainframe comprised approximately 7 million lines of code, developed over a span of 40 years. It provided roughly 50% of the capabilities of the UK&I estate, but accounted for about 80% of MIPS (Million Instructions Per Second) from a runtime perspective. The system was significantly complex, and the complexity was further exacerbated by domain responsibilities and concerns spread across multiple layers of the legacy environment.

Several reasons drove the client’s decision to transition away from the Mainframe environment:

- Changes to the system were slow and expensive. The business therefore struggled to keep pace with a rapidly evolving market, which held back innovation.
- Operational costs associated with running the Mainframe system were high; the client faced a commercial risk from an imminent price increase by a core software vendor.
- Whilst our client had the necessary skill sets for running the Mainframe, it had proven hard to find new professionals with expertise in this tech stack, as the pool of skilled engineers in this domain is limited. Furthermore, the job market does not offer as many opportunities for Mainframe work, so people are not incentivised to learn how to develop and operate Mainframes.

High-level view of Consumer Subsystem

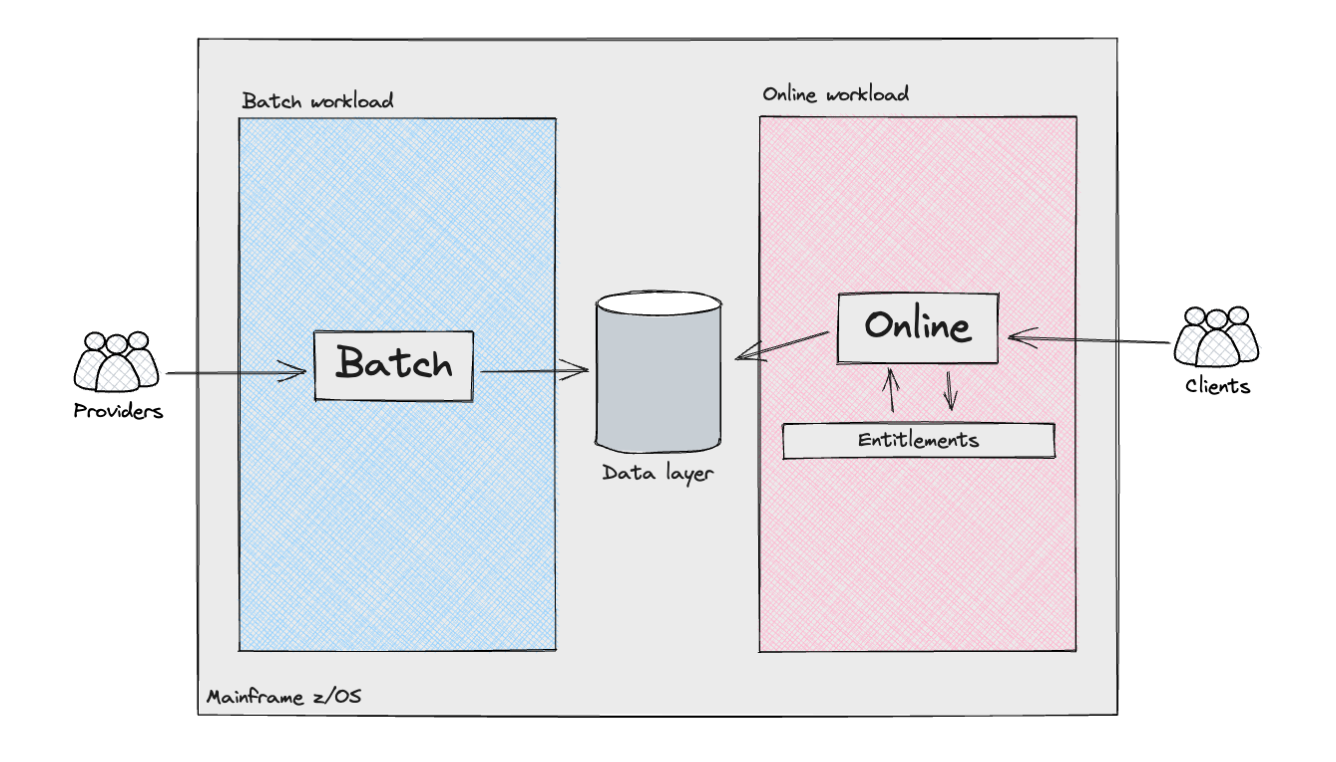

The diagram above shows, from a high-level perspective, the various components and actors in the Consumer subsystem.

The Mainframe supported two distinct types of workload: batch processing and, for the product API layers, online transactions. The batch workloads resembled what is commonly referred to as a data pipeline. They involved ingesting semi-structured data from external providers/sources, or from other internal Mainframe systems, followed by data cleansing and modelling to align with the requirements of the Consumer Subsystem. These pipelines incorporated various complexities, including the implementation of the Identity searching logic: in the United Kingdom, unlike the United States with its social security number, there is no universally unique identifier for residents. Consequently, companies operating in the UK&I have to employ customised algorithms to accurately determine the individual identities associated with that data.
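As an illustration only, here is a minimal Python sketch of grouping records by individual without a national identifier, assuming each record carries a forename, surname, date of birth and postcode. The normalisation rules and the `match_key` composite key are hypothetical simplifications; the client’s Identity searching logic was bespoke and considerably more sophisticated (for example, it would need to cope with typos, name changes and address history).

```python
import re
from collections import defaultdict
from typing import Dict, List


def normalise_name(name: str) -> str:
    # Lowercase and drop non-letters, so "O'Brien" and "OBrien" both become "obrien".
    return re.sub(r"[^a-z]", "", name.lower())


def normalise_postcode(postcode: str) -> str:
    # UK postcodes are often written with or without the inner space.
    return postcode.replace(" ", "").upper()


def match_key(record: Dict[str, str]) -> str:
    """Hypothetical composite key standing in for a unique identifier."""
    return "|".join([
        normalise_name(record["surname"]),
        normalise_name(record["forename"])[:1],  # initial only, to tolerate "Jon"/"Jonathan"
        record["dob"],                           # assumed ISO format YYYY-MM-DD
        normalise_postcode(record["postcode"]),
    ])


def group_identities(records: List[Dict[str, str]]) -> Dict[str, List[Dict[str, str]]]:
    """Group incoming records that appear to describe the same individual."""
    groups: Dict[str, List[Dict[str, str]]] = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)
    return dict(groups)
```

An exact-key approach like this is brittle, which is precisely why real identity resolution tends to become a complex, customised algorithm.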

The online workload also presented significant complexities. The orchestration of API requests was managed by multiple internally developed frameworks, which determined the program execution flow through lookups in datastores, and handled conditional branches by analysing the output of the code. We should not overlook the level of customisation this framework applied for each customer. For example, some flows were orchestrated with ad-hoc configuration, catering for implementation details or specific needs of the systems interacting with our client’s online products. These configurations started out as exceptions, but they likely became the norm over time as our client expanded their online offerings.
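The orchestration style described above, where execution flow comes from datastore lookups and branches on each step’s output, can be sketched as a small table-driven state machine. This is a minimal Python illustration under stated assumptions: the `DEFAULT_FLOW` table, step names and `CUSTOMER_OVERRIDES` mechanism are hypothetical, and the in-memory dicts stand in for the Mainframe datastores.

```python
from typing import Callable, Dict, List, Optional, Tuple

# (step, outcome) -> next step; None ends the flow. Stands in for a datastore lookup.
FlowTable = Dict[Tuple[str, str], Optional[str]]

DEFAULT_FLOW: FlowTable = {
    ("authenticate", "ok"): "fetch_data",
    ("authenticate", "denied"): None,
    ("fetch_data", "found"): "aggregate",
    ("fetch_data", "not_found"): None,
    ("aggregate", "ok"): None,
}

# Hypothetical ad-hoc configuration for one customer: skip aggregation.
CUSTOMER_OVERRIDES: Dict[str, FlowTable] = {
    "customer-a": {("fetch_data", "found"): None},
}


def run_flow(customer: str, steps: Dict[str, Callable[[dict], str]],
             context: dict, start: str = "authenticate") -> List[Tuple[str, str]]:
    """Execute steps, choosing each next step by looking up (step, outcome)."""
    table = {**DEFAULT_FLOW, **CUSTOMER_OVERRIDES.get(customer, {})}
    trace: List[Tuple[str, str]] = []
    step: Optional[str] = start
    while step is not None:
        outcome = steps[step](context)  # each step reports an outcome string
        trace.append((step, outcome))
        step = table[(step, outcome)]
    return trace
```

Because per-customer overrides are merged over the default table, each override is cheap to add, which illustrates how exceptions can quietly accumulate into the norm.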

This was implemented through an Entitlements engine that operated across layers to ensure that customers accessing products and underlying data were authenticated and authorised to retrieve either raw or aggregated data, which would then be exposed to them through an API response.
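Here is a minimal sketch of the kind of check an Entitlements engine performs, assuming entitlements can be modelled as a per-customer mapping from product to the level of data (raw or aggregated) the customer may retrieve. The `Level` enum and `ENTITLEMENTS` store are hypothetical; the client’s engine operated across several layers rather than in a single function.

```python
from enum import IntEnum
from typing import Dict


class Level(IntEnum):
    NONE = 0
    AGGREGATED = 1  # may see aggregated data only
    RAW = 2         # may see raw and aggregated data


# Hypothetical entitlement store: customer -> product -> granted level.
ENTITLEMENTS: Dict[str, Dict[str, Level]] = {
    "customer-a": {"insights": Level.RAW},
    "customer-b": {"insights": Level.AGGREGATED},
}


def is_authorised(customer: str, authenticated: bool, product: str, requested: Level) -> bool:
    """Allow a request only if the caller is authenticated and holds a sufficient level."""
    if not authenticated:
        return False
    granted = ENTITLEMENTS.get(customer, {}).get(product, Level.NONE)
    return requested != Level.NONE and granted >= requested
```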

Incremental Legacy Displacement: Principles, Benefits, and Considerations

Considering the scope, risks, and complexity of the Consumer Subsystem, we believed the following principles would be tightly linked to the programme’s success:

- Early Risk Reduction: With engineering starting from the beginning, a “Fail-Fast” approach would help us identify potential pitfalls and uncertainties early, preventing delays from a programme delivery standpoint. The main pitfalls and uncertainties were:
  - Outcome Parity: The client emphasised the importance of upholding outcome parity between the existing legacy system and the new system (note that this concept differs from Feature Parity). In the client’s legacy system, various attributes were generated for each consumer, and given the strict industry regulations, maintaining continuity was essential to ensure contractual compliance. We needed to proactively identify discrepancies in data early on, promptly address or explain them, and establish trust and confidence with both our client and their customers at an early stage.
  - Cross-functional requirements: The Mainframe is a highly performant machine, and there were uncertainties about whether a solution on the Cloud would satisfy the Cross-functional requirements.
- Deliver Value Early: Collaboration with the client would ensure we could identify a subset of the most critical Business Capabilities to deliver early, allowing us to break the system apart into smaller increments. These represented thin slices of the overall system. Our goal was to build upon these slices iteratively and frequently, helping us accelerate our overall learning in the domain. Furthermore, working through a thin slice helps reduce the cognitive load on the team, preventing analysis paralysis and ensuring value is delivered consistently. To achieve this, a platform built around the Mainframe that provides better control over how customers are migrated plays a vital role. Using patterns such as Dark Launching and Canary Release would put us in the driver’s seat for a smooth transition to the Cloud. Our goal was a silent migration process, where customers would transition seamlessly between systems without any noticeable impact. This would only be possible through comprehensive comparison testing and continuous monitoring of outputs from both systems.
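Dark Launching and Canary Release both depend on controlling which system serves which customer. One common way to do this, shown here as a minimal Python sketch rather than the client’s actual platform, is deterministic hash-based bucketing: each customer ID maps to a stable bucket from 0 to 99, and customers below a rollout percentage are served by the Cloud. In dark-launch mode, the Cloud also processes requests for Mainframe-served customers, but its responses are not returned. The `bucket` and `route` functions and their parameters are hypothetical.

```python
import hashlib


def bucket(customer_id: str) -> int:
    """Map a customer ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route(customer_id: str, canary_percent: int, dark_launch: bool) -> dict:
    """Decide which system answers a customer and whether Cloud runs in the shadow.

    canary_percent: share of customers (0-100) whose answers come from Cloud.
    dark_launch: if True, Cloud also processes requests answered by the Mainframe.
    """
    serve_from_cloud = bucket(customer_id) < canary_percent
    return {
        "serve": "cloud" if serve_from_cloud else "mainframe",
        # Shadow traffic lets outputs be compared without affecting the customer.
        "shadow_cloud": dark_launch and not serve_from_cloud,
    }
```

Because buckets are stable, raising `canary_percent` only ever adds customers to the Cloud cohort; nobody flips back and forth between systems.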

With the above principles and requirements in mind, we opted for an Incremental Legacy Displacement approach in conjunction with Dual Run. For each slice of the system we were rebuilding on the Cloud, we planned to feed both the new and the as-is system with the same inputs and run them in parallel. This allows us to extract both systems’ outputs and check whether they are the same, or at least within an acceptable tolerance. In this context, we defined Incremental Dual Run as: using a Transitional Architecture to support slice-by-slice displacement of capability away from a legacy environment, thereby enabling target and as-is systems to run temporarily in parallel and deliver value.
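The output comparison at the heart of Dual Run can be sketched as follows, assuming each system produces a mapping from record key to a dictionary of attributes. Non-numeric attributes must match exactly, and numeric attributes are compared with `math.isclose` using a hypothetical relative tolerance; in practice, what counts as an “acceptable tolerance” would be agreed per attribute with the client.

```python
import math
from typing import Any, Dict, List, Tuple

Outputs = Dict[str, Dict[str, Any]]  # record key -> attribute name -> value


def values_match(a: Any, b: Any, rel_tol: float) -> bool:
    # Numbers may differ slightly (e.g. rounding); everything else must be equal.
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        return math.isclose(a, b, rel_tol=rel_tol)
    return a == b


def compare_outputs(legacy: Outputs, target: Outputs,
                    rel_tol: float = 1e-9) -> List[Tuple[str, str, Any, Any]]:
    """Return (key, attribute, legacy_value, target_value) for every discrepancy."""
    diffs = []
    for key in sorted(set(legacy) | set(target)):
        old, new = legacy.get(key, {}), target.get(key, {})
        for attr in sorted(set(old) | set(new)):
            a, b = old.get(attr), new.get(attr)  # a missing value shows up as None
            if not values_match(a, b, rel_tol):
                diffs.append((key, attr, a, b))
    return diffs
```

Reporting discrepancies per attribute, rather than a single pass/fail, supports the outcome-parity goal of explaining each difference to the client.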

We decided to adopt this architectural pattern to strike a balance between delivering value, discovering and managing risks early, ensuring outcome parity, and maintaining a smooth transition for our client throughout the programme.

Incremental Legacy Displacement strategy

To offload capabilities to our target architecture, the team worked closely with Mainframe SMEs (Subject Matter Experts) and our client’s engineers. This collaboration gave us a ‘just enough’ understanding of the current as-is landscape, in terms of both technical and business capabilities; it helped us design a Transitional Architecture to connect the existing Mainframe to the Cloud-based system, the latter being developed by other delivery workstreams in the programme.

Our approach began with decomposing the Consumer subsystem into specific business and technical domains, including data load, data retrieval & aggregation, and the product layer accessible through external-facing APIs.

Given the nature of our client’s business, we recognised early that we could exploit a major technical boundary to organise our programme. The client’s workload was largely analytical, processing mostly external data to produce insight that was sold directly to customers. We therefore saw an opportunity to split our transformation programme into two parts, one around data curation and the other around data serving and product use cases, using data interactions as a seam. This was the first high-level seam we identified. We then needed to break the programme down further into smaller increments.

On the data curation side, we identified that the data sets were managed largely independently of each other; that is, while there were upstream and downstream dependencies, there was no entanglement of the data sets during curation, i.e. ingested data sets had a one-to-one mapping to their input files.
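The “no entanglement” property above is the kind of thing that can be checked mechanically before choosing a data set to migrate. The minimal Python sketch below assumes lineage is available as (data set, input file) pairs; the `entangled` function and the sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def entangled(lineage: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Return entries that break a one-to-one data set/input file mapping.

    Keys are prefixed with 'dataset:' or 'file:' to show which side is shared.
    """
    files_per_dataset: Dict[str, Set[str]] = defaultdict(set)
    datasets_per_file: Dict[str, Set[str]] = defaultdict(set)
    for dataset, input_file in lineage:
        files_per_dataset[dataset].add(input_file)
        datasets_per_file[input_file].add(dataset)
    problems: Dict[str, Set[str]] = {}
    for dataset, files in files_per_dataset.items():
        if len(files) > 1:  # one data set fed by several files
            problems[f"dataset:{dataset}"] = files
    for input_file, datasets in datasets_per_file.items():
        if len(datasets) > 1:  # one file feeding several data sets
            problems[f"file:{input_file}"] = datasets
    return problems
```

An empty result means each data set can, in principle, be moved on its own without touching any other data set’s curation.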

We then collaborated closely with SMEs to identify the seams within the technical implementation (laid out below) and plan how we could deliver a cloud migration for any given data set, eventually to the point where data sets could be delivered in any order (Database Writers Processing Pipeline Seam, Coarse Seam: Batch Pipeline Step Handoff as Seam, and Most Granular: Information Attribute Seam). As long as upstream and downstream dependencies could exchange data with the new cloud system, these workloads could be modernised independently of each other.

On the serving and product side, we found that any given product used 80% of the capabilities and data sets that our client had created, so we needed a different approach. After investigating the way access was sold to customers, we found that we could take a “customer segment” approach to deliver the work incrementally. This entailed finding an initial subset of customers who had purchased a smaller proportion of the capabilities and data, reducing the scope and time needed to deliver the first increment. Subsequent increments would build on top of prior work, enabling further customer segments to be cut over from the as-is to the target architecture. This required a different set of seams and transitional architecture, which we discuss in Database Readers and Downstream processing as a Seam.
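The “customer segment” idea can be illustrated with a small Python sketch, assuming each customer’s purchases are known as a set of capability names. Given the capabilities already delivered on the Cloud, the customers that can be cut over are exactly those whose purchases form a subset of them. The greedy `first_segment` helper, which grows the delivered set one capability at a time, is a hypothetical heuristic, not the method used on the programme.

```python
from typing import Dict, FrozenSet, Set

Purchases = Dict[str, FrozenSet[str]]  # customer -> capabilities purchased


def migratable(purchases: Purchases, delivered: Set[str]) -> Set[str]:
    """Customers whose every purchased capability is already on the Cloud."""
    return {c for c, caps in purchases.items() if caps <= delivered}


def first_segment(purchases: Purchases, min_customers: int) -> Set[str]:
    """Greedily pick capabilities until at least min_customers can be cut over."""
    delivered: Set[str] = set()
    all_caps: Set[str] = set().union(*purchases.values())
    while len(migratable(purchases, delivered)) < min_customers and delivered != all_caps:
        # Add the capability that unlocks the most customers; max() keeps the first
        # of equal candidates, so ties go to the alphabetically first capability.
        best = max(sorted(all_caps - delivered),
                   key=lambda cap: len(migratable(purchases, delivered | {cap})))
        delivered.add(best)
    return delivered
```

For example, with three customers buying `{score}`, `{score, address}` and four capabilities respectively, asking for two customers yields the increment `{score, address}`, leaving the third customer for a later segment.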

In effect, we ran a thorough analysis of the components that functioned as a cohesive whole from a business perspective but were built as distinct elements that could be migrated independently to the Cloud, and laid this out as a programme of sequenced increments.