Data engineers rely on math and statistics to coax insights out of complex, noisy data. Among the most important domains is calculus, which gives us integrals, most commonly described as calculating the area under a curve. This is useful for engineers because many data that express a rate can be integrated to produce a useful measurement. For example:

- Point-in-time sensor readings, once integrated, can produce time-weighted averages

- The integral of vehicle velocities can be used to calculate distance traveled

- Data volume transferred results from integrating network transfer rates

Of course, at some point most students learn how to calculate integrals, and the computation itself is simple on batch, static data. However, there are common engineering patterns that require low-latency, incremental computation of integrals to realize business value, such as setting alerts based on equipment performance thresholds or detecting anomalies in logistics use-cases.

| Point-in-time Measurement | Integral used to calculate | Low-Latency Business Use-case & Value |
|---|---|---|
| Windspeed | Time-Weighted Average | Shut down sensitive equipment at operating thresholds for cost avoidance |
| Velocity | Distance | Anticipate logistics delays to alert customers |
| Transfer Rate | Total Volume Transferred | Detect network bandwidth issues or anomalous activities |

Calculating integrals is an important tool in the toolbelt of modern data engineers working on real-world sensor data. These are just a few examples, and while the techniques described below can be adapted to many data engineering pipelines, the remainder of this blog will focus on calculating streaming integrals on real-world sensor data to derive time-weighted averages.

An Abundance of Sensors

A common pattern when working with sensor data is actually an overabundance of data: transmitting at 60 hertz, a temperature sensor on a wind turbine generates over 5 million data points per day. Multiply that by 100 sensors per turbine and a single piece of equipment might produce multiple GB of data per day. Also consider that for most physical processes, each reading is most likely nearly identical to the previous reading.
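The figures above can be checked with a quick back-of-the-envelope calculation. In this sketch the sample rate and sensor count come from the text, while the 16 bytes per reading is purely an assumption (an 8-byte timestamp plus an 8-byte value); real payloads vary with encoding and metadata.

```python
# Back-of-the-envelope data volume for a single turbine.
SAMPLE_RATE_HZ = 60          # from the text
SENSORS_PER_TURBINE = 100    # from the text
BYTES_PER_READING = 16       # assumption: 8-byte timestamp + 8-byte float value

SECONDS_PER_DAY = 24 * 60 * 60

readings_per_sensor_per_day = SAMPLE_RATE_HZ * SECONDS_PER_DAY
readings_per_turbine_per_day = readings_per_sensor_per_day * SENSORS_PER_TURBINE
gb_per_turbine_per_day = readings_per_turbine_per_day * BYTES_PER_READING / 1e9

print(readings_per_sensor_per_day)       # 5184000
print(readings_per_turbine_per_day)      # 518400000
print(round(gb_per_turbine_per_day, 1))  # 8.3
```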

While storing this is cheap, transmitting it may not be, and many IoT production systems today have methods to distill this deluge of data. Many sensors, or their intermediate systems, are set up to only transmit a reading when something “interesting” happens, such as changing from one binary state to another or measurements that are 5% different than the last. Therefore, for the data engineer, the absence of new readings can be significant in itself (nothing has changed in the system), or might represent late arriving data due to a network outage in the field.
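To make the "only transmit when something interesting happens" idea concrete, here is a minimal Python sketch of a report-by-exception filter. The function name `report_by_exception`, the `rel_threshold` parameter, and the sample readings are all hypothetical; real sensors and gateways implement this in their own firmware or middleware, often with additional rules such as a maximum silence interval.

```python
from typing import Iterable, Iterator, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp_seconds, value)


def report_by_exception(
    readings: Iterable[Reading], rel_threshold: float = 0.05
) -> Iterator[Reading]:
    """Yield only readings that differ from the last transmitted value
    by more than rel_threshold (5% by default)."""
    last_sent: Optional[float] = None
    for ts, value in readings:
        if last_sent is None:
            changed = True                      # always send the first reading
        elif last_sent == 0:
            changed = value != 0                # avoid dividing by zero
        else:
            changed = abs(value - last_sent) / abs(last_sent) > rel_threshold
        if changed:
            last_sent = value
            yield ts, value


# Hypothetical raw readings: most are nearly identical to the previous one.
raw = [(0, 10.0), (1, 10.1), (2, 10.2), (3, 10.6), (4, 10.7), (5, 9.9)]
print(list(report_by_exception(raw)))
# [(0, 10.0), (3, 10.6), (5, 9.9)]
```

Note that the comparison is against the last transmitted value, not the previous raw reading, so slow drift is still reported once it accumulates past the threshold.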

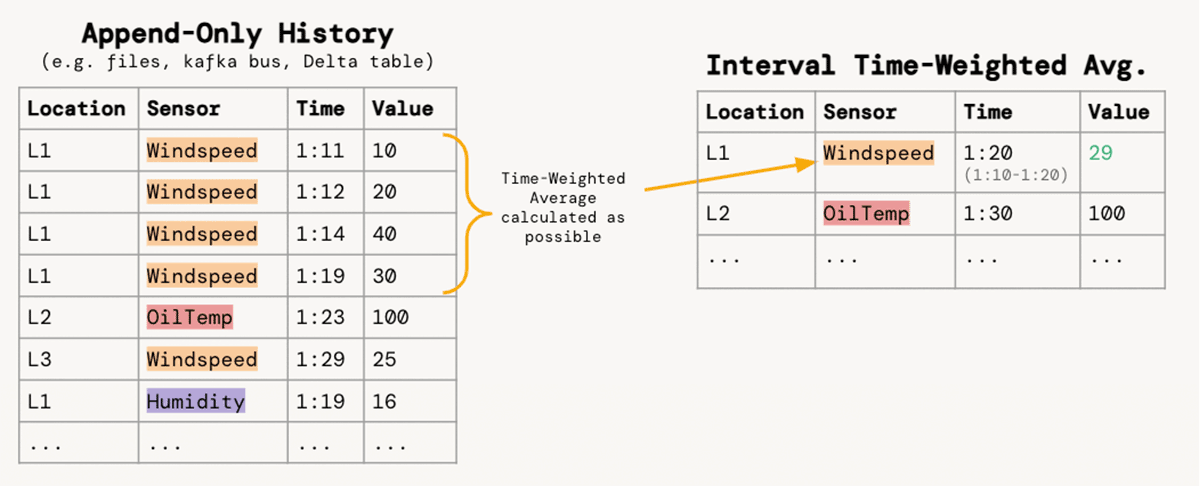

For teams of service engineers who are responsible for analyzing and preventing equipment failure, the ability to derive timely insight depends on the data engineers who turn massive quantities of sensor data into usable analysis tables. We will focus on the requirement to aggregate a narrow, append-only stream of sensor readings into 10-minute intervals for each location/sensor pair with the time-weighted average of values.

Aside: Integrals Refresher

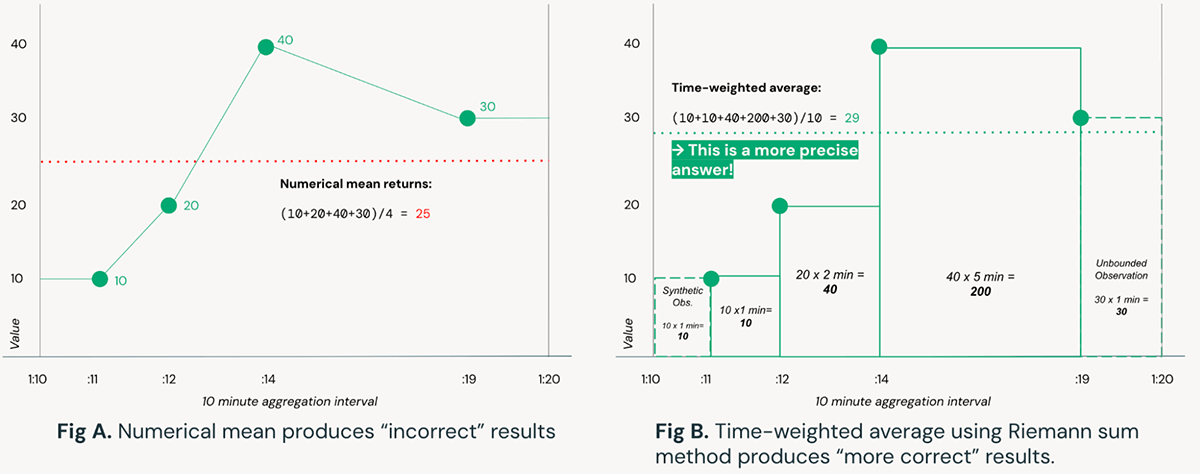

Put simply, an integral is the area under a curve. While there are robust mathematical techniques to approximate an equation and then symbolically calculate the integral for any curve, for the purposes of real-time streaming data we will rely on a numerical approximation using Riemann sums, as they can be computed more efficiently as data arrive over time. For an illustration of why the application of integrals is important, consider the example below:

Figure A relies on a simple numerical mean to compute the average of a sensor reading over a time interval. In contrast, Figure B uses a Riemann sum approach to calculate time-weighted averages, resulting in a more precise answer; this could be extended further with trapezoids (Trapezoidal rule) instead of rectangles. Consider that the result produced by the naive method in Figure A is over 10% different than the method in Figure B, which in complex systems such as wind turbines may be the difference between steady-state operations and equipment failure.
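The contrast between the two approaches can be sketched in a few lines of Python. The readings below are hypothetical and are not the values shown in Figures A and B; the function names are likewise illustrative. The Riemann version treats each value as held constant until the next reading (a left Riemann sum), which suits sensors that only report on change.

```python
from typing import List, Tuple

Reading = Tuple[float, float]  # (timestamp_seconds, value)


def naive_average(readings: List[Reading]) -> float:
    """Plain mean of the values, ignoring how long each value was held."""
    return sum(v for _, v in readings) / len(readings)


def time_weighted_average(readings: List[Reading], window_end: float) -> float:
    """Left Riemann sum: each value is held until the next reading
    (or the end of the window), then divided by the covered duration."""
    readings = sorted(readings)
    area = 0.0
    for i, (t0, v0) in enumerate(readings):
        t1 = readings[i + 1][0] if i + 1 < len(readings) else window_end
        area += v0 * (t1 - t0)
    return area / (window_end - readings[0][0])


def trapezoid_average(readings: List[Reading]) -> float:
    """Trapezoidal rule: linear interpolation between consecutive readings."""
    readings = sorted(readings)
    area = sum(
        (v0 + v1) / 2 * (t1 - t0)
        for (t0, v0), (t1, v1) in zip(readings, readings[1:])
    )
    return area / (readings[-1][0] - readings[0][0])


# Hypothetical sparse readings within one 10-minute (600 s) window.
sample = [(0.0, 10.0), (60.0, 20.0), (120.0, 30.0), (540.0, 20.0)]

print(naive_average(sample))               # 20.0
print(time_weighted_average(sample, 600))  # 26.0
print(trapezoid_average(sample))
```

In this made-up sample the value 30 is held for most of the window, so the time-weighted average is noticeably higher than the simple mean, which gives every reading equal weight regardless of duration.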

Solution Overview

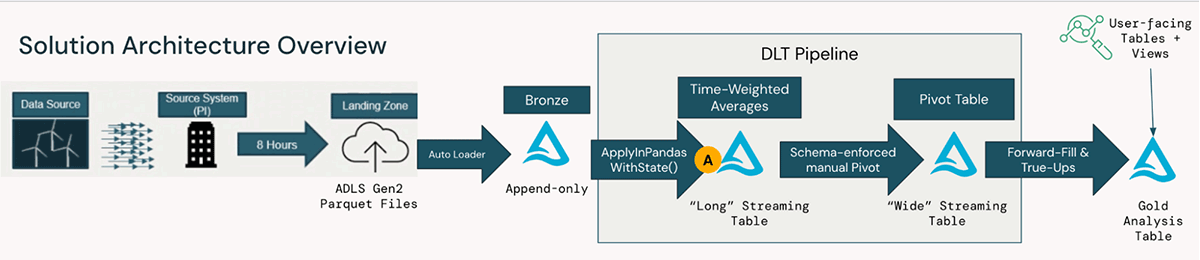

For a large American utility company, this pattern was implemented as part of an end-to-end solution to turn high-volume turbine data into actionable insights for preventive maintenance and other proprietary use-cases. The diagram below illustrates the transformations of raw turbine data ingested from hundreds of machines, through ingestion from cloud storage, to high-performance streaming pipelines orchestrated with Delta Live Tables, to user-facing tables and views:

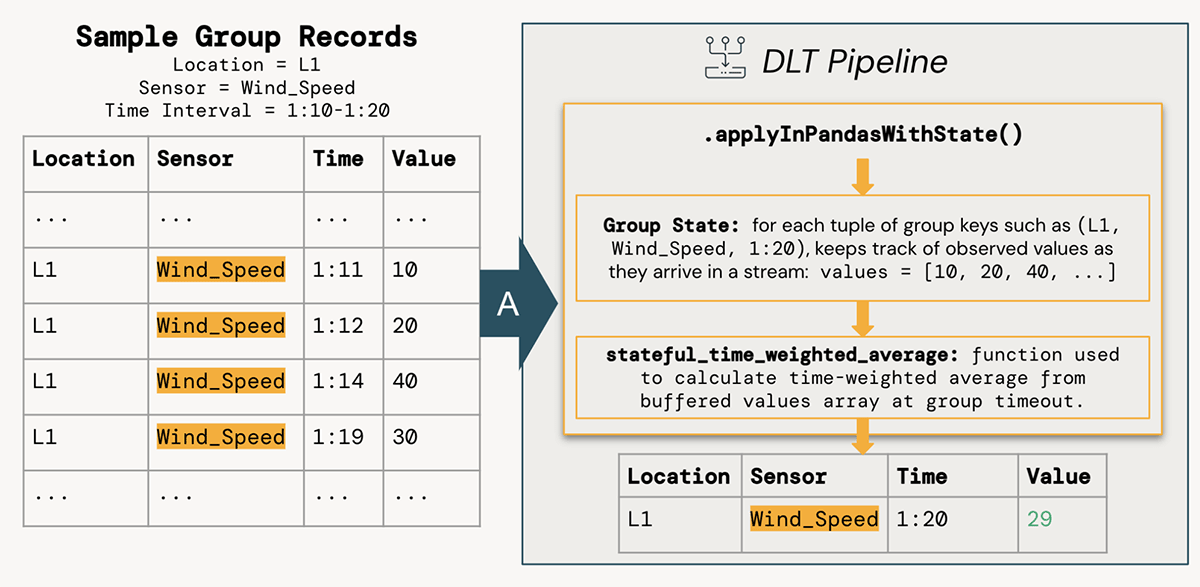

The code samples (see delta-live-tables-notebooks github) focus on the transformation step A labeled above, specifically applyInPandasWithState() for stateful time-weighted average computation. The remainder of the solution, including working with other software tools that handle IoT data such as Pi Historians, is simple to implement with the open-source standards and flexibility of the Databricks Data Intelligence Platform.

Stateful Processing of Integrals

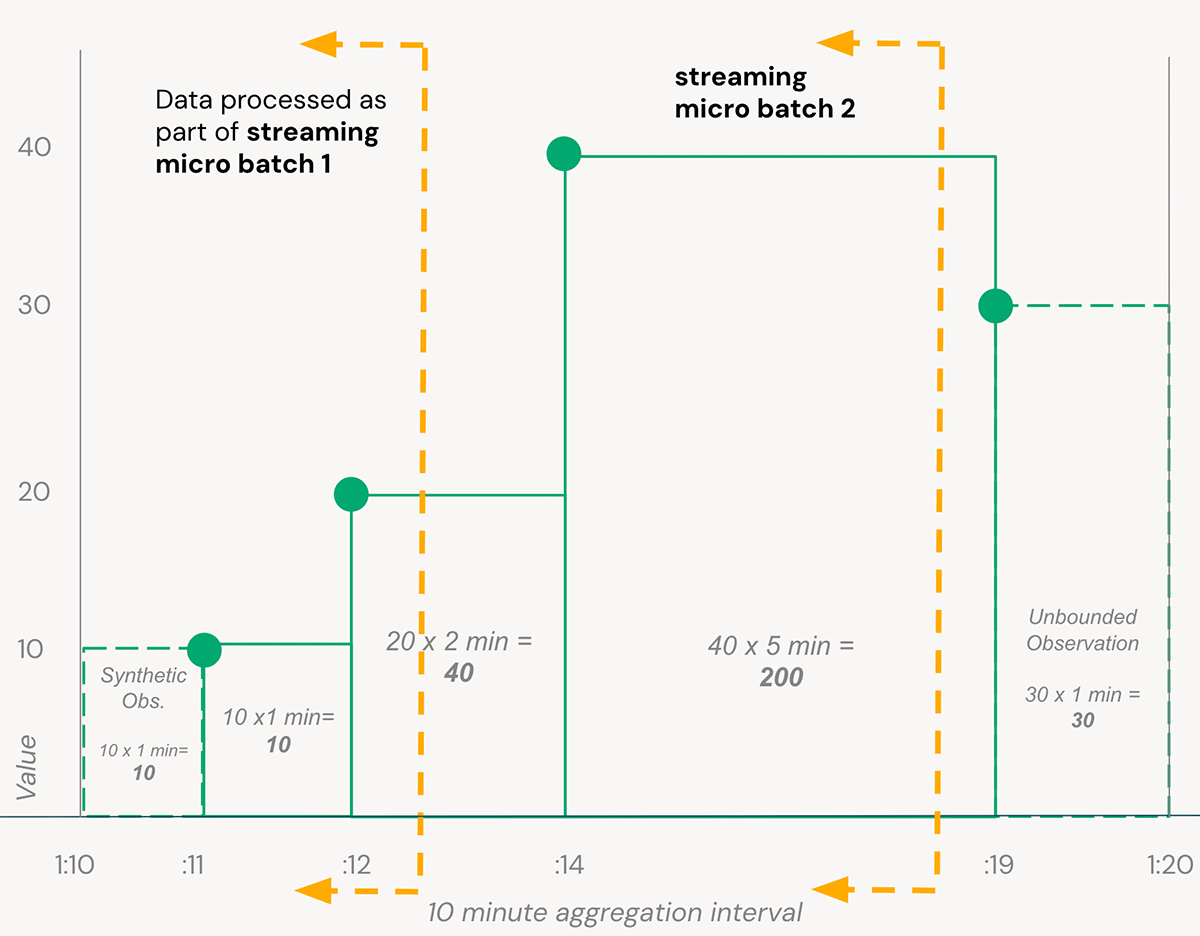

We can now carry forward the simple example from Figure B in the Integrals Refresher section above: to process data quickly from our turbine sensors, a solution must consider data as it arrives as part of a stream. In this example, we want to compute aggregates over a 10-minute window for each turbine+sensor combination. As data is arriving continuously and a pipeline processes micro-batches of data as they become available, we must keep track of the state of each aggregation window until the point we can consider that time interval complete (controlled with Structured Streaming watermarks).
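The bookkeeping described above can be modeled in plain Python to show the idea without a Spark cluster. This is a simplified sketch, not Spark's implementation: the class `WindowState`, the `allowed_lateness` parameter, and the sample batches are hypothetical, and the watermark here is simply the maximum event time seen minus the allowed lateness. Each window's average covers the span from its first reading to the window end.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

WINDOW_SECONDS = 600  # 10-minute windows, as in the text

Key = Tuple[str, str, int]         # (location, sensor, window_start_seconds)
Row = Tuple[str, str, int, float]  # (location, sensor, timestamp_seconds, value)


def time_weighted_average(readings: List[Tuple[int, float]], window_end: int) -> float:
    """Left Riemann sum over sorted (timestamp, value) pairs."""
    area = 0.0
    for i, (t0, v0) in enumerate(readings):
        t1 = readings[i + 1][0] if i + 1 < len(readings) else window_end
        area += v0 * (t1 - t0)
    return area / (window_end - readings[0][0])


class WindowState:
    """Buffers readings per window; emits a window once the watermark passes its end."""

    def __init__(self, allowed_lateness: int) -> None:
        self.allowed_lateness = allowed_lateness
        self.buffers: Dict[Key, List[Tuple[int, float]]] = defaultdict(list)
        self.max_event_time = float("-inf")
        self.watermark = float("-inf")

    def process_batch(self, batch: List[Row]) -> List[Tuple[Key, float]]:
        for location, sensor, ts, value in batch:
            window_start = ts - ts % WINDOW_SECONDS
            if window_start + WINDOW_SECONDS <= self.watermark:
                continue  # window already closed: drop the late reading
            self.buffers[(location, sensor, window_start)].append((ts, value))
            self.max_event_time = max(self.max_event_time, ts)

        # Advance the watermark, then close every window that ends at or before it.
        self.watermark = max(self.watermark, self.max_event_time - self.allowed_lateness)
        ready = sorted(k for k in self.buffers if k[2] + WINDOW_SECONDS <= self.watermark)
        closed = []
        for key in ready:
            readings = sorted(self.buffers.pop(key))
            closed.append((key, time_weighted_average(readings, key[2] + WINDOW_SECONDS)))
        return closed


state = WindowState(allowed_lateness=60)
print(state.process_batch([("T1", "temp", 0, 10.0), ("T1", "temp", 300, 20.0)]))  # []
print(state.process_batch([("T1", "temp", 630, 30.0)]))                            # []
print(state.process_batch([("T1", "temp", 700, 40.0)]))
# [(('T1', 'temp', 0), 15.0)]
```

The first window is emitted only in the third micro-batch, once a reading at 700 seconds pushes the watermark (640) past the window end (600). Until then its readings stay buffered, which is the state a streaming engine has to keep for every open window.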

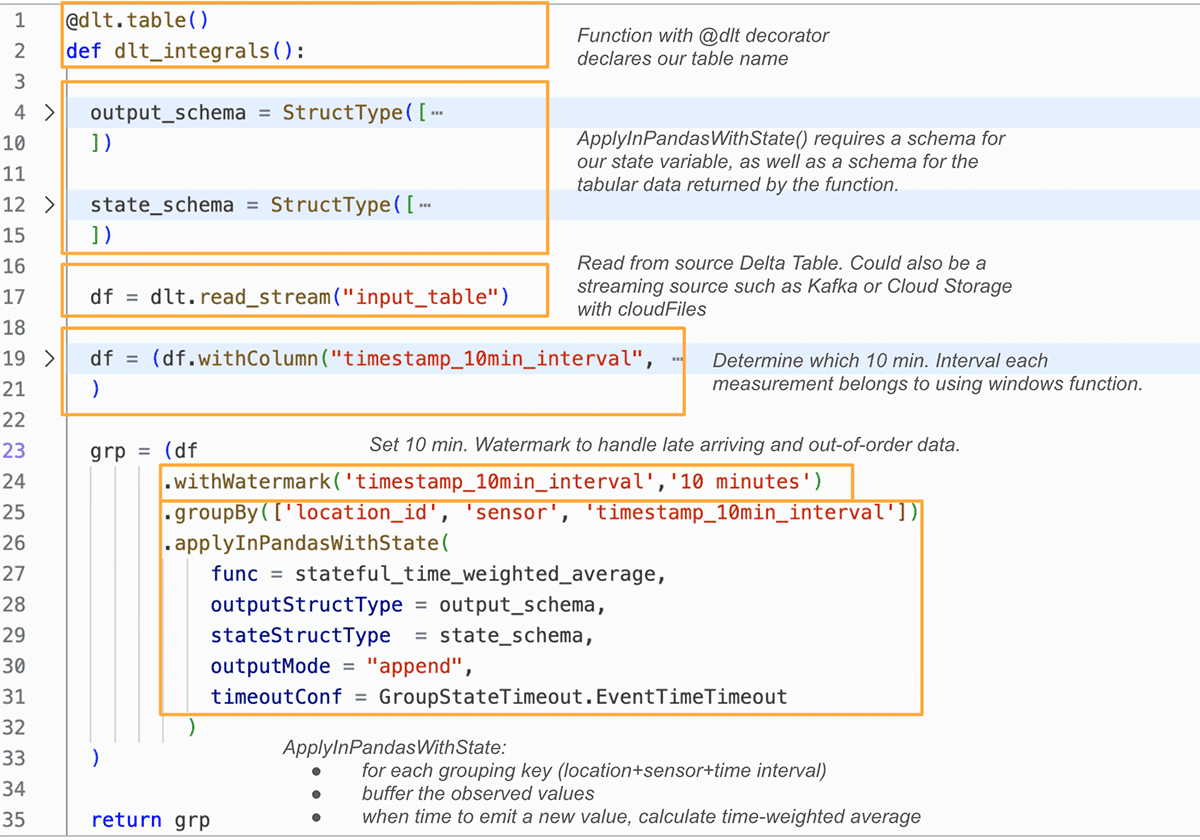

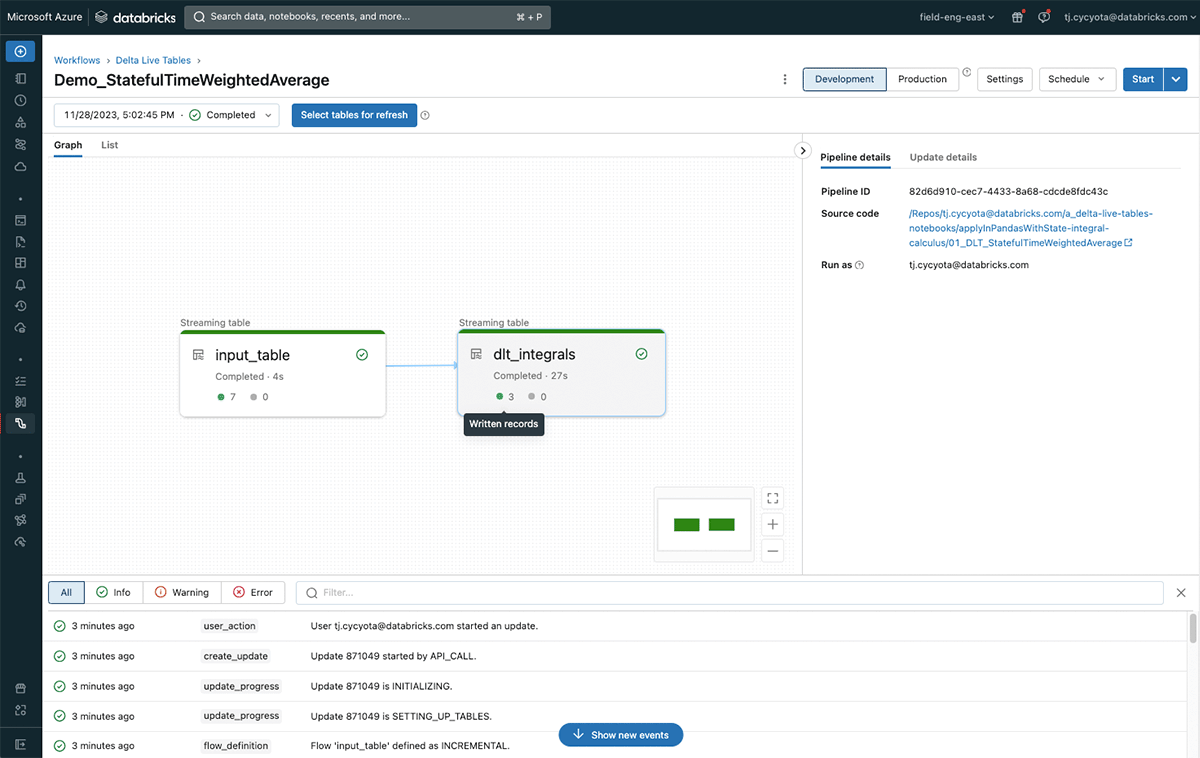

Implementing this in Delta Live Tables (DLT), the Databricks declarative ETL framework, allows us to focus on the transformation logic rather than operational issues like stream checkpoints and compute optimization. See the example repo for full code samples, but here’s how we use Spark’s applyInPandasWithState() function to efficiently compute stateful time-weighted averages in a DLT pipeline:

In the groupBy().applyInPandasWithState() pipeline above, we use a simple Pandas function named stateful_time_weighted_average to compute time-weighted averages. This function effectively “buffers” observed values for each state group until that group can be “closed” when the stream has seen sufficiently later timestamp values (controlled by the watermark). These buffered values are then passed through a simple Python function to compute Riemann sums.

The benefit of this approach is the ability to write a robust, testable function that operates on a single Pandas DataFrame, but can be computed in parallel across all workers in a Spark cluster on thousands of state groups simultaneously. The ability to keep track of state and determine when to emit the row for each location+sensor+time interval group is handled with the timeoutConf setting and use of the state.hasTimedOut method within the function.

Results and Applications

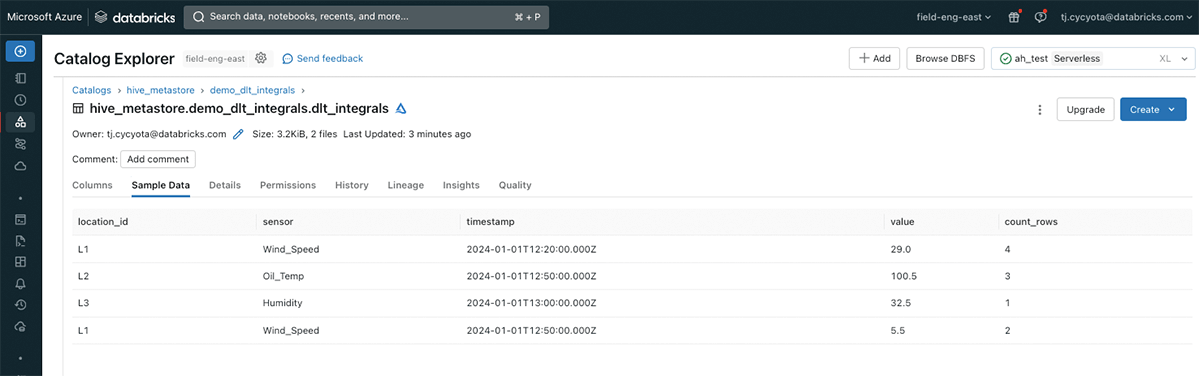

The associated code for this blog walks through the setup of this logic in a Delta Live Tables pipeline with sample data, and is runnable in any Databricks workspace.

The results demonstrate that it is possible to efficiently and incrementally compute integral-based metrics such as time-weighted averages on high-volume streaming data for many IoT use-cases.

For the American utility company that implemented this solution, the impact was tremendous. With a uniform aggregation approach across thousands of wind turbines, data consumers from maintenance, performance, and other engineering departments are able to analyze complex trends and take proactive actions to maintain equipment reliability. This integrated data will also serve as the foundation for future machine learning use-cases around fault prediction and can be joined with high-volume vibration data for additional near real-time analysis.

Stateful streaming aggregations such as integrals are just one tool in the modern data engineer’s toolbelt, and with Databricks it is simple to apply them to business-critical applications involving streaming data.