Posted by Paris Hsu – Product Manager, Android Studio

We shared an exciting live demo from the Developer Keynote at Google I/O 2024 where Gemini transformed a wireframe sketch of an app’s UI into Jetpack Compose code, directly inside Android Studio. While we’re still refining this feature to make sure you get a great experience inside Android Studio, it’s built on top of foundational Gemini capabilities which you can experiment with today in Google AI Studio.

Specifically, we’ll delve into:

- Turning designs into UI code: Convert a simple image of your app’s UI into working code.

- Smart UI fixes with Gemini: Receive suggestions on how to improve or fix your UI.

- Integrating Gemini prompts in your app: Simplify complex tasks and streamline user experiences with tailored prompts.

Note: Google AI Studio offers various general-purpose Gemini models, whereas Android Studio uses a custom version of Gemini which has been specifically optimized for developer tasks. While this means that these general-purpose models may not offer the same depth of Android knowledge as Gemini in Android Studio, they provide a fun and engaging playground to experiment and gain insight into the potential of AI in Android development.

Experiment 1: Turning designs into UI code

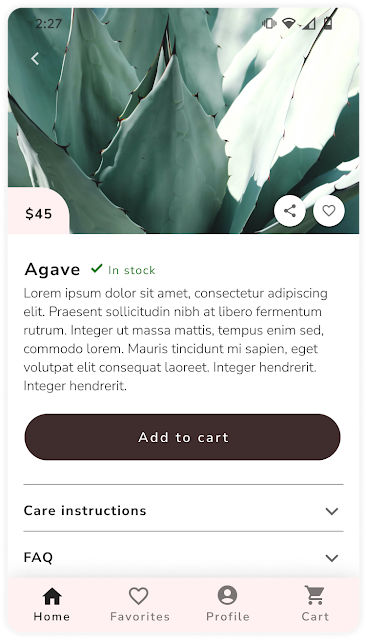

First, to turn designs into Compose UI code: Open the chat prompt section of Google AI Studio, upload an image of your app’s UI screen (see example below) and enter the following prompt:

“Act as an Android app developer. For the image provided, use Jetpack Compose to build the screen so that the Compose Preview is as close to this image as possible. Also make sure to include imports and use Material3.”

Then, click “run” to execute your query and see the generated code. You can copy the generated output directly into a new file in Android Studio.

With this experiment, Gemini was able to infer details from the image and generate corresponding code elements. For example, the original image of the plant detail screen featured a “Care Instructions” section with an expandable icon. Gemini’s generated code included an expandable card specifically for plant care instructions, showcasing its contextual understanding and code generation capabilities.

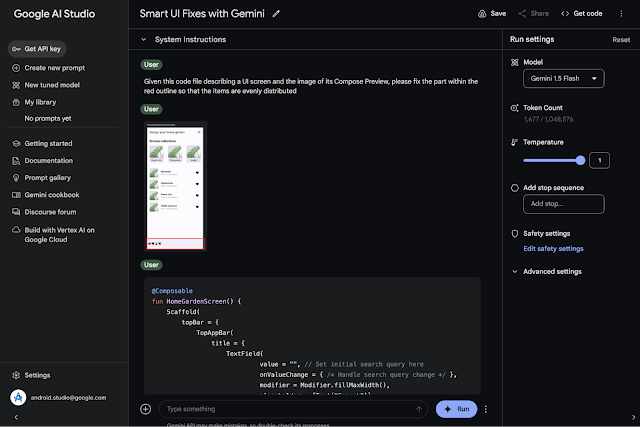

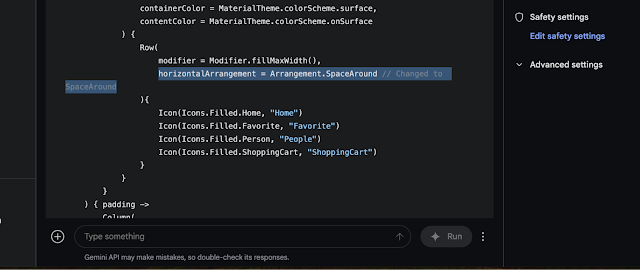
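To make the idea of an “expandable card” concrete, here is a minimal sketch of what such a composable could look like. It is not the code Gemini produced in the demo: the function name `CareInstructionsCard`, its `instructions` parameter, and the sample text in the preview are hypothetical, and the sketch assumes a project that already depends on Compose Material3, the core Material icons, and the Compose preview tooling.

```kotlin
import androidx.compose.animation.AnimatedVisibility
import androidx.compose.foundation.layout.Column
import androidx.compose.foundation.layout.Row
import androidx.compose.foundation.layout.fillMaxWidth
import androidx.compose.foundation.layout.padding
import androidx.compose.material.icons.Icons
import androidx.compose.material.icons.filled.KeyboardArrowDown
import androidx.compose.material.icons.filled.KeyboardArrowUp
import androidx.compose.material3.Card
import androidx.compose.material3.Icon
import androidx.compose.material3.IconButton
import androidx.compose.material3.MaterialTheme
import androidx.compose.material3.Text
import androidx.compose.runtime.Composable
import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.remember
import androidx.compose.runtime.setValue
import androidx.compose.ui.Alignment
import androidx.compose.ui.Modifier
import androidx.compose.ui.tooling.preview.Preview
import androidx.compose.ui.unit.dp

// Hypothetical composable: a card whose body text is shown or hidden
// when the user taps the arrow icon in the header row.
@Composable
fun CareInstructionsCard(instructions: String, modifier: Modifier = Modifier) {
    // Remember whether the card is currently expanded across recompositions.
    var expanded by remember { mutableStateOf(false) }

    Card(modifier = modifier.fillMaxWidth()) {
        Column(modifier = Modifier.padding(16.dp)) {
            Row(verticalAlignment = Alignment.CenterVertically) {
                // The title takes all remaining width so the icon sits at the end.
                Text(
                    text = "Care Instructions",
                    style = MaterialTheme.typography.titleMedium,
                    modifier = Modifier.weight(1f)
                )
                IconButton(onClick = { expanded = !expanded }) {
                    Icon(
                        imageVector = if (expanded) {
                            Icons.Filled.KeyboardArrowUp
                        } else {
                            Icons.Filled.KeyboardArrowDown
                        },
                        contentDescription = if (expanded) "Collapse" else "Expand"
                    )
                }
            }
            // Animate the instructions in and out as the state toggles.
            AnimatedVisibility(visible = expanded) {
                Text(text = instructions, style = MaterialTheme.typography.bodyMedium)
            }
        }
    }
}

// Placeholder text for the preview only; not taken from the demo.
@Preview(showBackground = true)
@Composable
fun CareInstructionsCardPreview() {
    MaterialTheme {
        CareInstructionsCard(instructions = "Water weekly and keep in indirect light.")
    }
}
```

Comparing generated output against a small hand-written reference like this can help when auditing whether the model used state and Material3 components sensibly.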

Experiment 2: Smart UI fixes with Gemini in AI Studio

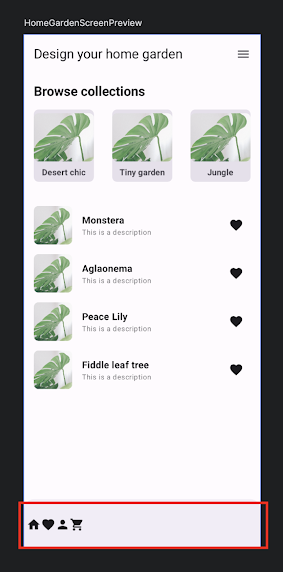

Inspired by “Circle to Search”, another fun experiment you can try is to “circle” problem areas on a screenshot, along with relevant Compose code context, and ask Gemini to suggest appropriate code fixes.

You can explore this concept in Google AI Studio:

1. Upload Compose code and screenshot: Upload the Compose code file for a UI screen and a screenshot of its Compose Preview, with a red outline highlighting the issue. In this case, the issue is that items in the Bottom Navigation Bar should be evenly spaced.
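The post does not show the code that was uploaded or the fix Gemini proposed, so the following is only an illustrative sketch of one common way to get evenly spaced bottom navigation items in Material3: placing `NavigationBarItem`s inside a `NavigationBar`, where each item shares the available width equally. The `PlantBottomBar` name, the `BottomTab` class, and the three tabs are hypothetical.

```kotlin
import androidx.compose.material.icons.Icons
import androidx.compose.material.icons.filled.Favorite
import androidx.compose.material.icons.filled.Home
import androidx.compose.material.icons.filled.Person
import androidx.compose.material3.Icon
import androidx.compose.material3.NavigationBar
import androidx.compose.material3.NavigationBarItem
import androidx.compose.material3.Text
import androidx.compose.runtime.Composable
import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.remember
import androidx.compose.runtime.setValue
import androidx.compose.ui.graphics.vector.ImageVector

// Hypothetical tab model used only for this illustration.
data class BottomTab(val label: String, val icon: ImageVector)

@Composable
fun PlantBottomBar() {
    val tabs = listOf(
        BottomTab("Home", Icons.Filled.Home),
        BottomTab("Favorites", Icons.Filled.Favorite),
        BottomTab("Profile", Icons.Filled.Person)
    )
    // Index of the currently selected tab.
    var selectedIndex by remember { mutableStateOf(0) }

    // NavigationBar lays its children out in a row; each NavigationBarItem
    // takes an equal share of the width, so no manual spacing is needed.
    NavigationBar {
        tabs.forEachIndexed { index, tab ->
            NavigationBarItem(
                selected = index == selectedIndex,
                onClick = { selectedIndex = index },
                icon = { Icon(imageVector = tab.icon, contentDescription = tab.label) },
                label = { Text(tab.label) }
            )
        }
    }
}
```

If the original layout used a plain `Row` with fixed padding between items, a suggestion along these lines (or adding `Modifier.weight(1f)` to each child) is the kind of fix you might expect to see, though the actual response will depend on the code you upload.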

Experiment 3: Integrating Gemini prompts in your app

Gemini can streamline experimentation and development of custom app features. Imagine you want to build a feature that gives users recipe ideas based on an image of the ingredients they have on hand. In the past, this would have involved complex tasks like hosting an image recognition library, training your own ingredient-to-recipe model, and managing the infrastructure to support it all.

Now, with Gemini, you can achieve this with a simple, tailored prompt. Let’s walk through how to add this “Cook Helper” feature to your Android app as an example:

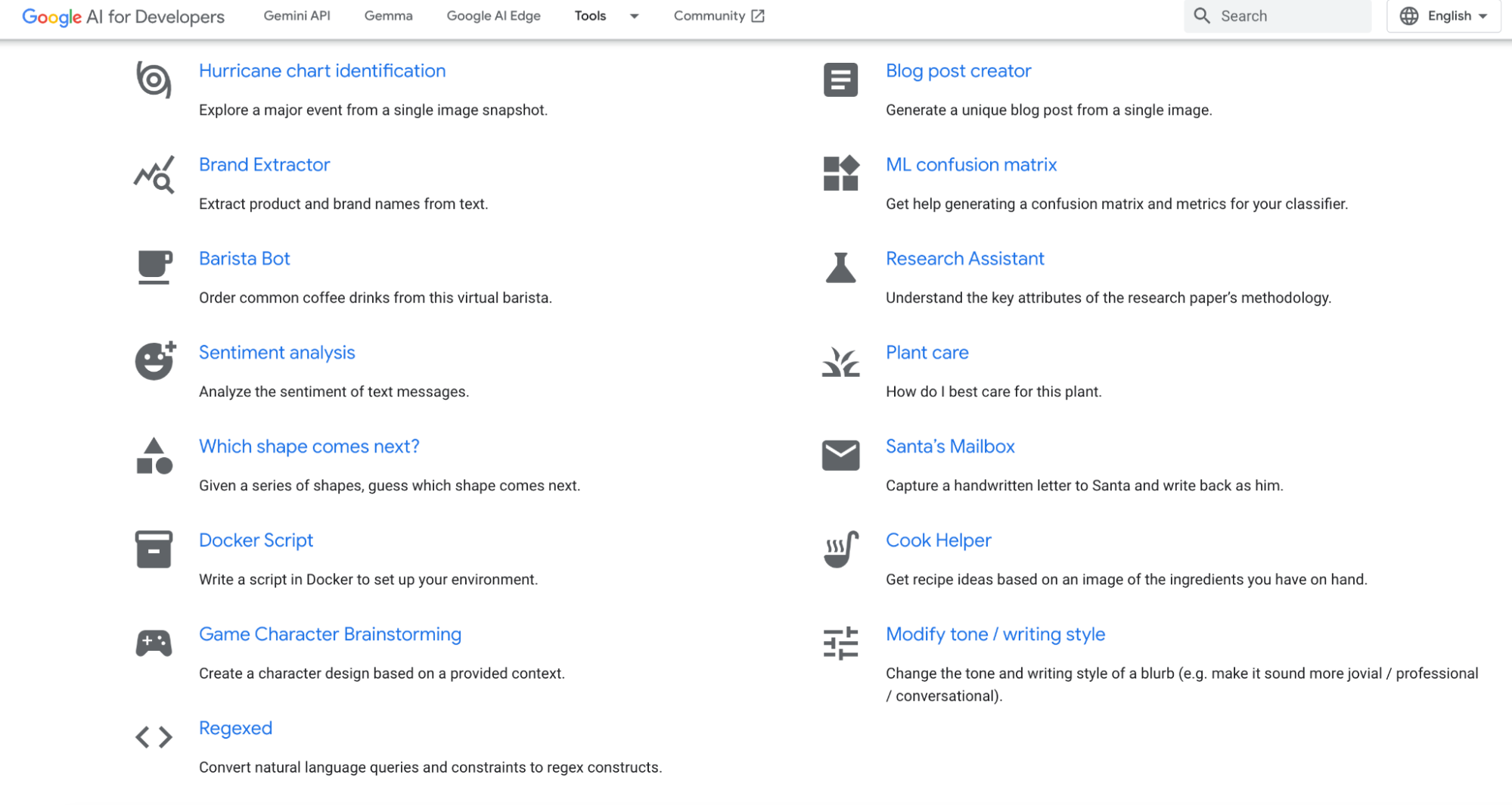

1. Explore the Gemini prompt gallery: Discover example prompts or craft your own. We’ll use the “Cook Helper” prompt.

2. Open and experiment in Google AI Studio: Test the prompt with different images, settings, and models to ensure the model responds as expected and the prompt aligns with your goals.

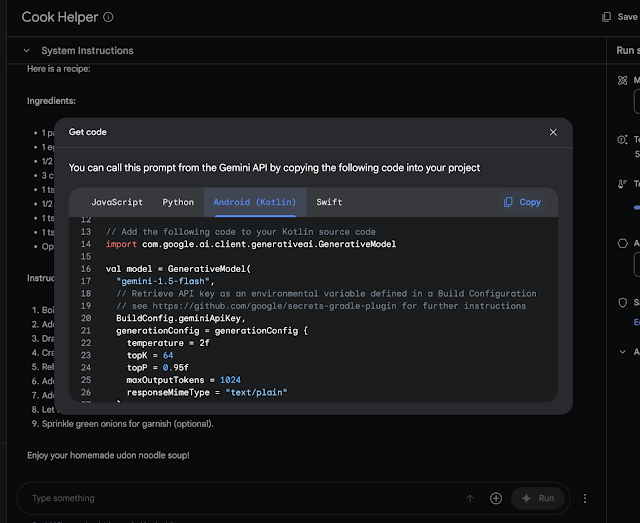

3. Generate the integration code: Once you’re satisfied with the prompt’s performance, click “Get code” and select “Android (Kotlin)”. Copy the generated code snippet.

4. Integrate the Gemini API into Android Studio: Open your Android Studio project. You can either use the new Gemini API app template provided within Android Studio or follow this tutorial. Paste the copied generated prompt code into your project.

That’s it: your app now has a functioning Cook Helper feature powered by Gemini. We encourage you to experiment with different example prompts or even create your own custom prompts to enhance your Android app with powerful Gemini features.
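For orientation, the integration code from the steps above might look roughly like the sketch below. It assumes the Google AI client SDK for Android (`com.google.ai.client.generativeai`) is already a dependency of the project. The model name, the prompt wording, and the `suggestRecipe` function are illustrative assumptions, not the exact snippet that “Get code” generates or the actual Cook Helper prompt.

```kotlin
import android.graphics.Bitmap
import com.google.ai.client.generativeai.GenerativeModel
import com.google.ai.client.generativeai.type.content

// Hypothetical helper: sends a photo of ingredients plus a text prompt
// to Gemini and returns the model's text response (null if there is none).
suspend fun suggestRecipe(ingredientsPhoto: Bitmap, apiKey: String): String? {
    // Assumption: "gemini-1.5-flash" is used as the model name here;
    // use whichever model you tested with in Google AI Studio.
    val model = GenerativeModel(
        modelName = "gemini-1.5-flash",
        apiKey = apiKey
    )

    // Build a multimodal prompt: the image first, then the instruction.
    // The wording below is a stand-in for the real Cook Helper prompt.
    val prompt = content {
        image(ingredientsPhoto)
        text("Suggest a recipe that uses the ingredients shown in this image.")
    }

    // generateContent is a suspend function, so call it from a coroutine.
    val response = model.generateContent(prompt)
    return response.text
}
```

A caller would typically invoke this from a coroutine scope such as `viewModelScope.launch { ... }` and handle exceptions for network or quota errors. Avoid hardcoding the API key in source; reading it from a build-time property is one option.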

Our approach to bringing AI to Android Studio

While these experiments are promising, it’s important to remember that large language model (LLM) technology is still evolving, and we’re learning along the way. LLMs can be non-deterministic, meaning they can sometimes produce unexpected results. That’s why we’re taking a cautious and thoughtful approach to integrating AI features into Android Studio.

Our philosophy towards AI in Android Studio is to augment the developer and ensure they remain “in the loop.” In particular, when the AI is making suggestions or writing code, we want developers to be able to carefully audit the code before checking it into production. That’s why, for example, the new Code Suggestions feature in Canary automatically brings up a diff view for developers to preview how Gemini is proposing to modify their code, rather than blindly applying the changes directly.

We want to make sure these features, like Gemini in Android Studio itself, are thoroughly tested, reliable, and truly useful to developers before we bring them into the IDE.

What’s next?

We invite you to try these experiments and share your favorite prompts and examples with us using the #AndroidGeminiEra tag on X and LinkedIn as we continue to explore this exciting frontier together. Also, make sure to follow Android Developer on LinkedIn, Medium, YouTube, or X for more updates! AI has the potential to revolutionize the way we build Android apps, and we can’t wait to see what we can create together.